The complete guide to user testing websites, apps, and prototypes

Understanding your customers is key to any successful business: it ensures that you give them what they want and minimize what they find frustrating or challenging.

Whether it’s a physical product or a digital experience, including websites or apps, you’re more likely to meet—and exceed—customer expectations if you consider customer feedback throughout the product or campaign ideation, development, and optimization process. That way, you create solutions and experiences that match what customers are seeking.

Leveraging human insight at every stage of an experience's development lets teams across an organization gather qualitative data about the intentions and motivations driving customer behavior. Understanding why customers make decisions makes website and app testing more useful, so you can design and deliver digital experiences your customers will love.

Through testing, human insight empowers teams across your organization, from product and marketing to UX research and design, to:

- Uncover customers’ needs and challenges as the starting point for designing solutions and campaigns

- Make faster, more informed decisions based on known customer needs

- Take a customer-first approach in everything they work on

This guide covers how to plan, conduct, and analyze user tests of your digital experiences. Well-planned and well-executed user research uncovers actionable, qualitative data that your teams can use to make faster, better decisions, resulting in delightful experiences that keep customers satisfied and coming back for more.

Define your research objective

The first step toward gathering meaningful feedback is setting a clear and focused research objective. If you don’t know what information you want to obtain before you conduct your research, you risk launching tests that fail to yield actionable insights.

What am I trying to learn?

Remember: you don’t need to uncover every issue or problem in a single, exhaustive study. While it’s tempting to try to cover as many challenges as possible, running a series of smaller studies with one specific objective for each is much easier and more productive. You’re more likely to get focused feedback that guides you toward a solution or supports a decision or change.

As you set your objective, think about the outcomes, results, or KPIs that most affect your business. This keeps your research focused on insights aligned with the strategies and activities that drive positive business outcomes.

For example, if you notice that customers who visit a certain page of your website don't typically convert, you can test that page to uncover what is driving that KPI.

Be sure your objective is clear and simple. The more complex an objective is, the harder it is to test, analyze, and achieve.

Example of a complex objective

Can users easily find our products and make an informed purchase decision?

This objective contains three very different components, each of which requires its own test plan and approach:

- Finding a product

- Getting informed

- Making a purchase

Example of a better objective

Can users find the information they need?

This objective is singular in nature and boils down to a yes or no answer. By zeroing in on a clear, definable objective, your team will be able to uncover any opportunities quickly and easily.

Pro tip: Remember to ask open-ended questions to understand customers’ perceptions and how those opinions influence their choices and behaviors.

Check out our blog post on how to write a research objective to learn more.

Identify what to test

Next, you need to identify the experience you'd like to get feedback on. Depending on your insights platform, you can probably test a wide range of digital experiences, including live properties such as websites and apps, unreleased products, low-fidelity prototypes, and websites or apps still in development.

Before you can move on to creating and launching your test, it's important that you understand the considerations for each type of experience you're interested in getting feedback on.

Each type of experience requires different information before launch, so be sure you've gathered everything you need for each one before launching your test.

To help you prepare, consider these requirements for each type of experience you can test:

When testing live products

Conducting a test on live products typically entails providing a study participant with a URL or the name of the app in a digital marketplace (such as the App Store or Google Play).

Ensure the link or app you're sharing is accessible to the public.

When testing prototypes

Similarly, many prototyping tools, such as InVision, and file hosting solutions like Google Drive or Dropbox let you generate a shareable link to the prototype.

You can share that URL to get feedback on your prototype. Make sure sharing permissions are enabled so that all test participants can view your content.

Because prototypes aren't fully functioning products, be sure to note this in your test instructions—participants can understandably become frustrated if they're expecting something to happen when they click a button, for example.

Even though the prototype may not work as expected, it's still important to understand how users expect it to work, so focus your questions on what they would expect to happen when they click or tap a button.

When testing real-world experiences

Testing experiences wherever they happen in your customers' lives is a great way to get a more natural, unbiased understanding of how your customers will interact with your products in real life.

Anything your customers interact with can be tested if your participant has a camera on their mobile phone. This includes everything from AR/VR experiences and in-home testing of voice devices, streaming media services, and IoT products to experiences in brick-and-mortar stores, at airport check-in, and more.

When testing in-person experiences, there are a few considerations to remember.

- Participants will be using their mobile cameras in public or private spaces; be sure to instruct them to focus their cameras only on what applies to the test.

- Be sure your instructions for the test are simple and clear. This is true for any type of test, but especially when participants are on the go.

Try out our test templates for real-world experiences.

Choose your target audience

In many cases, it’s helpful to have feedback from a wide range of participants, spanning age, gender, and income level. This helps you understand how a wider audience perceives your products—whether products are easy to use and relevant to many or whether they present challenges to some groups.

Or perhaps you have a very specific customer profile or persona in mind and want to ensure that you get feedback from people who meet a list of criteria.

Here's what you can expect from the various test participant groups you can work with.

Use a panel of test participants

One of the best ways to ensure a diverse audience is to recruit from a panel of participants who have opted in to share their feedback on experiences. Depending on the human insight solution you use, you may have access to test participants locally or from around the world.

Many insight solutions enable you to choose from a range of demographics, geographies, device preferences, and more.

If you want to target a specific persona, you can adjust the demographics and write screener questions when setting up your test. You can ask screener questions related to the participants' psychographics, such as hobbies, attitudes, behaviors, professions, and titles, or anything else that will help you find exactly the participants you need.

The primary benefit of using a panel of participants is that it's usually faster and easier than trying to recruit people on your own. Additionally, the insight platform you use will likely have the infrastructure already established to handle payment and test distribution, so you can focus on getting the feedback you need.

Use a custom group of participants

A custom network of participants will be ideal if you'd like to test with your own users, prospects, partners, and even employees. Custom networks are great if you have a highly specialized or specific group of people you'd like to test with who may not be part of a test panel already.

Learn more about UserTesting Audience solutions

How many participants to test with

There’s some debate out there over how many participants you should include in a user test, and the right answer depends on what you’re trying to learn.

Researching usability problems

You don't need a large sample size for this type of testing. Widely cited research from Nielsen Norman Group suggests that testing with 5 participants uncovers about 85% of a product's usability issues. Run a test with 5 to 7 users, make improvements to your product, test again, and so on. This iterative approach is more efficient and cost-effective than running one large test.
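The 85% figure comes from a simple problem-discovery model popularized by Jakob Nielsen and Tom Landauer: if each participant independently uncovers a given problem with probability L, then n participants are expected to uncover 1 − (1 − L)^n of all problems. The Python sketch below assumes L = 0.31, the average detection rate reported in that work. Your product's rate may be higher or lower, and real participants are not perfectly independent, so treat the output as a rule of thumb rather than a guarantee.

```python
# Sketch of the problem-discovery model behind the "5 users" rule of thumb.
# Assumption: every participant independently uncovers any given usability
# problem with the same probability (0.31 is the commonly cited average).

def share_of_problems_found(n_participants: int, detection_rate: float = 0.31) -> float:
    """Expected fraction of usability problems found by n participants."""
    if not 0.0 <= detection_rate <= 1.0:
        raise ValueError("detection_rate must be between 0 and 1")
    if n_participants < 0:
        raise ValueError("n_participants must be non-negative")
    # Probability a problem is missed by everyone is (1 - L)^n.
    return 1.0 - (1.0 - detection_rate) ** n_participants


if __name__ == "__main__":
    for n in range(1, 8):
        print(f"{n} participants: {share_of_problems_found(n):.0%}")
```

Under this assumption, five participants find roughly 84% of problems, close to the 85% figure, and each additional participant adds less than the one before. That diminishing return is why several small rounds of testing tend to be a better use of budget than one large round.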

Uncovering trends and opinions

If you’re trying to discover trends amongst users, then you’ll need a larger sample of participants. This will help you establish quantitative findings so you can present a more convincing case to your stakeholders. If you’ve segmented your customers by various characteristics, like persona, company size, income, or geographical location, you can start by recruiting participants based on those qualifications. Once you’ve established your segments, run your test with at least 5-7 participants for each segment.

How closely should your test participants match your buyer personas?

When you're narrowing down your participants, there are typically two schools of thought: cast a wide net and test with a large, general demographic, or define precise requirements and seek out a very specific type of user. Naturally, there are situations when one will be more appropriate to your research needs than the other.

General demographic

The general consensus amongst many UX practitioners is to avoid getting too granular when choosing participants. Most products should be so clear and easy to use that just about anyone could use them.

With a general demographic, that’s precisely the audience you’ll capture. Plus, you’ll find participants quickly. If you’d like to use a general demographic, make sure your research fits these characteristics:

- The product you’re testing is meant for a wide variety of customers

- Anyone can use it with minimal instruction or prior knowledge

- The product can be tested with each participant in 15 minutes or less

Niche products and customer opinions

However, the challenge with a wide demographic is that those participants might not match your ideal customer. So, while you’ll still get valuable feedback, you might not hear from your target audience.

Here are a few situations when a narrower demographic may be best:

- You have a niche product or audience

- Your product or what you’d like to research is complicated and requires a certain level of prior knowledge or expertise

- You’re comparing your product with direct competitors

Once you've narrowed down your target audience, you can move on to drafting your test plan.

Moderated vs. unmoderated testing: what's the difference?

Before you can begin setting up your tests, you'll need to decide whether to conduct moderated (i.e., face-to-face, either in person or via videoconference) or unmoderated (self-guided, typically remote) tests. You can test nearly every experience using either approach, but it's important to understand how the two strategies differ.

Moderated usability testing

With moderated usability testing, a real person will be there to help facilitate (i.e., moderate) the test. The moderator will work directly with the test participant, guiding them through the test and answering questions if the participant encounters any challenges while completing their tasks.

What’s great about moderated testing is that it can be conducted either remotely or in person. You can ask (or answer) questions in real time, and you can open the space for dynamic discussions.

Unmoderated usability testing

Unmoderated usability testing is just like it sounds. It's not monitored or guided, so no one else is present during the test except the participant. The participant completes tasks and answers questions at their own pace, at a time and location that works best for them.

Unmoderated testing tends to be less time-consuming and more flexible than moderated usability tests, as participants can complete their tests independently without disrupting your daily workflow.

Now that we've established the differences, let's dive into how and when to best apply each strategy based on your research needs.

When to use moderated usability testing

It helps to think of moderated user testing as an interview or a real-time conversation that you’re having with a participant or customer. Everything that you’d do to prepare for that type of interaction is what you’ll need to consider for a moderated study.

At UserTesting, we especially recommend moderated tests for early-stage development use cases, including prototype tests, competitive analysis, usability tests, and discovery interviews.

Pros

Moderated testing works best when you need a high level of interaction between you and your test participant. For example, if you want to study a prototype with limited functionality or a complicated process or concept, moderated testing provides you with the interaction you’d need to guide a participant through the study. It's also an excellent way to conduct interviews, understand the customer journey, and discover pain points.

Moderated user testing is also a great way to observe body language and catch subtle behaviors and responses. It enables you to probe participants for more information if they seem stuck or confused and minimizes the risk of a participant speeding through the tasks or questions.

This type of test allows you to develop a rapport and have a natural conversation with your customers. This helps establish trust and leads to candid feedback that might not be possible with other qualitative research methods.

Moderated user testing is also more time-efficient than traditional interviews or focus groups, which may require weeks for recruiting and scheduling and months to receive and compile results. That makes it a good fit if you don't have a lot of time to spare.

Cons

Because of the additional time and resources, moderated testing does cost more than unmoderated testing. However, you can reduce some of that cost by conducting remote moderated studies, rather than in-person. This allows you to connect with participants all around the world and reduces the need to block out dedicated time and space for onsite interviews. Who doesn’t love getting some hours back in their day?

When to use unmoderated usability testing

Unmoderated testing is best for validating concepts and designs quickly with a diverse group of participants. This type of testing works great if you have specific questions that you need answered, need a large sample size, require feedback quickly, or want to observe a participant interacting in their natural environment.

Pros

The beauty of remote unmoderated usability testing is that it can be done anytime, anywhere, and you typically have actionable feedback within a day if not sooner.

Because a moderator isn’t needed, the cost is typically much lower than moderated tests, enabling you to run more tests with a wider variety of participants.

Additionally, if you conduct a test and later (understandably) decide that you need to rework the tasks or questions, sending out a new round of remote unmoderated tests would be more cost- and time-friendly than if you were to redo moderated usability tests. If you prioritize flexibility, unmoderated usability tests may be the way to go.

Cons

Since unmoderated tests are completely unsupervised, they require a fixed set of questions and tasks for participants to complete independently. If participants run into issues, spend less time than requested, face technical difficulties, or don't understand the tasks or questions, you unfortunately won't be able to step in and guide them. For this reason, we encourage customers to over-communicate when writing tests, avoid making assumptions, and ask participants to verbalize their expectations if they experience any technical issues.

At the end of the day, the choice of which test is best is up to you and your team, but now you’re better equipped to make an informed decision.

Build your test plan

If you’re creating a recorded test, start assembling your test by creating a test plan. Your test plan is the list of instructions your participants will follow, the tasks they’ll complete, and the questions they’ll answer during the test.

Depending on your insights solution, there are many different types of tasks and questions you can include in a test, including:

- Tasks

- Five-second test

- Verbal response

- Written response

- Multiple choice

- Rating scale

- Card sort

- Tree test

How to use tasks

Tasks are the actions that you want the study participant to complete. They complete one task before moving on to the next, so keep the ordering of tasks in mind as it will guide the study participant from start to finish. As they work through completing each task, they’ll be sharing their thoughts out loud, which will be captured on video and saved to your dashboard when the session is finished.

When you’re creating tasks for your study, focus on the insights you want to gather about the specific objective you determined. If you have a lot of areas you want to test, we recommend breaking these up into different studies. In most cases, it’s best to keep your study around 15 minutes in length, so keep this in mind as you plan your tasks.

Consider using both broad and specific tasks in your test

Broad, open-ended tasks give your test participants minimal explanation about how to perform the activity. They’re meant to help you learn how your users think and are useful when you’re seeking insight on branding, content, layouts, or any “intangibles” of the user experience. Broad tasks are helpful for observing natural user behavior—what people would do when given the choice and the freedom—which can yield valuable insights on usability, content, aesthetics, and users’ sentimental responses.

Example of a broad task: Find a hotel that you’d like to stay in for a vacation to Chicago next month. Share your thoughts out loud as you go.

Keep in mind that responses may vary significantly from one test participant to the next. Be prepared to get diverse feedback when using broad tasks.

Specific tasks are defined activities that participants must follow. They provide the participant with clear guidance on what actions to take and what features to speak about. Participants focus on a specific action, webpage, or app and talk precisely about their perceptions of this experience.

One thing to keep in mind: specific tasks are most appropriate to situations in which you have identified a problem area or a defined place where you desire feedback.

Example of a specific task: On this page, select an item that you would want to buy. Share how you went about making this decision and selecting the item.

Pro tip: When you’re not sure where to focus your test, try this tip. Run a very open-ended test using broad tasks like “Explore this website/app as you naturally would for 10 minutes, speaking your thoughts out loud.” You’re sure to uncover new areas to study in a more targeted follow-up test.

Five-second test

The five-second test shows the page at the URL in the Starting URL field to your participants for only five seconds. Afterward, we'll ask them three questions to recall their impressions and understanding of the page:

- What do you remember?

- What can you do on this site?

- Who's this site for?

The five-second test will always be the first task, and the three questions cannot be changed. Once the participant has answered the three questions, they'll see the Starting URL again.

- The five-second test is only available for desktop website tests that use the Chrome browser.

- If you are using the Invite Network, running a mobile or prototype test, or asking users to use a browser other than Chrome, do not include the five-second test.

- The test Preview feature also will not reflect the five-second test, although you can still include it in your test.

Verbal response

Verbal response questions prompt test participants to provide a spoken answer. Because each answer is recorded and tied to the point in the session where the participant gives it, you can skip directly to it in the video. They make great clips for your highlight reel!

Written response

Written response tasks are excellent for getting test participants to describe the experience in their own words. This can make analyzing results easier; however, written responses can be more cumbersome for participants in mobile tests.

Multiple choice

Multiple choice questions are a type of survey-style question. They can help you collect fast feedback by allowing you to measure participants’ overall opinions and preferences and view those results across all participants in your test.

5 do's and don'ts for multiple choice questions

1. Do: provide clear and distinct answers

Make sure your answers are mutually exclusive, and each answer can stand alone. Otherwise, it will confuse participants and compromise the value of the feedback.

Note: Don't use special characters like asterisks, dashes, or periods in your multiple-choice answer options. Doing so can cause issues, including the answer options not displaying correctly or the participant being unable to move on to the next task.

2. Do: provide a “None of the above,” “I don’t know” or “Other” option

This will prevent your data from being skewed by providing an “out” in case none of the other answers apply to the participant or if the participant is confused.

3. Don't: ask leading questions or yes/no questions

When participants can easily predict which answer you want from them, they’ll be more likely to choose that answer, even if it isn’t accurate.

4. Do: ask participants to “Please explain your answer”

Although most participants realize that this is implied, it never hurts to include this small prompt for participants to articulate the thinking behind their choice.

This is especially important when you are inviting your own participants via a direct link to the test, because those individuals will be more familiar with completing surveys silently than with thinking out loud. Plus, it makes for some excellent sound bites that can be passed along to your team.

5. Do: decide whether participants can select more than one answer

In some scenarios, it might make sense to let participants choose multiple answers that apply to them. You'll see the option to do this when you're creating the task.
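Once responses are in, each multiple-choice question is typically summarized by the share of participants who chose each option. The Python sketch below assumes a hypothetical export format (one list of selected options per participant); your platform's export will look different. For multi-select questions it divides by the number of participants rather than the number of selections, so the shares can add up to more than 100%.

```python
from collections import Counter


def tally_choices(responses, options):
    """Return {option: share of participants who selected it}.

    responses: one list of selected options per participant (hypothetical
    export format). For single-select questions, each list has one item.
    options: the answer options shown in the question, in display order.
    """
    counts = Counter()
    for selected in responses:
        # set() so a participant is never counted twice for the same option
        counts.update(set(selected))
    total = len(responses)
    return {opt: (counts[opt] / total if total else 0.0) for opt in options}


if __name__ == "__main__":
    options = ["Price", "Reviews", "Shipping speed", "None of the above"]
    responses = [["Price", "Reviews"], ["Reviews"], ["None of the above"], ["Price"]]
    for option, share in tally_choices(responses, options).items():
        print(f"{option}: {share:.0%}")
```

Reporting shares of participants keeps single-select and multi-select questions comparable, and keeping options with zero selections in the output makes it obvious when an answer (or a "None of the above" escape hatch) went unused.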

Rating scale

Rating scale tasks are a great way to extract data quickly during your test. Choose from a variety of rating scales, or create your own and customize the endpoints.

When asking participants to rate an experience or align their feedback with a value, you want to ensure that your rating scale offers the right granularity in response options. This ensures that participants can select the option that best matches their opinion, resulting in more accurate feedback for you.

Card sort

Card sorting is a qualitative research method used to group, label, and describe information more effectively, based on feedback from customers or users.

Usually, card sorting is used when designing (or redesigning) the navigation of a website or the organization of content within it. And that’s because it helps to evaluate information architecture—or the grouping of categories of content, the hierarchy of those categories, and the labels used to describe them.

Card sorting is useful if you’re trying to:

- Increase the findability of content on your website

- Discover how people understand different concepts or ideas

- Find the right words to form your navigation

- Adapt to customer needs and surface the most important information

Card sorting requires you to create a set of cards—sometimes literally—to represent a concept or item. These cards will then be grouped or categorized by your users in ways that make the most sense to them. For best results, you’ll need to decide if it makes sense to run an open, closed, or hybrid card sort. There’s a case for each:

- Open card sort: participants sort cards into categories that make sense to them and label each category themselves

- Closed card sort: participants sort cards into categories you give them

- Hybrid card sort: participants sort cards into categories you give them but may also create their own categories if they choose to

Which one you choose will depend on what you want to find out and what you’re already working with.
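A common way to analyze card sort results is a similarity (co-occurrence) matrix: for each pair of cards, the share of participants who placed them in the same group. Pairs that most participants group together are strong candidates to sit under the same navigation category. The Python sketch below assumes each participant's sort is recorded as a mapping from a group label to the cards in it; the card names are hypothetical.

```python
from collections import Counter
from itertools import combinations


def pair_similarity(sorts):
    """Share of participants who put each pair of cards in the same group.

    sorts: one dict per participant, mapping a group label to a list of cards.
    Group labels are ignored, so this works for open, closed, and hybrid sorts.
    """
    together = Counter()
    for groups in sorts:
        for cards in groups.values():
            # sorted() so ("A", "B") and ("B", "A") count as the same pair
            for pair in combinations(sorted(set(cards)), 2):
                together[pair] += 1
    n = len(sorts)
    return {pair: count / n for pair, count in together.items()}


if __name__ == "__main__":
    sorts = [
        {"Help": ["FAQ", "Contact us"], "Shop": ["Deals", "New arrivals"]},
        {"Support": ["FAQ", "Contact us", "Returns"], "Browse": ["Deals", "New arrivals"]},
        {"Info": ["FAQ", "Returns"], "Products": ["Deals", "New arrivals", "Contact us"]},
    ]
    ranked = sorted(pair_similarity(sorts).items(), key=lambda kv: -kv[1])
    for (a, b), share in ranked:
        print(f"{a} + {b}: {share:.0%}")
```

For an open or hybrid sort, the participants' own group labels are worth reviewing separately, since they suggest candidate wording for your navigation.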

Tree testing

Tree testing is a research method used to help you understand where people get lost finding content or information on your website. Organizing content is foundational to building effective digital properties—regardless of your business objectives. However, many teams don’t have easy access to the tools they need to test their information architecture.

Through tree testing, you’ll be able to:

- Optimize your navigation for the most critical tasks, whether that’s to improve engagement or conversions

- Test alternate labels for the same tree category by setting up two tree tests and comparing results (useful to get insights on findability based on label names)

- Test alternate locations for the same category by setting up two tree tests and comparing results (useful when adding new features within an existing site, i.e., where does this fit in?)

- Recreate a competitor's navigation to compare solutions and get insights into customer preferences
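When comparing two tree tests, for example alternate labels for the same category, two simple per-task metrics are the success rate (participants who ended at a correct node) and the direct success rate (participants who succeeded without backtracking). The Python sketch below uses a hypothetical record format and treats revisiting any node as backtracking; tree-testing tools may define and report these metrics differently.

```python
from dataclasses import dataclass


@dataclass
class Attempt:
    # Hypothetical record of one participant's attempt at one tree-test task.
    path: list          # nodes visited in order, e.g. ["Home", "Support", "Returns"]
    correct_nodes: set  # node(s) counted as a correct answer for the task

    @property
    def succeeded(self) -> bool:
        return bool(self.path) and self.path[-1] in self.correct_nodes

    @property
    def direct(self) -> bool:
        # Treat revisiting any node as backtracking (one common definition).
        return len(self.path) == len(set(self.path))


def summarize(attempts):
    """Success rate and direct success rate for one task in one tree variant."""
    n = len(attempts)
    if n == 0:
        return {"success_rate": 0.0, "direct_success_rate": 0.0}
    successes = [a for a in attempts if a.succeeded]
    return {
        "success_rate": len(successes) / n,
        "direct_success_rate": sum(a.direct for a in successes) / n,
    }


if __name__ == "__main__":
    target = {"Returns"}
    variant_a = [  # tree where returns live under "Support"
        Attempt(["Home", "Support", "Returns"], target),
        Attempt(["Home", "Shop", "Home", "Support", "Returns"], target),
        Attempt(["Home", "Shop", "Orders"], target),
    ]
    variant_b = [  # tree where returns live under "Help & returns"
        Attempt(["Home", "Help & returns", "Returns"], target),
        Attempt(["Home", "Help & returns", "Returns"], target),
        Attempt(["Home", "Shop", "Home", "Help & returns", "Returns"], target),
    ]
    print("A:", summarize(variant_a))
    print("B:", summarize(variant_b))
```

Looking at both numbers together helps: a label can have a high overall success rate while still sending many participants down the wrong branch first, which shows up as a gap between the two rates.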

Test writing best practices

The structure of your study is important. We recommend starting with broad tasks (such as exploring the home page or using search) and moving in a logical flow toward specific tasks (such as putting items in the online shopping cart in preparation for a purchase). The more natural the flow, the more realistic the study will be, putting your participant at ease and enabling better, more authentic feedback.

Example of poor logical flow:

- Create an account

- Find an item

- Check out

- Search the site

- Evaluate the global navigation options

Example of good logical flow:

- Evaluate the global navigation options

- Search the site

- Find an item

- Create an account

- Check out

6 tips for gathering actionable responses

How you lay out your test plan should align with the objective you originally set for your study. If you're interested in discovering the participants' natural journey or acquiring a better understanding of their motives and rationale as they navigate your products, then give them the freedom to use the product in their own way.

However, if you’re more focused on understanding participant attitudes and behaviors in a well-defined context, then be specific in the tasks you assign and the questions you ask.

Additionally, starting broad and then moving to specific tasks is important if you suspect that a specific task will require the participant to do something complicated or with a high risk of failure.

Putting these types of tasks near the end of the study prevents the test participant from getting stuck or being thrown off track in the beginning, which could negatively impact the remainder of your study.

Once you’ve mapped out a sequence of tasks in your test plan, start writing the questions. It’s critical to structure questions accurately so you get reliable answers and gain the insights that will support your recommendations and business decisions.

1. Use clear, everyday language

You want to provide a seamless experience for participants completing your study. Avoid industry jargon or internal phrases that participants might not know. Terms like “sub-navigation” and “affordances” probably won’t resonate with the average user, so don’t include them in your questions unless you’re certain your actual target customers use those words.

2. Include the time frame

If you’re asking about some sort of frequency, such as how often a user visits a particular site, make sure you define the timeline clearly at the start of the question. This ensures consistency and accuracy in the responses that you receive.

- Example of time frame in question: In the past six months, how often did you visit Amazon.com?

3. Frame questions for more standardized responses

Gathering opinion and preference data can be tricky. To ensure that you collect actionable insights that support changes and decisions (instead of a range of diverging opinions), it is critical that you standardize the experience so that every participant answers the same question in the same context and provides helpful feedback.

4. Ensure rating scales are balanced

Be fair, realistic, and consistent with the two ends of a rating spectrum. If you’re asking participants to select the more accurate response from two options, ensure that they’re weighing each end of the spectrum evenly.

- Example of an unbalanced scale: After going through the checkout process, to what extent do you trust or distrust this company? I distrust it slightly ←→ I trust it with my life

- Example of a balanced scale: After going through the checkout process, to what extent do you trust or distrust this company? I strongly distrust this company ←→ I strongly trust this company

5. Separate questions to dig into vague or conceptual ideas

Some concepts are complex and can mean different things to different people. For example, satisfaction is a complex concept, and people may evaluate different things when concluding whether or not they’re satisfied with a product or experience.

To ensure that your study yields actionable feedback, break up complex concepts into separate questions. Then, consider all of the responses in aggregate when analyzing the results. You can even create a composite “satisfaction” rating based on the results from the smaller pieces.

Example of a question on a complex concept:

- On a scale of 1 to 5, with 1 being “Very satisfied” and 5 being “Very dissatisfied,” how would you rate your satisfaction with this children’s online learning portal?

Example of breaking up questions on a complex concept:

- On a scale of 1 to 5, with 1 being “Very easy to use” and 5 being “Very difficult to use,” how would you rate the ease of use of this children’s online learning portal?

- On a scale of 1 to 5, with 1 being “Very appealing” and 5 being “Very unappealing,” how would you rate the visual design of this children’s online learning portal?
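The separate ratings can then be combined into the composite "satisfaction" score mentioned above. The Python sketch below assumes every sub-question uses the 1-to-5 scale shown, with 1 as the positive end, and takes a simple unweighted mean. It flips the scale so that higher means better, which is usually easier to present; the field names are hypothetical, and weighting the sub-questions differently is a choice for your team.

```python
from statistics import mean

SCALE_MIN, SCALE_MAX = 1, 5  # in these questions, 1 is the most positive answer


def to_positive_scale(rating: int) -> int:
    """Flip a 1-5 rating where 1 is best so that 5 is best."""
    if not SCALE_MIN <= rating <= SCALE_MAX:
        raise ValueError(f"rating {rating} is outside {SCALE_MIN}-{SCALE_MAX}")
    return SCALE_MIN + SCALE_MAX - rating


def composite_satisfaction(responses):
    """Average each participant's flipped sub-ratings, then average across participants.

    responses: one dict per participant, e.g.
    {"ease_of_use": 2, "visual_design": 1} (hypothetical field names).
    """
    per_participant = [
        mean(to_positive_scale(r) for r in answers.values()) for answers in responses
    ]
    return mean(per_participant)


if __name__ == "__main__":
    responses = [
        {"ease_of_use": 2, "visual_design": 1},
        {"ease_of_use": 4, "visual_design": 3},
    ]
    print(f"Composite satisfaction: {composite_satisfaction(responses):.2f} / 5")
```

Keeping the sub-scores alongside the composite matters: a middling composite can hide a portal that is easy to use but visually unappealing, or the reverse.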

Remember: one of the great benefits of qualitative research is getting to the why of human behavior. For every question, consider why a person might respond a certain way, and add follow-up questions to better understand the source of their opinion.

6. Avoid leading questions

Sometimes, when asking questions, you can inadvertently influence the participant’s response by including hints in the phrasing of the questions themselves. This biases the responses you receive and can skew the results of your study. Be sure that your questions are neutral, unbiased, and free of assumptions.

Example of a leading question:

- How much better is the new version than the original home page?

Example of a better question:

- Compare the new version of the home page to the original. Which do you prefer, and why?

Once you have your test written, there's one more crucial step before you launch it to participants!

Launch a dry run test

Before you launch your test to all participants, we recommend conducting a dry run (sometimes called a pilot study) with just one or two participants. This helps you catch flaws or confusing instructions in your original test plan, and it gives you the opportunity to adjust and improve the plan before launching it fully.

Here’s a good structure to do this:

- When creating your test, select one or two participants and launch your test just with them.

- Review your results and observe how participants responded to each task and question. Take note of any trouble they encounter while completing the tasks and questions, and of any responses that fail to provide the type, level, or quality of insight you expected.

- After reviewing the results from your dry run sessions, make any necessary adjustments to your test. You can conduct another dry run test to confirm you've made all the necessary changes or proceed with launching your test with your entire audience.

Analyze your results

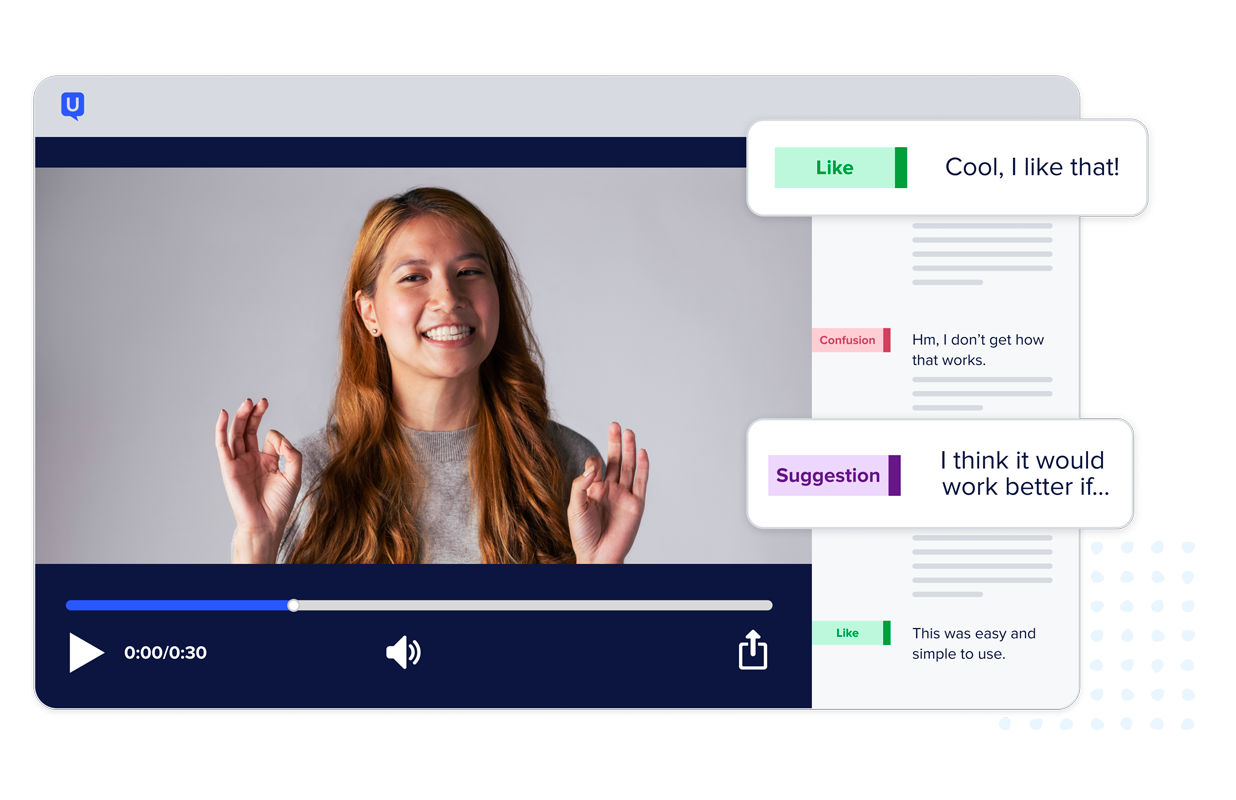

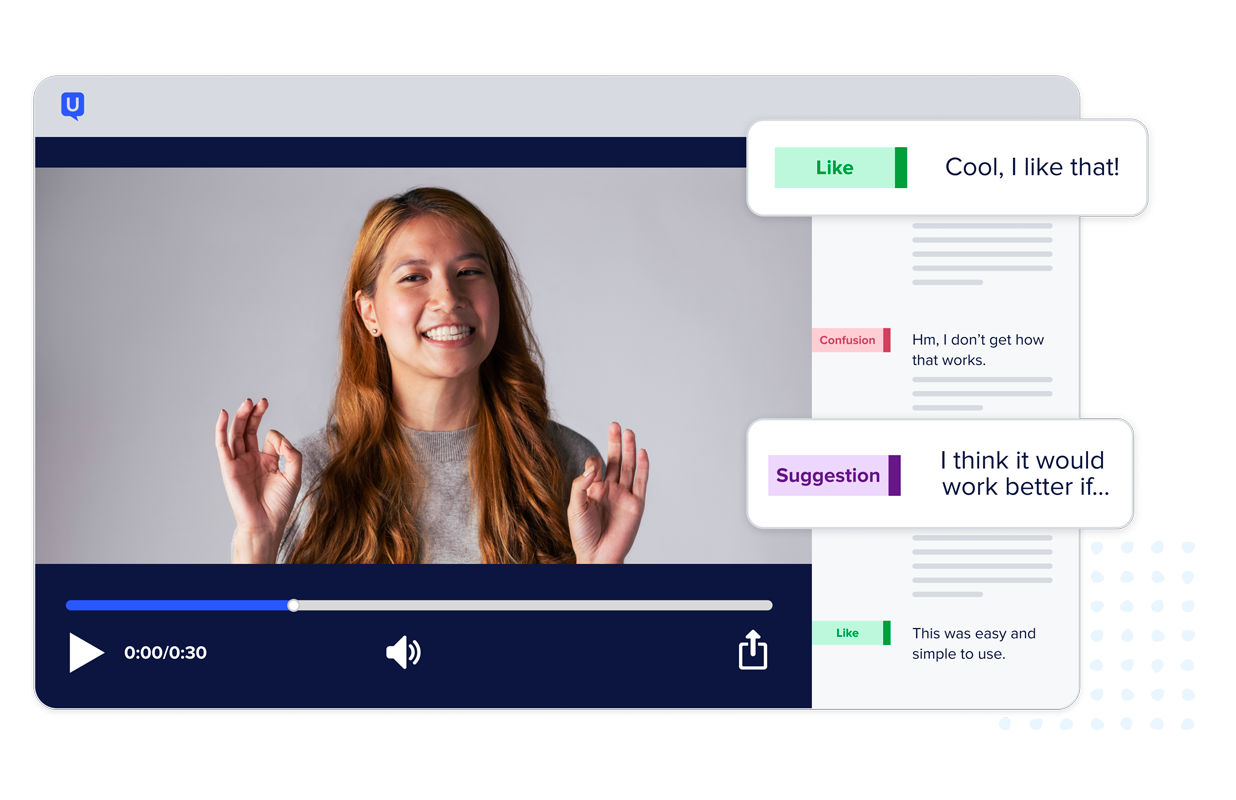

Once your tests are completed, you'll have the opportunity to analyze your results. Depending on your insights platform, you may receive video feedback that contains transcripts, clips, automated metrics, and sentiment analysis to help you surface insights faster.

However you review your results, watch for similar responses, themes, and any major deviations. If a significant number of your study participants provide similar feedback, this could signal an issue impacting your larger customer base.

Or, if a small number of participants share a unique piece of feedback, you can home in on those particular videos to better understand why those participants had such a different experience.
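If you tag each session with the themes you notice while reviewing it, a simple tally can separate widely shared feedback from one-off reactions and point you to the videos worth rewatching. The sketch below assumes themes are recorded by hand as short labels per participant; the sample data, the 50% threshold for “shared,” and the function names are all hypothetical and should be adjusted to your study size.

```python
from collections import Counter

# Hypothetical theme tags, recorded manually while reviewing each session video.
# Sets ensure each theme is counted at most once per participant.
session_themes = {
    "participant_1": {"confusing_checkout", "likes_search"},
    "participant_2": {"confusing_checkout"},
    "participant_3": {"confusing_checkout", "slow_load"},
    "participant_4": {"likes_search"},
}


def classify_themes(themes_by_participant, shared_threshold=0.5):
    """Split themes into widely shared ones and ones mentioned by a single participant."""
    total = len(themes_by_participant)
    counts = Counter(t for themes in themes_by_participant.values() for t in themes)
    shared = sorted(t for t, n in counts.items() if n / total >= shared_threshold)
    unique = sorted(t for t, n in counts.items() if n == 1)
    return shared, unique


def participants_with(theme, themes_by_participant):
    """List the participants whose sessions mention a theme, i.e. which videos to rewatch."""
    return sorted(p for p, themes in themes_by_participant.items() if theme in themes)


shared, unique = classify_themes(session_themes)
print(shared)  # ['confusing_checkout', 'likes_search']
print(unique)  # ['slow_load']
print(participants_with("slow_load", session_themes))  # ['participant_3']
```

Note that the tally includes positive themes such as `likes_search`, which helps you record what participants enjoy as well as what frustrates them.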

Take note of user frustrations in addition to things that users find particularly helpful or exciting. By knowing what people love about your product experience, you avoid the possibility of “fixing” something that’s not even broken. Hearing both what customers struggle with and what they enjoy supports informed discussions about future product and experience improvements.

Share your findings

Sharing the insights you've gathered through testing is one of the most powerful ways to ensure your entire team is keeping your customer at the center of everything they do.

After you’ve uncovered your findings, be sure to share key insights with your team and stakeholders. This will not only help promote a customer-centric culture but also serve as the basis for important business decisions.

Here are a few ways you can easily share insights with your team:

- Create a highlight reel that aggregates critical video clips: The curation of this feedback can be especially helpful when you have multiple participants with the same opinion.

- Host an insights party: Invite your team, stakeholders, and even executives for a team meeting and share key video clips, quotes, and qualitative data from your tests.

- Share quick insights via email or instant messenger: Even a short, 10-second clip of a user struggling to use a feature—or loving it—can have a huge impact on your team. Whenever you get compelling feedback from a test, don't hesitate to share it internally via instant messenger or email.

- Centralize your insights: With solutions like UserTesting's Insights Hub, you can get a holistic view of your customer insights by centralizing feedback and enabling companywide access to user feedback.

After you've shared your insights, it's up to each team to incorporate that customer feedback into the experiences they create and to keep testing and retesting to stay connected with your customers.

Champion a customer-centric culture within your organization

We encourage you to gather customer insights throughout your product and campaign development process and across multiple teams and departments.

- Early in your process, this helps you better understand customer pain points and challenges, so you can brainstorm effectively and support product and solution ideation. You can also home in on the right customers and audiences to assess market opportunities and analyze product-market fit.

- During prototyping and early development, you can uncover usability challenges in your prototypes or early-stage products and course-correct before you’ve spent much time or many resources on development.

- As you launch new digital experiences, you can monitor how customers are reacting to and interacting with these new products. This yields ideas on how to continue evolving your product.

Getting frequent feedback and insights from your customers is the best way to keep your finger on the pulse of customer challenges and expectations. This helps ensure that you’re making the right decisions and taking the right steps toward ongoing customer loyalty and satisfaction.
