How to test voice UI with UserTesting

The recent proliferation of voice user interfaces (voice UI) in human-computer interaction has added a new layer of complexity to the digital experience.

With the boom in smart appliances, there’s now more pressure to make your entire home "smart" by connecting every common home appliance to the internet. Fridges, TVs, doorbells, and even vanity mirrors are now part of the Internet of Things (IoT). One day, appliances without internet access may well be a rarity.

What does Voice UI mean for UX?

Virtually all smart appliances can be controlled via a mobile device, but some companies want to extend this control to voice as well. Amazon Echo, Google Home, Apple’s Siri, and the other smart assistants currently on the market are all designed to respond to voice commands and to integrate with a variety of third-party smart devices.

This increase in voice-controlled experiences has brought challenges that traditional interfaces do not face. Cultural variation in how people communicate, physical differences like voice volume, and many other variables all affect how voice user interfaces should be designed.

However, the process of understanding and solving the challenges of a voice user interface is no different from the process for traditional interfaces. The answer is to conduct user experience research.

Voice UI Testing Best Practices

1. Select the most effective approach to answer your questions

The capability to run mixed-methods studies with virtual assistants already exists in UserTesting. Examples include:

- Think-out-loud (TOL) studies using the Advanced UX Research project type for desktop or mobile devices when you have testable hypotheses about how people interact with smart assistants.

- Diary studies using the Advanced UX Research project type for mobile devices when you want to observe interaction with smart assistants in a natural environment.

If you want to compare the experience of using smart assistants from different manufacturers, you will be best served creating a think-out-loud navigation task using an Advanced UX Research project and making sure that your participants have their Echo, HomePod, or Google Home device close at hand.

If you're more interested in understanding how people use their smart assistants in a natural environment, you will be best served creating a mobile study using video questions.

2. Give your participants instructions for setting up their workspace before your tasks begin

Create a message similar to the one below when writing tasks for smart assistants:

“For this next task you will be asked to interact with Alexa using the Echo Dot. Here are a few tips for completing this task:

- We will refer to Alexa as 'your Echo'; however, you should initiate and interact with your Alexa as you normally would.

- Make sure the Echo is plugged in and connected to Wi-Fi.

- Make sure to place the Echo within a foot or two of your microphone and set the volume to at least 6 before beginning the task.”

You want to make sure participants are as prepared as possible to give helpful feedback. Some other things that you may want to add into a message like this are:

- Specific trigger words for the device you’re using (e.g. “Say ‘Alexa’ before your voice command”).

- Phrasing for stopping an accidental activation of the smart assistant (e.g. “Say ‘Alexa stop’ to cancel an unintended activation of your assistant”).

3. Do NOT use ‘trigger’ words as part of your Scenario and Task Description

When writing task instructions and creating the scenario, word them so that participants who read your instructions out loud do not accidentally trigger the smart assistant before they intend to.

For example, reading the scenario or the task below aloud won’t trigger the participant’s Echo:

Your Scenario: You have just started getting ready for your day. You want to know what the weather will be like so you can plan your outfit accordingly.

Your Task: Use your Echo to find out what the weather will be like today.
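If you write many tasks, a quick automated check can catch trigger words before a study launches. The following is a minimal sketch in Python, not a UserTesting feature: the `WAKE_WORDS` list and the `find_wake_words` helper are assumptions made for illustration, and you would adjust the list to match the devices in your own study.

```python
import re

# Hypothetical wake-word list for illustration; adjust it for your devices.
WAKE_WORDS = ["alexa", "hey siri", "ok google", "hey google"]


def find_wake_words(text, wake_words=WAKE_WORDS):
    """Return the wake words found in text (whole words, case-insensitive)."""
    found = []
    for phrase in wake_words:
        # Allow any punctuation or spacing between the words of a phrase,
        # so "OK, Google" is matched by "ok google".
        pattern = r"\b" + r"\W+".join(re.escape(w) for w in phrase.split()) + r"\b"
        if re.search(pattern, text, flags=re.IGNORECASE):
            found.append(phrase)
    return found


scenario = (
    "You have just started getting ready for your day. You want to know "
    "what the weather will be like so you can plan your outfit accordingly."
)
task = "Use your Echo to find out what the weather will be like today."

# The scenario and task above contain none of the listed wake words.
print(find_wake_words(scenario + " " + task))

# A task that names the assistant directly is flagged.
print(find_wake_words("Ask Alexa what the weather will be like today."))
```

An empty list means the copy is safe to read aloud with respect to the words you listed; anything else is a phrase to reword.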

4. Take advantage of follow-up questions

When creating tasks, consider that people will vary in how they ask their smart assistant for things, both in syntax and choice of words.

Take advantage of follow-up questionnaires to probe further into the experience participants had using their smart assistant.

5. Mix methods for best all around results

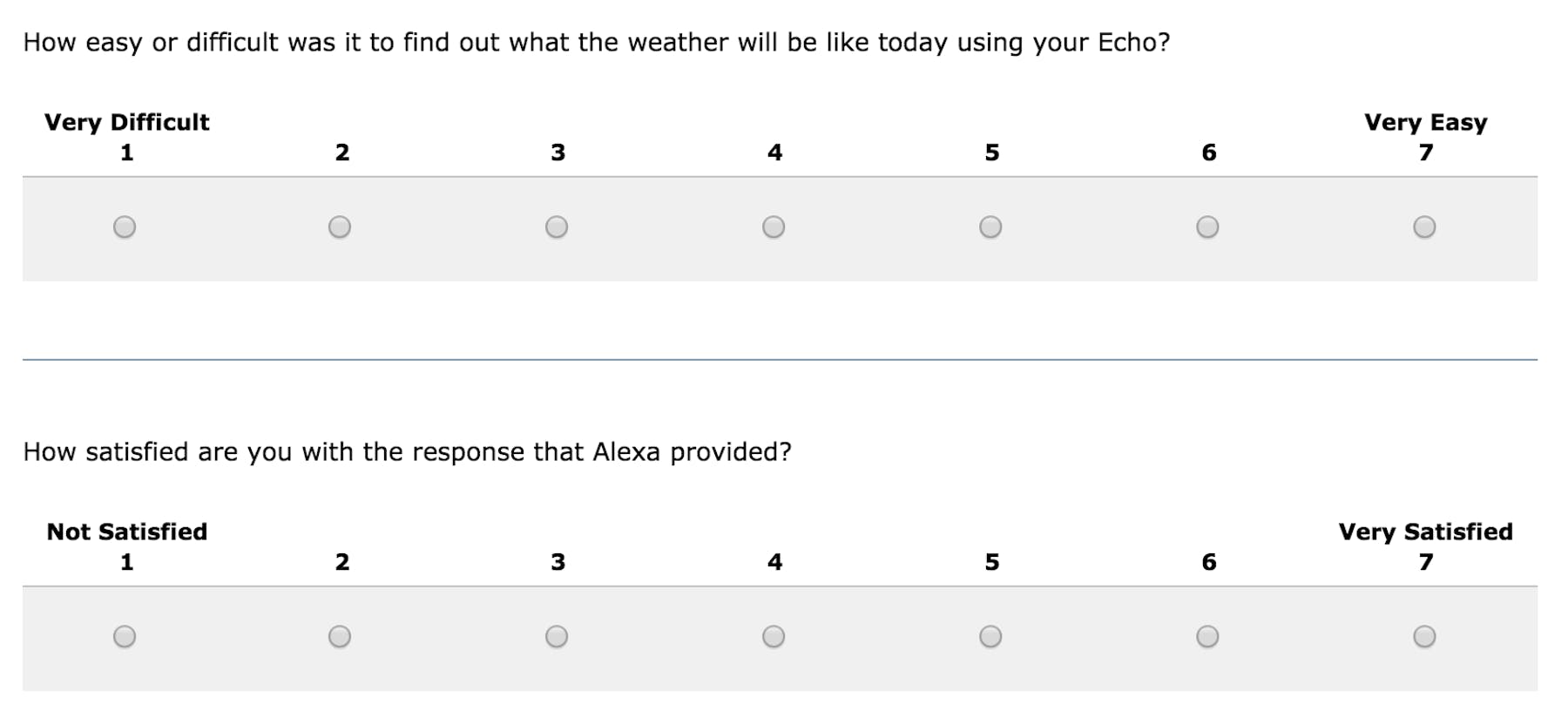

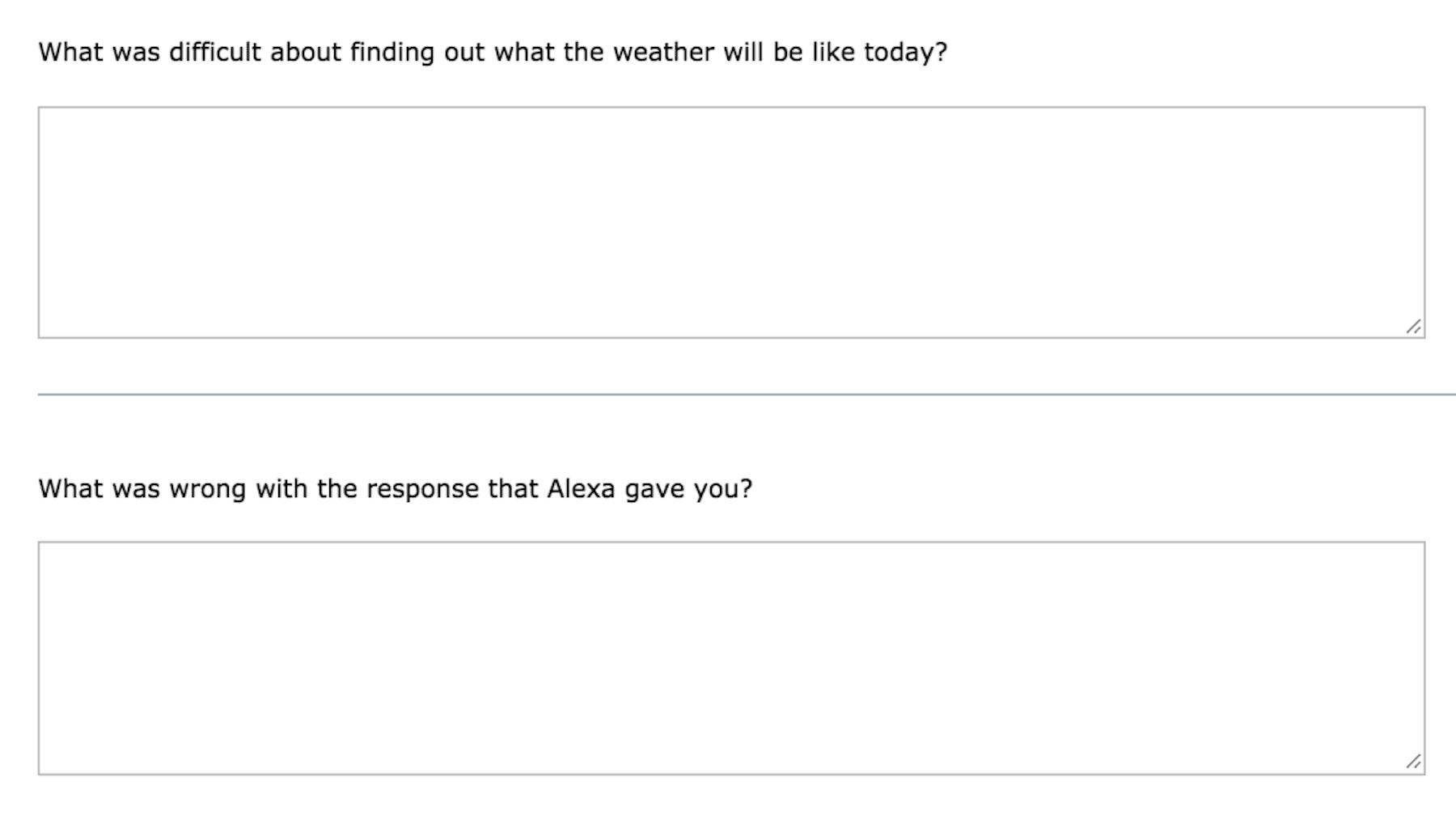

In a sample study using smart assistants, we set open-text questions to appear whenever participants rated ease or satisfaction as 4 or lower, so that we could better understand what exactly contributed to a low rating.

This gives participants a chance to answer a directed question about their thoughts on what could be improved about interacting with a specific smart assistant.
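The conditional logic described above can be sketched in a few lines of Python. This is only an illustration of the rule, not how UserTesting implements it: the function name, the measure names, and the default threshold of 4 (taken from the sample study) are assumptions.

```python
def measures_needing_follow_up(ratings, threshold=4):
    """Return the measures whose rating is at or below the threshold.

    ratings: dict mapping a measure name (e.g. "ease") to a numeric rating.
    threshold: assumed cut-off; the sample study used 4.
    """
    return [measure for measure, rating in ratings.items() if rating <= threshold]


# Hypothetical responses from one participant.
responses = {"ease": 6, "satisfaction": 3}

for measure in measures_needing_follow_up(responses):
    # In a real study this would show an open-text question to the participant.
    print(f"Ask a follow-up about: {measure}")
```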

6. Incorporate tasks specifically for enabling and disabling skills for your study

If testing a "skill" (an app created by third-party developers to integrate with the assistant) on Alexa or Google Home, make sure you do not instruct participants to enable a skill they already use on their device, or to use a skill they have not yet enabled.

Failure to provide logical directions for the use of skills on these devices will cause confusion and lead to a high rate of failure.
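One way to sanity-check a task plan is to walk through it in order and track which skills are enabled at each step. The sketch below assumes a simplified plan format of `(action, skill)` pairs; the action names, the `check_skill_tasks` helper, and the skill name are all hypothetical and exist only for illustration.

```python
def check_skill_tasks(tasks, already_enabled=()):
    """Flag illogical steps in an ordered list of (action, skill) pairs.

    action is one of "enable", "use", or "disable" (assumed names).
    already_enabled lists skills participants have before the study starts.
    """
    enabled = set(already_enabled)
    problems = []
    for step, (action, skill) in enumerate(tasks, start=1):
        if action == "enable":
            if skill in enabled:
                problems.append(f"Step {step}: '{skill}' is already enabled")
            enabled.add(skill)
        elif action == "use":
            if skill not in enabled:
                problems.append(f"Step {step}: '{skill}' is used before it is enabled")
        elif action == "disable":
            enabled.discard(skill)
    return problems


# A plan that asks participants to use a skill before enabling it.
plan = [("use", "Weather Skill"), ("enable", "Weather Skill")]
for problem in check_skill_tasks(plan):
    print(problem)
```

Passing the skills your screener confirmed participants already have as `already_enabled` lets the same check catch instructions to enable something that is already in use.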

7. Revert any changes made to the device during the study

If testing in a laboratory setting, make sure to reverse any settings that were changed on your test device during a session before the next session begins.

If you are asking participants to use their own device for remote testing, include a task with specific instructions on how to disable any skills and revert any settings that were changed for the purpose of your study.

8. How the setup for a participant might look

All a participant in your study needs is a computer to run the UserZoom study and the smart assistant of your choice. No extra materials are necessary to run a desktop moderated study using virtual assistants!

Conclusion

Here’s a sample video of this kind of study from a participant’s perspective. We chose a black screen as the stimulus here, but you can use any kind of hosted image or website.

As voice UIs become more and more ubiquitous (please no smart toilets, we have to draw the line somewhere), UXers, product managers, and anyone else involved in tailoring the experience can and should conduct these kinds of studies. With that in mind, we hope you find this article helpful.