How to Write Great Questions for Your Next User Test

Today's post is an excerpt from our free eBook, The Complete Guide to User Testing Websites, Apps, and Prototypes. Enjoy!

The time is right. Maybe you’re about to launch a new product, or you’re in the early stages of a website redesign. Either way, you know your project would benefit from having real people give you feedback on what works and what doesn’t. It’s time to user test!

But wait! You need to ask the right questions in the right way. You want results you can trust: results you can comfortably base your decisions on.

It’s important to structure questions accurately and strategically so you get the most out of your user tests and gain the insights that will really move your project forward. In this article, you’ll find a series of tips to help you gather a range of data (both factual and subjective) by asking your questions in just the right way.

Pro tip: When writing a question, every word matters. Every. Single. Word.

Carefully consider every word to make sure you’re asking the question that you want to be asking.

Tips for gathering factual responses

Don’t use industry jargon

Terms like “sub-navigation” and “affordances” don’t mean much to the average user, so don’t include them in your questions. If a question depends on a key term or concept, define it in the question itself (unless the goal of your study is to see whether users understand that term or concept).

Define the timeline

If you are asking about frequency, such as how often a user visits a particular site, define the timeline clearly. Always put the timeline at the beginning of the sentence, so respondents know the time frame before they start forming an answer.

Bad: How often do you visit Amazon.com?

Better: How often did you visit Amazon.com in the past six months?

Best: In the past six months, how often did you visit Amazon.com?

Give complicated questions some breathing room

Don’t try to pack all the juicy and complex concepts into one question. Break them up into separate questions!

Ask specific questions to get specific answers

If your respondent can give you the answer, “It depends,” then it’s probably a bad question.

Ask about firsthand experiences

Ask about what people have actually done, not what they will do or would do. It’s not always possible, but try your best to avoid hypotheticals and hearsay.

Example of asking about what someone will do or would do: How often do you think you’ll visit this site in the next six months?

Example of asking about what someone has done: In the past three months, how often have you visited this site?

Example of hearsay: How often do your parents log into Facebook?

Better example: Skip this question and ask the parents directly!

Tips for gathering subjective data

Leave breadcrumbs

The name of the game is to make sure that users are all answering the same question. Yes, the wording of each question may be identical from user to user, but were they all seeing or experiencing the same thing before they were asked the question? The stimulus needs to be standardized.

But wait! How do you do that in an unmoderated, remote user test?

Make like Hansel and Gretel and leave them little breadcrumbs along the way! Remind users where they should be on the site, and provide a URL for them to click so you know they are looking at the right part of the site or app.

Don't make the user feel guilty or dumb

You’re not judging the moral quality or character of your respondents when you analyze their results, so make sure your questions don’t make them feel judged. Place the burden of blame on the website, product, or app instead: it’s not the user’s fault, it’s the site’s.

Bad example: “I was very lost and confused.” (agree/disagree)

Good example: “The site caused me to feel lost and confused.” (agree/disagree)

Make sure your rating scale questions aren't skewed

Be fair, realistic, and consistent with the two ends of a rating spectrum.

Bad example: “After going through the checkout process, to what extent do you trust or distrust this company?” (I distrust it just a tad ←→ I trust it with my life)

Good example: “After going through the checkout process, to what extent do you trust or distrust this company?” (I strongly distrust this company ←→ I strongly trust this company)

Pro tip: Be aware that emotional states are very personal and mean different things to different people.

Being “very confident” may mean something very different to a typically sheepish person than it does to an experienced C-level executive.

Subjective states are relative

“Happy” in one context can mean something very different from “happy” in another. On a rating scale, the meaning of a label depends on the other options listed alongside it.

For instance:

Option 1: Happy / Not Happy (Happy = Opposite of not happy)

Option 2: Happy / Neutral / Unhappy (Happy = The best!)

Option 3: Very Happy / Happy / Unhappy / Very Unhappy (Happy = Just above neutral)

Ask many specific questions, rather than a few all-encompassing questions

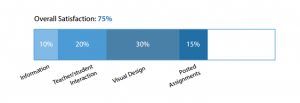

When you ask questions about vague or complex concepts, users often don’t know how to answer them. Break concepts up when you’re asking the questions and put them back together when you’re analyzing the results.

Imagine you want to measure parents’ satisfaction with their children’s school website. Satisfaction is a vague and complex concept.

- Is it the quality of the information?

- Is it the quality of interaction between the teachers and students online?

- Is it the visual design?

- Is it the quality or difficulty of the assignments posted there?

Why not ask about all of those independently?

For more tips on running a super-insightful user test, download our free eBook, The Complete Guide to User Testing Websites, Apps, and Prototypes!