13 experts on quantifying the commercial impact of UX research with A/B tests

I have an inkling that UX and A/B testing should work together more often and more effectively.

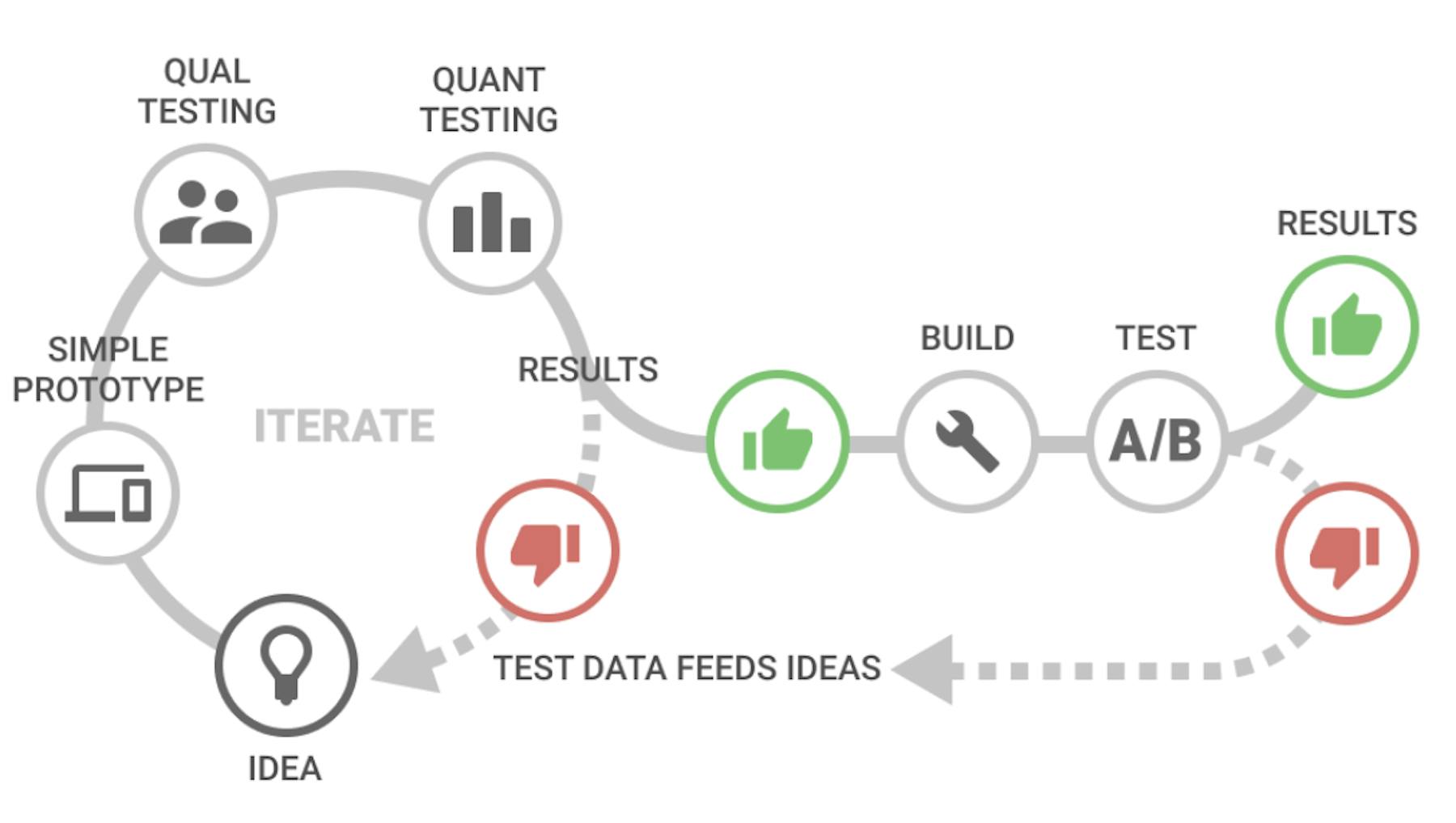

There should be a virtuous circle in which A/B testing and UX research insights feed into each other. UX research can lead to more A/B test wins, and A/B testing could be the link between research and commercial gain that researchers have long been looking for.

I spoke with 13 experimentation experts to find out what they thought about this crazy idea.

"By combining forces with UX research we can strengthen our hypothesis with qualitative insights and this could, in my opinion, increase the win rate of A/B tests. Giving more attention to the voice of the customer, instead of only their behavior."

Florentien Winckers

Experimentation Consultant, Albert Heijn

The cult of A/B testing

Academically, A/B testing is just one of many UX research methods. However, I’ve discovered in my interviews that A/B testing and other UX research methods (usability testing, interviews, click tests, card sorting, tree tests, etc.) are not understood or valued equally by business stakeholders. This will be no surprise to many readers.

A/B tests show whether a change to the experience works in ‘real life’, and win or lose, they reduce the risk of developing and launching a dud feature. BUT some of the more cynical experts I spoke to described how a cult of appreciation has grown up around A/B testing, because the results of these tests often directly affect commercial KPIs and are therefore very easy to communicate to senior stakeholders.

"A/B testing is an invaluable tool for any UX researcher when it comes to communication with stakeholders and management, since it has the advantage of producing very clear, very business-driven numbers. However, if you rely solely on A/B testing, you'll never really do user-centered design, but KPI-centered design."

Max Speicher

User Experience Manager, C&A

In fact, most of the experts I interviewed said that business stakeholders see the purpose of A/B testing as generating revenue through higher conversion rates, basket values, etc. As a result, it’s easy for business stakeholders to treat A/B testing as the holy grail, try to run as many tests as possible and forget that it’s not an unending source of profit.

Experiments cost time, planning and development resources, so every experiment that fails or remains inconclusive represents lost revenue. The fear of bad experiments even leads some ecommerce teams to ban A/B testing on key revenue-generating pages, just in case a bad hypothesis costs the company serious £€$¥.

The cult of UX

UX research, on the other hand, is often perceived by product teams and business stakeholders as slower than A/B testing, less trustworthy and, crucially, less connected with delivering revenue. This perception drives action and investment.

For example, many of the experts I spoke with described how the number of A/B tests they ran per year far outnumbered the number of UX studies. The long planning and execution time of traditional lab testing could explain this, but the massive rise of remote UX research platforms over the past few years (you can build, run and analyze a test in hours) undermines that argument.

This is an example of how senior stakeholders allocate their budgets to where they see demonstrable returns.

There have been many articles about the differences between UX research and A/B testing and the different questions each can answer. Classic examples often focus on doing UX research when A/B testing is not possible, which puts it in second place. Product managers often treat it as an either/or choice, when the ideal blend is to do both.

The utopia is a virtuous circle in which UX research influences A/B test hypotheses and A/B test results fuel further UX research and further valuable A/B tests. Most CRO and UX teams know this and have begun to noodle over it, but their data is disconnected, they don’t talk to each other enough and they have no evidence to support closer alignment.

The importance of a good hypothesis

The experts I interviewed spoke passionately about the importance of a ‘good’ hypothesis in determining the success of a campaign.

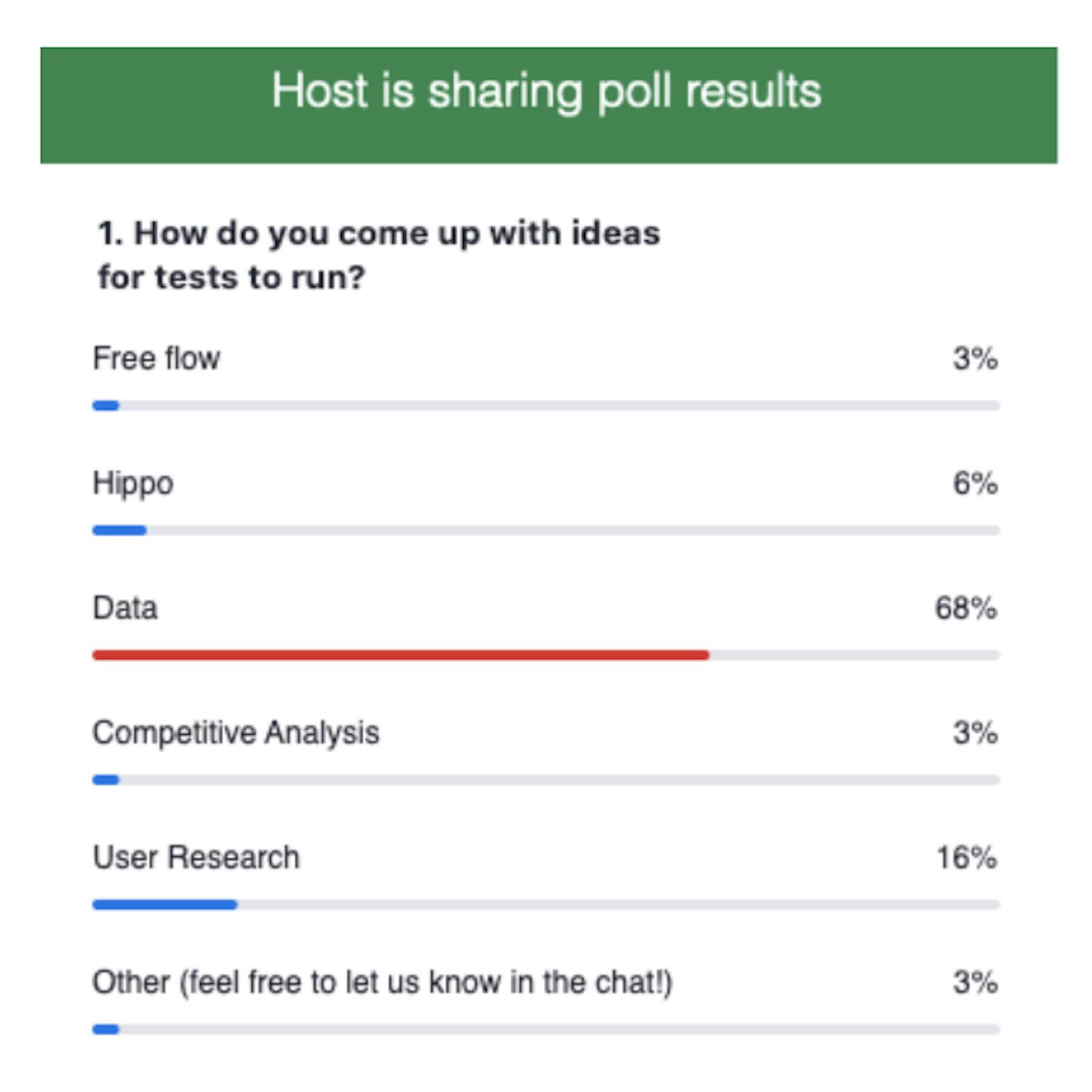

Digital analytics data still tops the chart as the most common source of test ideas, alongside feedback data, previous A/B test results, stakeholder opinions and UX research.

I was surprised by how many experts said that many of the tests they run are still HiPPO-directed (driven by the highest paid person’s opinion). A poll at an online event I attended in June, shown below, backs this up:

Most companies have a big experimentation backlog and limited testing capacity, so good ideas don’t always get tested. This is where the experts stressed the importance of prioritizing A/B tests with a framework such as ICE, PXL or PIE. The most frequently cited was ICE, which stands for:

- Impact: How much of an impact will this have on revenue or the desired KPI?

- Confidence: How sure are we that this idea will work or that we’ll have a conclusive test?

- Effort: How much effort is required to run this test and/or implement the final solution?
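To make ICE concrete, here is a minimal Python sketch of scoring and ranking a backlog. It assumes one common convention, scoring each factor from 1 to 10 and multiplying the three together; because E is defined here as Effort (where a higher score is worse), the sketch converts it to an ease value first. The `TestIdea` class, the scales and the example ideas are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TestIdea:
    """A hypothetical backlog entry; the 1-10 scales are an assumption, not a standard."""
    name: str
    impact: int      # 1-10: expected effect on revenue or the target KPI
    confidence: int  # 1-10: how sure we are it will win or be conclusive
    effort: int      # 1-10: cost to run the test and ship the result (higher = more work)

    def ice_score(self) -> int:
        for value in (self.impact, self.confidence, self.effort):
            if not 1 <= value <= 10:
                raise ValueError("ICE factors are expected on a 1-10 scale")
        # Effort counts against an idea, so flip it onto an "ease" scale (10 -> 1, 1 -> 10)
        # before multiplying the three factors together.
        ease = 11 - self.effort
        return self.impact * self.confidence * ease


def prioritize(backlog: list[TestIdea]) -> list[TestIdea]:
    """Return a new list ordered from highest to lowest ICE score."""
    return sorted(backlog, key=lambda idea: idea.ice_score(), reverse=True)


# Invented example ideas, for illustration only.
backlog = [
    TestIdea("Simplify checkout form", impact=8, confidence=4, effort=6),
    TestIdea("Clarify delivery costs on PDP", impact=6, confidence=8, effort=3),
    TestIdea("Redesign homepage hero", impact=7, confidence=3, effort=8),
]

for idea in prioritize(backlog):
    print(f"{idea.ice_score():>4}  {idea.name}")
```

Multiplying means a low score on any single factor drags the whole idea down; some teams average the three scores instead, which is more forgiving of one weak factor.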

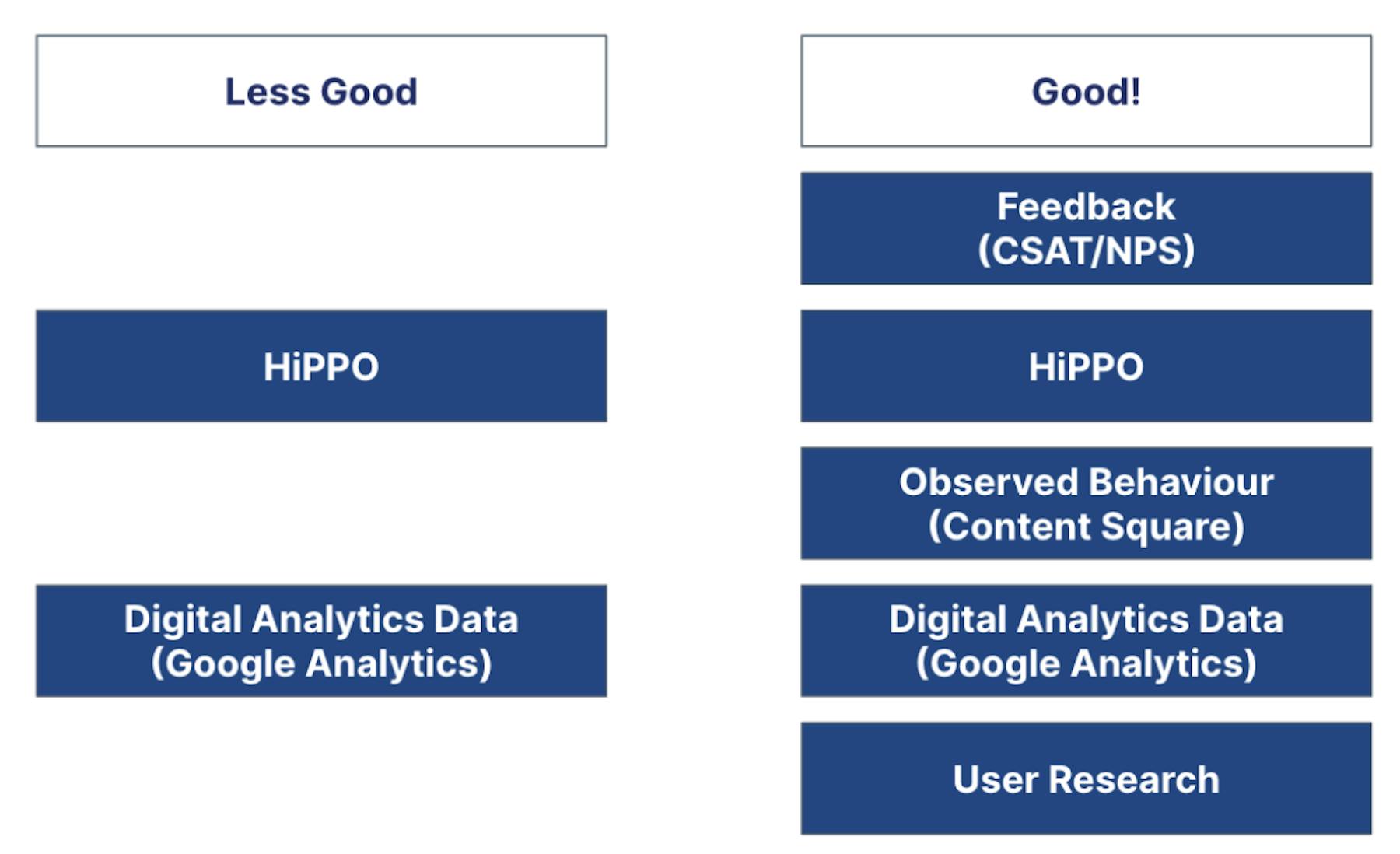

On confidence, the experts I spoke with were clear that an idea backed by multiple sources is preferable, and that different sources carry different degrees of bias and reliability. A simple diagram illustrates the picture they described.

"Stacking (evidence) is something you should always try to do. We always want to have at least one qualitative source and one quantitative source… So the quality of your hypothesis will increase and you’re more confident and less biased."

Mark de Winter

Conversion Optimization Specialist, ClickValue
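The ‘stacking’ rule in the quote can be written down as a simple check that feeds the Confidence factor of ICE. The Python sketch below is one possible encoding, not ClickValue’s method: the source names, their qualitative/quantitative classification, the point weights and the cap of 5 for unstacked hypotheses are all hypothetical choices made for illustration.

```python
from enum import Enum


class Kind(Enum):
    QUALITATIVE = "qualitative"
    QUANTITATIVE = "quantitative"
    OPINION = "opinion"


# Hypothetical catalogue of evidence sources: (kind, points).
# Points stand in for how reliable and unbiased each source is judged to be.
SOURCES = {
    "ux_research": (Kind.QUALITATIVE, 4),
    "customer_feedback": (Kind.QUALITATIVE, 2),
    "web_analytics": (Kind.QUANTITATIVE, 3),
    "previous_ab_test": (Kind.QUANTITATIVE, 4),
    "stakeholder_opinion": (Kind.OPINION, 1),
}


def is_stacked(sources: set[str]) -> bool:
    """True if the evidence includes at least one qualitative and one quantitative source."""
    kinds = {SOURCES[name][0] for name in sources}
    return Kind.QUALITATIVE in kinds and Kind.QUANTITATIVE in kinds


def confidence_score(sources: set[str]) -> int:
    """Turn a hypothesis's evidence into a 1-10 Confidence score (hypothetical rule)."""
    unknown = sources - set(SOURCES)
    if unknown:
        raise ValueError(f"unknown evidence sources: {sorted(unknown)}")
    points = sum(SOURCES[name][1] for name in sources)
    if not is_stacked(sources):
        points = min(points, 5)  # unstacked evidence can't earn high confidence
    return max(1, min(points, 10))


print(confidence_score({"stakeholder_opinion"}))
print(confidence_score({"ux_research", "customer_feedback"}))
print(confidence_score({"ux_research", "web_analytics"}))
```

The weights matter less than the rule itself: a hypothesis with only one kind of evidence, however much of it, cannot reach the top of the Confidence scale.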

Anecdotally, most people in product, and their stakeholders, understand the value of building a strong hypothesis on many layers of insight, ideally including UX research. In reality, this is not what happens for most tests.

Most experimentation teams LOVE UX research; however, business stakeholders seem to perceive it as slow, and its impact on revenue is hard to communicate. Web analytics tools, feedback and observed behavior data, on the other hand, are very easy to get hold of, and an A/B test will often show an impact on revenue.

How and why to link UX research with A/B testing win rates

So why don’t companies track the impact good UX research has on forming good hypotheses and winning A/B tests? The main reasons seem to be that no one is asking for it and there is no proof (yet) that it works, largely because most teams operate in their own silos with their own measures.

In my experience, UX designers and researchers are in it to create an ideal user experience and make the world a better place. The fact that this may lead to greater revenue is welcome, but it’s not their primary objective.

This is obviously a different mindset from that of most ecommerce executives, who see UX as a tool to generate higher conversions. Many researchers may see a commercial measure of their work as too crude, one that ignores the depth and nuance of creating a great customer experience.

My personal belief is that this gap needs to be bridged, and one way to do this is by tracking the impact that UX research has on A/B test wins.

Over the last few months I have asked research teams and experimentation teams to work together and try to identify:

- Average A/B test win rate (between 20% and 36%; yes, I know this removes much of the nuance of experimentation, but it’s the wins that stakeholders care about most).

- Average A/B test win rate when some form of UX research with real users went into building the hypothesis or the designs being A/B tested (between 50% and 88%).

Even comparing the lower ends of the two ranges (20% vs 50%), the win rate was 30 percentage points higher when UX research was conducted as part of the wider product design process, before the A/B test cycle. Will a 30-point higher win rate justify more UX research? I expect it will, many times over.
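Because both figures are rates, it helps to be explicit about whether a gap is measured in percentage points or as a relative lift. This small Python sketch uses only the ranges reported in the list above and computes both measures; pairing the low end of one range with the low end of the other (and high with high) is an assumption about how to compare them.

```python
def compare_win_rates(baseline: float, with_research: float) -> tuple[float, float]:
    """Compare two win rates given as fractions (0.2 means 20%).

    Returns (absolute difference in percentage points, relative lift as a fraction).
    """
    if baseline <= 0:
        raise ValueError("baseline win rate must be positive to compute a relative lift")
    points = (with_research - baseline) * 100
    relative_lift = with_research / baseline - 1
    return points, relative_lift


# Low ends and high ends of the two ranges reported in the article.
for baseline, with_research in [(0.20, 0.50), (0.36, 0.88)]:
    points, lift = compare_win_rates(baseline, with_research)
    print(f"{baseline:.0%} -> {with_research:.0%}: "
          f"+{points:.0f} percentage points, {lift:.0%} relative lift")
```

At the low end, a 30-point gap means 2.5 times as many wins per test run, a 150% relative lift, which is a stronger way of stating the same result to stakeholders.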

My research is still in its infancy, but the message, though crude, made sense to the experts I spoke with and encouraged them. The sample size is too small to be conclusive and the findings are only anecdotal at the moment, but I’m hoping we can change this if more ecommerce companies begin to connect UX research with A/B testing.

As some of the experts explained to me, this brings us back to the importance of prioritization when launching A/B tests: the concept firmly influences the C in ICE, Confidence.

"It takes discipline to challenge each hypothesis and story behind them, but our results clearly show that when we do our homework and prioritize for confidence – the investment pays off."

David Sollberger

Conversion Optimization Specialist, Farnell

If experimentation teams can prove that hypotheses informed or backed by UX research are more likely to deliver a win, they can prioritize accordingly and raise their win rates. Beyond win rates, this could:

- Reduce the risk of a bad test

- Lead to fewer A/B test variants needed

- Avoid situations where KPIs go up but where the UX is adversely affected

All of the above in turn leads to faster time to market, less wasted development resource, faster decision making and, most importantly, the virtuous circle.

Many of the experts were keen to point out that this concept will directly benefit UX research managers, directors and VPs looking for a way to gain the attention of senior stakeholders.

Simply put, if we can show that UX research leads to higher A/B testing win rates then UX researchers will have an easier time gaining budget and proving the value of their team.

What’s the call to action?

As the experts told me, most experimentation systems can’t report on this out of the box right now, and this won’t change unless someone from experimentation, product or research does something about it.

Tools like Airtable, Jira, Confluence and Asana were all mentioned as ways to record A/B test hypotheses, their sources of inspiration and their results. Start tagging now and see what happens by the end of the next quarter; you can use the format at the end to help with this.
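Once hypotheses are tagged, checking for a difference at the end of the quarter is a simple grouping exercise. The Python sketch below assumes the test log has been exported to CSV with hypothetical columns `test_id`, `hypothesis_sources` (semicolon-separated tags) and `outcome`; the column names, tag values and the six rows are invented for illustration and are not data from the interviews.

```python
import csv
import io

# Invented example rows standing in for a test log exported to CSV.
# Column names and tag values are assumptions; rename them to match your tracker.
EXPORT = """test_id,hypothesis_sources,outcome
T1,web_analytics,loss
T2,web_analytics;stakeholder_opinion,win
T3,ux_research;web_analytics,win
T4,stakeholder_opinion,inconclusive
T5,ux_research;customer_feedback,loss
T6,ux_research;web_analytics,win
"""


def win_counts_by_tag(rows, tag: str = "ux_research") -> dict[str, tuple[int, int]]:
    """Split tests on whether `tag` appears in their hypothesis sources.

    Returns {"with_tag": (wins, total), "without_tag": (wins, total)}.
    """
    counts = {"with_tag": [0, 0], "without_tag": [0, 0]}
    for row in rows:
        sources = {s.strip() for s in row["hypothesis_sources"].split(";") if s.strip()}
        bucket = "with_tag" if tag in sources else "without_tag"
        counts[bucket][1] += 1
        if row["outcome"].strip().lower() == "win":
            counts[bucket][0] += 1
    return {bucket: (wins, total) for bucket, (wins, total) in counts.items()}


rows = list(csv.DictReader(io.StringIO(EXPORT)))
for bucket, (wins, total) in win_counts_by_tag(rows).items():
    rate = f"{wins / total:.0%}" if total else "n/a"
    print(f"{bucket}: {wins}/{total} wins ({rate})")
```

With only a handful of tests, any gap like this is noise; you need a reasonable number of tagged tests in each group before reading anything into the difference. Passing a different `tag` lets you run the same check for any other source of inspiration.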

If you don’t see any correlation, bin it and go back to normal life. If you start to see a difference, keep going and use this data to transform your product design process.

One last request: let me know! Is this a good idea? Has it worked for you?

With special thanks to:

Florentien Winckers - Albert Heijn, Angeliqua Dieye - IKEA, David Sollberger - Farnell, Melanie Kyrkland - Specsavers, Anand Vijay - IKEA (Al Futtaim), Erik van Houwelingen - Intergamma, Haylee Taylor - JD Sports, William Willcox - Holland & Barrett, Preston Daniel - AO.com, Stefan Twerdochlib - Werlabs, Maximilian Speicher - C&A, Praveen Kumar - Zalando, Mark de Winter - ClickValue, and the hundreds of UX researchers and designers I’ve met over the last three years, for inspiring this article.