10 tree testing tips and tricks

Tree testing is one of a researcher's most powerful techniques, unveiling insights into how our navigational information is serving users and how it can be improved.

Taking a website structure like a menu, a tree test asks users where they would look for information to achieve a goal. But like most things in life, research isn't always straightforward. So, we put together our top 10 tips and tricks to help you get started.

1. Choosing to run a tree test

Our first tip is to understand whether a tree test is the right method in the first place.

To determine whether you need a tree test (and not a different technique like card sorting or usability testing), start by asking yourself some key questions:

- Have I already identified possible navigational structures of information for the tree test?

- Are the labels and structure of my information what I need to test?

- Are we, internally, struggling to define the logical order of our navigation?

- When was the last time I tested my information architecture with those who use it?

If you don’t already have possible structures to test, then you might actually need a card sort. Read up on tree testing vs card sorting before you decide.

However, if you have a rough idea of the labels you might use and how the structure of information looks, then you need a tree test.

Tree tests are more effective if you haven’t yet narrowed down to one structure or label per piece of information. This is because tree testing gives you the freedom to be experimental.

2. Treat your tree test study as an experiment

Information is incredibly ambiguous. Take something as simple as a red pepper. If you’re talking to someone in the culinary world or looking in your local supermarket, you’ll find this item in the vegetable section.

However, a botanist will tell you that the red pepper is in fact a fruit. As a designer, how do you deal with this ambiguity? Do you categorize it by science, cooking, or let your users decide?

This is where tree testing helps to identify common patterns amongst the relentless ambiguity in information. Treat your tree testing as an experiment. Your structure may be the result of numerous card sorting tests, but that only determines groups of information when users can see all the options available. Tree testing looks at whether or not people can find what they are looking for in a predetermined structure.

Tree testing works best when you embrace the ambiguity of information and run multiple variations of a structure. Versions should include different labels and different structures. At the end of a tree test, you should understand what produced, contributed to, and caused your final findings and results.

3. Plan your steps to success

When you're confident that a tree test will answer your research questions, you need to ask yourself what a successful study should look like.

What type of findings do you want to end up with? How much budget do you have and how are you going to ensure your study fulfills your needs?

Clearly define what you need from the study. Having an idea of what you need to accomplish means you can work backward from the ideal future, defining the steps you need to get there. There is more than one way to run tree testing, so having a goal in mind helps uncover your strategy.

Does your tree test need to uncover issues in a menu structure that already exists? Or do you need evidence to propose a new structure for a product? All of these decisions will impact how detailed your study is, how long the work will take and how you analyze and use the findings.

4. Preparing your trees

While tree testing is experimental, it should not be a mystery.

Tree testing is seldom conducted as the sole technique in a study. Most of the time card sorting and tree testing are techniques that go hand-in-hand and for good reason.

Your card sort will highlight whether each label accurately represents its information, and how well labels are understood by users. Before conducting a tree test, you should ensure that your labels make sense with little or no context.

You're probably not going to be able to explain or clarify the information users would find under a label, and even if you're with your users while running the study, answering their questions will tarnish your results.

Your goal is that your information is discoverable and findable when users are at home alone without any help.

Secondly, you'll have the groups of your tree but not yet the priority of the information. Your information should tell a story or help the user guide their own.

The trees should have a defined logical order. Even if you don’t know the exact order of the final structure, use different structures as a variable for multiple tree tests. This will help to uncover which structure best suits your users. Experiment with the order of the who, what, why, and when.

Make a note of each tree and the variable you changed in it, so you remember its logic after the study. Now you are ready for participants!
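One lightweight way to keep that note is to store each version of the tree next to a description of what was changed. The sketch below is only an illustration: the `TreeVariant` class, its field names, and the example labels are all hypothetical and not tied to any particular tree testing tool.

```python
from dataclasses import dataclass


@dataclass
class TreeVariant:
    """One version of a tree, plus a note on what makes it different (hypothetical structure)."""
    name: str
    changed_variable: str  # what differs from the other versions
    tree: dict             # nested dict: label -> children (empty dict means a leaf)


def leaf_paths(tree, prefix=()):
    """Return every path from the top of the tree down to a leaf label."""
    paths = []
    for label, children in tree.items():
        path = prefix + (label,)
        if children:
            paths.extend(leaf_paths(children, path))
        else:
            paths.append(path)
    return paths


# Two made-up variants that differ only in where "Red pepper" lives.
variant_a = TreeVariant(
    name="A",
    changed_variable="red pepper grouped by cooking use",
    tree={"Fresh Food": {"Vegetables": {"Carrot": {}, "Red pepper": {}},
                         "Fruit": {"Apple": {}}}},
)
variant_b = TreeVariant(
    name="B",
    changed_variable="red pepper grouped by botany",
    tree={"Fresh Food": {"Vegetables": {"Carrot": {}},
                         "Fruit": {"Apple": {}, "Red pepper": {}}}},
)

for variant in (variant_a, variant_b):
    print(variant.name, variant.changed_variable, leaf_paths(variant.tree))
```

Keeping the note and the tree in one record means the reasoning behind each version is still available when you come back to analyze the results.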

5. Choosing the method to conduct your study

As with most user research methodologies, there are the same never-ending questions of "how many users do I test with?" and "should an interview be face-to-face or unmoderated?" etc. There are so many different recommendations for each of these questions because research is not simply black and white.

First, let’s tackle the question of how many users you need. Here are a few areas to consider:

- Who are your users?

- How many user segments do you need?

- How differently does each segment behave, and do you have sufficient representation of each?

- How big is your user base?

- If you’re at the beginning of building your product or software, will you have enough users to make results representative and statistically significant?

Industry experts like Nielsen Norman Group recommend around 50 users per test. But that is per user group and variation of the test.

Tree testing is a quantitative method, so it works best with a large sample of users. But if that’s not possible, then at the very least, aim to have the same number of users in each group so that you can make fair comparisons.
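Because the recommendation applies per user group and per variation, the total adds up quickly. The arithmetic can be sketched as follows; the function name and the example numbers are assumptions for illustration, and 50 is simply the figure quoted above.

```python
def participants_needed(user_groups, tree_variations, per_cell=50):
    """Total participants if every user group sees every tree variation.

    per_cell is the number of participants for one group on one variation.
    """
    return user_groups * tree_variations * per_cell


# Example: 2 user groups, each tested on 3 variations of the tree.
total = participants_needed(user_groups=2, tree_variations=3)
print(total)  # 300
```

If that total is out of reach, lowering `per_cell` equally for every group keeps the comparison between groups fair.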

Next is the unmoderated and moderated question. Tree testing is usually run unmoderated and remotely, as tasks shouldn’t be extensive and are usually part of a larger study that involves other techniques like card sorting and explorative research.

Tree testing can easily be set up and completed by users while you pull together other data points. Nothing is stopping you from taking the quantitative insights from your testing and noting them down to ask about in future qualitative sessions.

6. Writing effective tasks

Once you know how the study will be conducted, what your tree looks like and what success looks like for your study, you can start to write effective tasks. Each task should aim to uncover where users look for a common goal they need to accomplish on your site.

Tasks should be realistic for users, while using synonyms for the labels actually in the tree structure. This avoids users just clicking on the words mentioned and tarnishing results. So, what does an effective task look like?

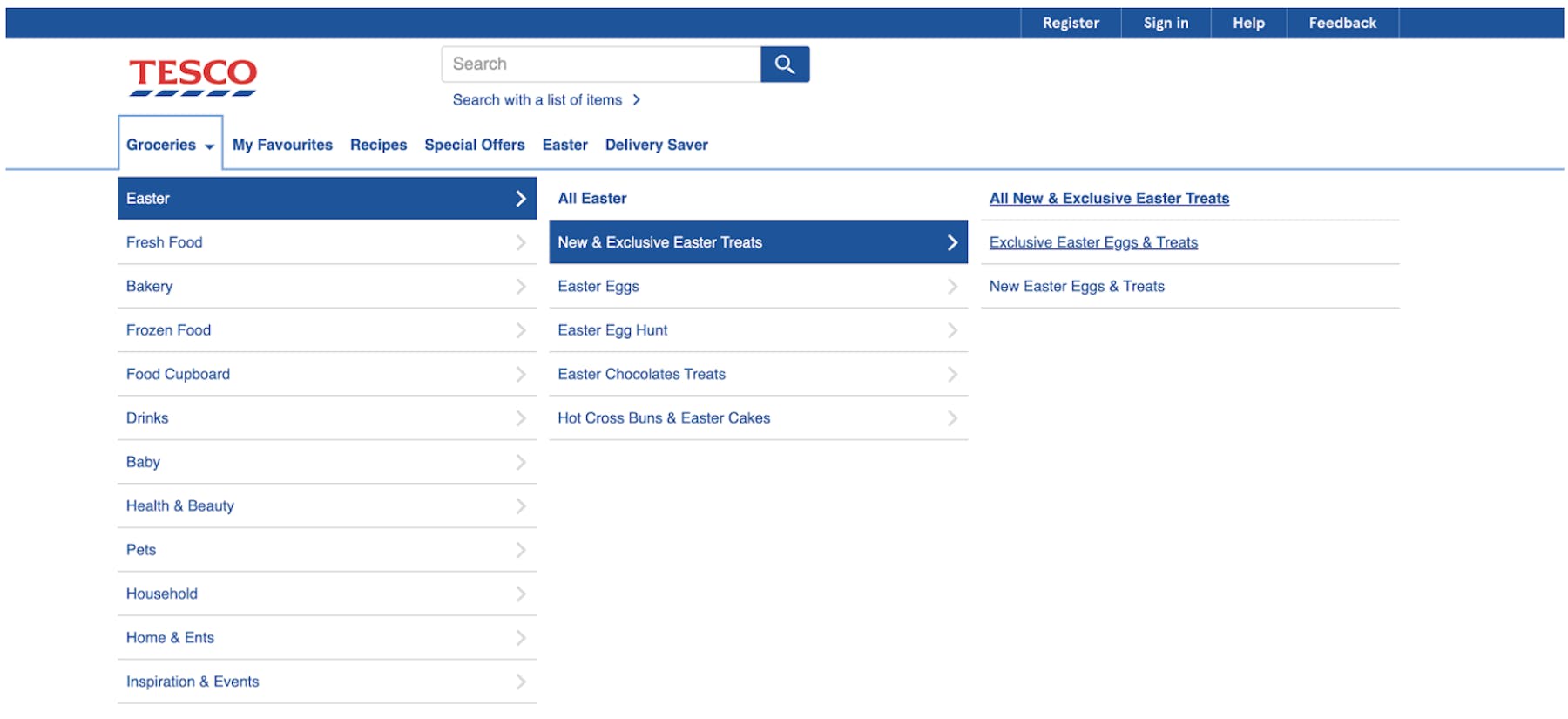

Well, let’s say you work for the retailer Tesco, where you have categories like “Health & Beauty”, “Fresh Food” and “Bakery” etc. As the designer, you want to see if users can find items related to the next special occasion, which in this case is Easter. This may be because stock for special occasions has a short shelf-life, and you want to make sure users can buy products in time for the occasion.

An example task would be:

“You’re hosting a get-together for the upcoming public holiday and you want to buy themed dinner party food for your guests. Where would you look for these items?”

There is some ambiguity in the task, but it is narrowed down by the theme of the next public holiday. It also doesn’t mention "Easter" explicitly, so users aren’t guided to that label. Of course, in your test "Easter" will not be highlighted as it is in the screenshot above.

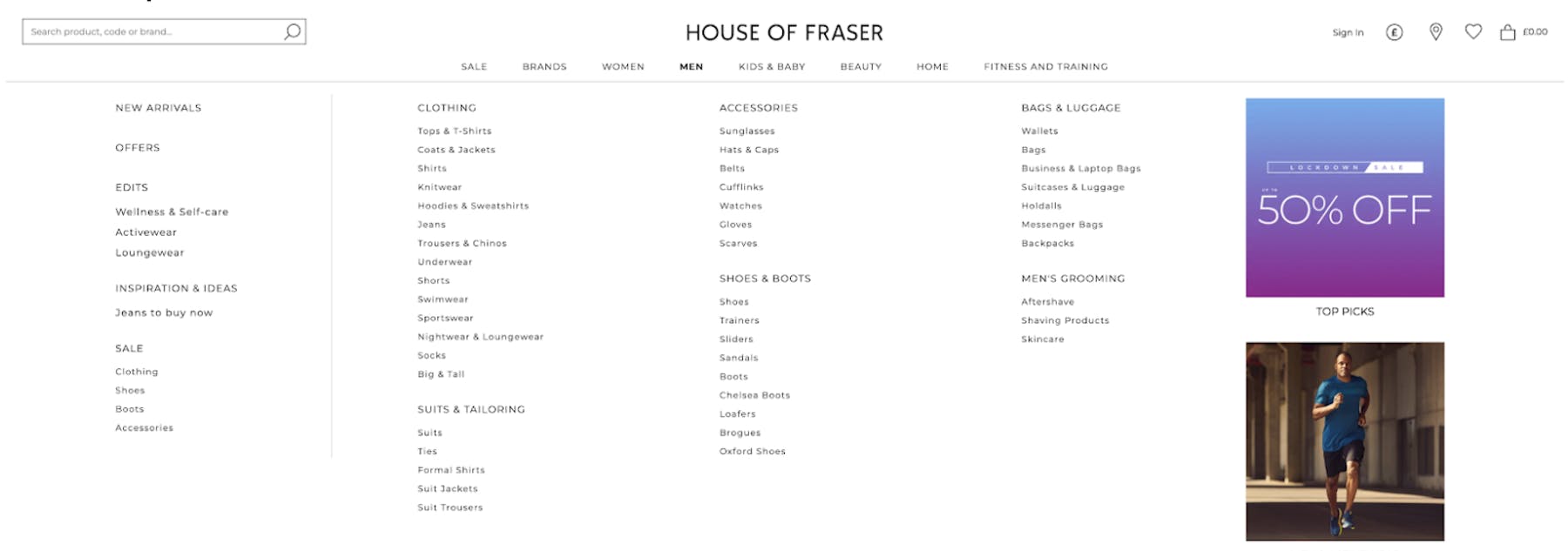

Let’s take another example. Say you need to test the information architecture for House of Fraser and you want to understand how users are supported in buying clothes for the next season. Your navigation menu looks like the accompanying image.

An example task for this scenario would be:

“You are looking for a gift for your dad so that he can trim his beard. Where would you look for a gift like this?”

Tasks should be vague enough so that they don’t direct users to the exact label, but also specific enough so that not every menu item is a correct possibility.

These tasks are great for testing realistic scenarios and are vague enough to start to uncover the user's train of thought as they navigate through your structure.
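A quick way to catch tasks that give the answer away is to compare the task wording with the labels in your tree. The helper below is a rough sketch under stated assumptions: the function name, the ignore list, and the example tasks are hypothetical, and it only catches exact word matches, not synonyms or plurals.

```python
import re


def leaked_label_words(task, labels, ignore=frozenset({"and", "for", "the", "of"})):
    """Return label words that also appear in the task text (case-insensitive)."""
    task_words = set(re.findall(r"[a-z']+", task.lower()))
    leaked = set()
    for label in labels:
        for word in re.findall(r"[a-z']+", label.lower()):
            if word not in ignore and word in task_words:
                leaked.add(word)
    return sorted(leaked)


labels = ["Health & Beauty", "Fresh Food", "Bakery", "Easter"]

leaky_task = "Where would you find Easter chocolate?"
vague_task = "Where would you look for themed treats for the upcoming public holiday?"

print(leaked_label_words(leaky_task, labels))  # ['easter']
print(leaked_label_words(vague_task, labels))  # []
```

A flagged word is a prompt for review rather than an automatic failure; a generic word that overlaps with a label may be acceptable if it does not point users to one obvious answer.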

7. Screen your users, again

Working with users, in reality, is working with people. People come in all shapes, sizes, attitudes, temperaments and characteristics.

You can write screeners to your heart's content and inform recruiters that only the finest customers will do, but some people still manage to answer every screener with the perfect response, despite not being relevant in the slightest.

Always screen your users again, just before they do the tasks. At this point, users are less inclined to fabricate responses because they’ve already succeeded in being invited to participate. You may still have to compensate them for their time, but if you can spot the inaccuracies then you can remove them from your final insights. It is a lot cheaper to pay them at this point and remove their results than to build a product based on inaccurate insights.

Include a few questions like “What are the reasons why you use our product/service?” or “Tell me about the last time you achieved a similar task/goal." These questions will help you to understand how relevant the study is for the user and you can start to paint a picture of how emotionally invested they are.

It also helps ease users into the mindset of actually using your product and finding the information they would normally look for.

8. Always ask users why

Running a tree test in the typical unmoderated and remote fashion makes it very difficult to uncover users’ reasoning behind their choices, simply because you’re not there to ask them.

Moderating each participant is overkill for most tree test studies, since tree testing is a quantitative method, but it is still vital to include questions that probe users about their decisions.

So, our next tip is to always include post-test questions to uncover final thoughts and reasons, and to always ask why. These questions should aim to uncover answers like how easy or difficult users found the tasks and why; what labels they felt were missing and why; and how they felt using the tree and why.

It may be tempting to also ask users why they made the decisions that they did. This is not a bad question to ask, but only if you can remind them which choice they made.

9. Piece together your story

Tree testing results tell a story of how users acquire information in your data structure. Results are usually divided into four parts, and some platforms will give you data visualizations of the metrics: success rate, directness, time spent, and path measures.
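If your platform only gives you raw results, the first three metrics are straightforward to calculate yourself. The sketch below assumes a simple, hypothetical record per participant and task (`Attempt`, with made-up field names) and treats directness as the share of attempts with no backtracking; check how your own tool defines each metric before comparing numbers.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Attempt:
    """One participant's result for one task (hypothetical export format)."""
    destination: str   # label the participant chose as their final answer
    backtracked: bool  # True if they moved back up the tree at any point
    seconds: float     # time spent on the task


def summarize(attempts, correct_destinations):
    """Return success rate, directness and median time for one task."""
    n = len(attempts)
    successes = sum(a.destination in correct_destinations for a in attempts)
    direct = sum(not a.backtracked for a in attempts)
    return {
        "success_rate": successes / n,
        "directness": direct / n,
        "median_seconds": median(a.seconds for a in attempts),
    }


attempts = [
    Attempt("Easter", False, 8.0),
    Attempt("Easter", True, 31.0),
    Attempt("Bakery", False, 12.0),
    Attempt("Fresh Food", True, 45.0),
]
summary = summarize(attempts, correct_destinations={"Easter"})
print(summary)
# {'success_rate': 0.5, 'directness': 0.5, 'median_seconds': 21.5}
```

The median is used for time here because a few very slow participants can distort an average; that choice is a judgment call rather than a rule.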

Analyze the results, pull out the story and add some flair. Your story should be captivating, something even you would read time and time again.

- Start with your participants. Who took part in the study? What were their key demographics? Were they participants who would be emotionally invested in the tasks? Set the scene with who the story involves.

- Next: Directness. What was your participants’ thinking process? How many directly chose the correct answer, versus those who ventured far and wide through all the options? The more directness, the more confident the user appears. Although that doesn’t mean they were confident and correct.

- Then: How successful were participants in their tasks, and how long did they take? If a user only backtracked twice but took five minutes to decide on a label, this is just as much of a problem as if they clicked through the majority of the tree and took only a few seconds to decide.

- Finally: Path measures, such as first click and destination. Which labels were immediately selected to start the quest? Which items were the chosen final answers?

Now, all of these together start to answer your research questions and form the story of your study. All you have to do is tell the story in an impactful way, to those who aren’t research-savvy and weren’t there from the beginning.
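The path measures mentioned above, first click and destination, can be tallied from the sequence of labels each participant clicked. This is a minimal sketch assuming each path is recorded as a list of labels in click order; the function name and the example labels are hypothetical.

```python
from collections import Counter


def path_measures(paths):
    """Count which labels were clicked first and which were chosen as final answers."""
    first_clicks = Counter(path[0] for path in paths if path)
    destinations = Counter(path[-1] for path in paths if path)
    return first_clicks, destinations


# Each inner list is one participant's clicks, in order, for a single task.
paths = [
    ["Food", "Seasonal"],
    ["Gifts", "Food", "Seasonal"],
    ["Gifts", "For him"],
]
first_clicks, destinations = path_measures(paths)
print(first_clicks.most_common())   # [('Gifts', 2), ('Food', 1)]
print(destinations.most_common())   # [('Seasonal', 2), ('For him', 1)]
```

In this made-up data, most participants started under "Gifts" even though most ended at "Seasonal", which is the kind of mismatch worth calling out in your story.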

10. Question your insights

Tree testing is rarely conducted as an entire testing project and research rarely has a definitive end. User experience is incredibly subjective and as designers and researchers, we must take our insights and further question the results.

A user may be directly choosing the ‘right answer’, but does the positive experience come from exploring the information in the first place?

Let’s say your users want to find a gift for someone. The journey isn’t as straightforward as clicking on ‘gifts’. You can buy a gift based on age, gender, interest or current trends. If you are unsure about gifts to buy, then browsing information and being inspired along the way is positive and the time spent isn’t necessarily a useful measurement. The same can be said for other user experiences like puzzles or gaming.

The final step is to bring together all the needs of the user, the business goals and an understanding of what it means to have a positive experience in acquiring information through these structures.

In summary

Tree testing is just one step in uncovering insights into your information architecture. As with other user research methodologies, you have to ask yourself what success looks like for your test and what steps you need to take to achieve it.

Our tips and tricks are here to guide you at each point of tree testing, from deciding whether tree testing is right for you, through preparing effective tasks and selecting your participants, to presenting the story hidden amongst the metrics of directness and time spent.