A comprehensive guide to A/B testing for CRO and UX

Here's a familiar scenario: you're at a fork in the road with two different versions of a web page, product idea, or marketing campaign. Both options seem compelling. How do you choose which path to take? A/B testing, or split testing as it's also known, is your secret weapon for selecting the best option.

A/B testing means splitting your audience randomly into two groups and letting their preferences deliver the final verdict. One group encounters version A while the other encounters version B. By observing how each group interacts with its respective version, you can move beyond guesswork and learn what genuinely resonates with your target audience.

The two versions might differ in design, messaging, layout, or calls to action. Whatever the variation, comparing how each group interacts with its version shows what genuinely resonates with your audience.

What is A/B testing?

A/B testing can be used to compare the performance of a webpage, app, email, or advert by pitting a control, ‘A’, against a test variant, ‘B’.

A/B testing works by randomly splitting your inbound traffic equally between the two versions. The data captured from each group's interaction with its version can help you make informed, evidence-based decisions about marketing, design, and user experience.
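To make the random split concrete, here is a minimal Python sketch of one common way to do it: hashing a visitor ID so that each visitor lands in A or B with roughly equal odds and always sees the same version on return visits. The function name `assign_variant`, the experiment label, and the visitor IDs are all hypothetical, and real testing platforms may assign traffic differently.

```python
import hashlib


def assign_variant(user_id: str, experiment: str = "homepage-test") -> str:
    """Deterministically assign a user to 'A' or 'B' with roughly equal odds."""
    # Hash the experiment name together with the user ID so the same user
    # always gets the same version within a given experiment.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Treat the hash as a large integer: even values go to A, odd values to B.
    return "A" if int(digest, 16) % 2 == 0 else "B"


# Hypothetical visitor IDs, for illustration only.
visitors = [f"user-{i}" for i in range(10_000)]
counts = {"A": 0, "B": 0}
for visitor in visitors:
    counts[assign_variant(visitor)] += 1

print(counts)  # the two groups should be close to 5,000 each
```

Hashing rather than flipping a coin on every page load is a design choice: it keeps the experience consistent for returning visitors without storing any assignment.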

As such, an A/B test can often tell you if a proposed new feature or design change is a good or bad idea before committing to it permanently. This is done by measuring the impact on a given conversion goal, for example clicking or opening a link, completing a form, buying a product, or finishing a checkout process.

Common elements for testing include headlines, text, images, colors, buttons, layouts, and features. However, ideally there should be only one element different between each variation, so if you’re testing the layout then you should not alter the text. By isolating individual elements in this way, you can get a clear representation of their influence within the user journey.

Why should you A/B test?

A/B testing ultimately takes the risk and guesswork away from making changes.

Developing a website or app can incur significant investment and may require some change to your overall marketing strategy. If a substantial design change results in a downturn in conversion performance, it can result in wasted investment and could have a negative impact on business performance.

Through A/B testing, this risk is greatly reduced. You can target individual features to see the effects of changing them without making any permanent commitments, allowing you to predict long-term outcomes and plan your resources accordingly.

Furthermore, A/B testing can help you understand the features and elements that best improve your user experience. Sometimes, even the most minor changes can have a huge impact on conversion rates.

The power of A/B testing lies in its data-driven nature. It's not about going with your gut or playing favorites; it's about letting real, actionable data guide your decisions. This approach helps in several ways:

- Boosting conversion rates

- Elevating user experience

- Optimizing marketing efforts

- Validating ideas

- Fostering continuous improvement

By adopting A/B testing, you’re not just making changes based on what you think might work. You’re making informed, data-backed decisions that enhance the user experience, improve conversion rates, and ensure your marketing resonates with your audience. It’s about continuously learning from your audience, iterating based on evidence, and delivering experiences that truly connect.

So, if you're keen on driving better results and making decisions that are truly informed by what your users want and need, let A/B testing be your guide. It’s not just a tool; it’s your roadmap to creating more engaging, effective, and user-friendly websites and campaigns. With A/B testing, data takes the driver's seat, steering your digital strategy toward success with confidence and precision.

But how much impact can one feature on a webpage have?

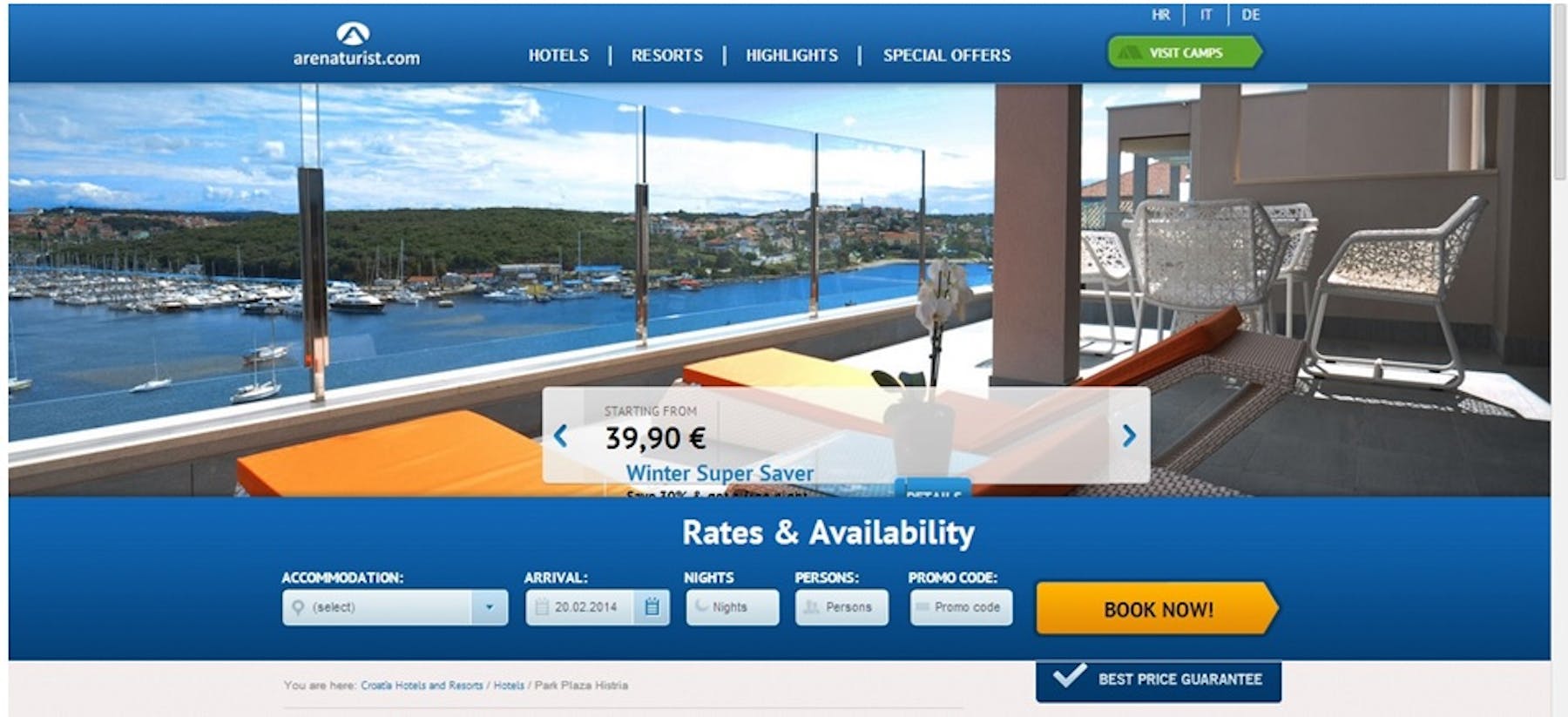

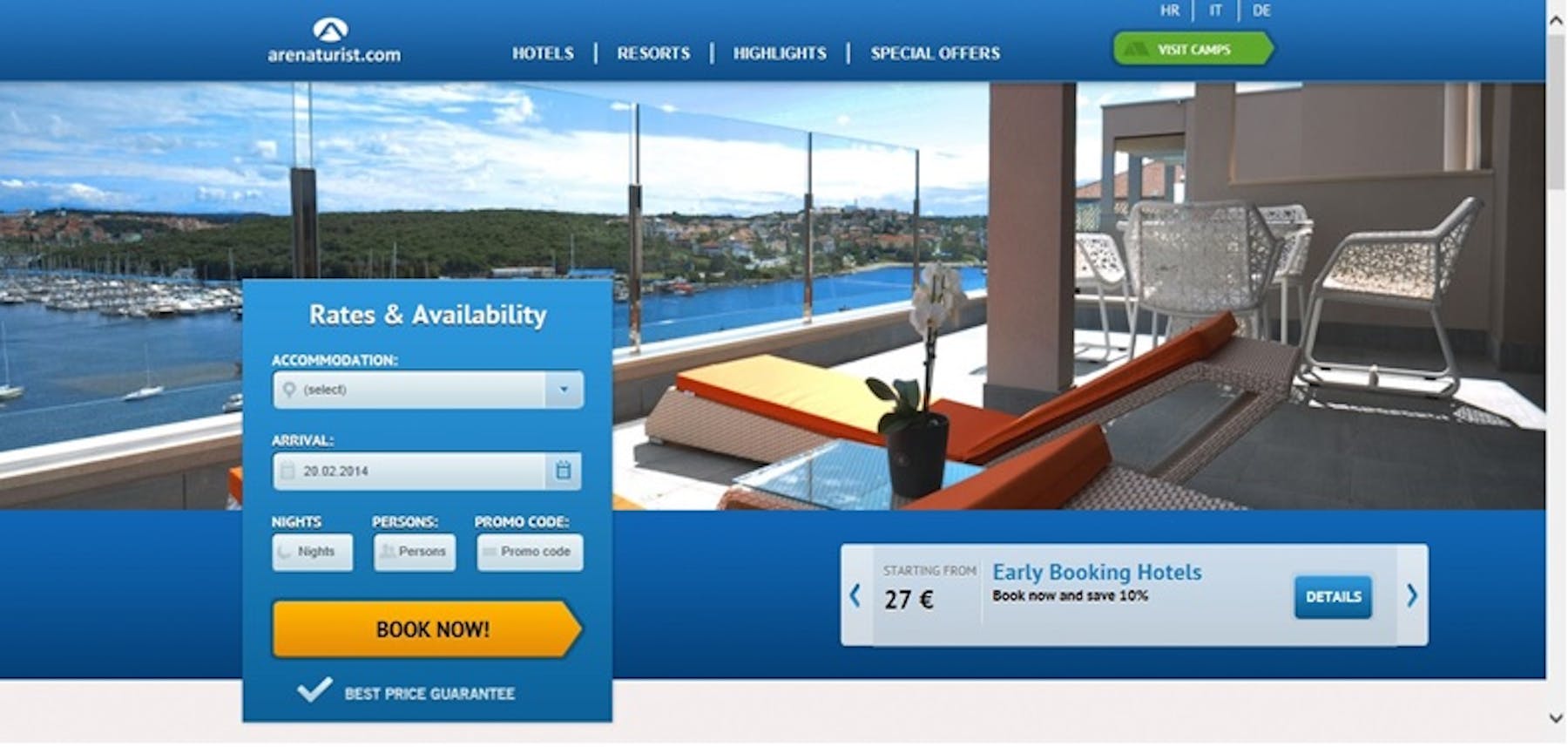

Through A/B testing, hotel booking site arenaturist.com found that a vertical form (vs. a horizontal form) had a huge impact on users and conversion rates.

Their aim was to increase form submissions, and this small change achieved exactly that, raising submissions by an impressive 52%.

Switching from a horizontal to a vertical form had a significant impact on users and massive potential to increase conversions and revenue, especially when you remember that this is just the first step in the booking process.
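A figure like 52% is a relative lift: the change in conversion rate divided by the control's conversion rate. The Python sketch below shows the arithmetic. The visitor and submission counts are invented purely to illustrate the calculation and are not arenaturist.com's actual data; the function names are hypothetical too.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Share of visitors who completed the goal."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return conversions / visitors


def relative_lift(control_rate: float, variant_rate: float) -> float:
    """Relative change of the variant's rate compared with the control's."""
    if control_rate <= 0:
        raise ValueError("control_rate must be positive")
    return (variant_rate - control_rate) / control_rate


# Invented numbers for illustration only (not from the case study).
control = conversion_rate(conversions=100, visitors=2000)  # horizontal form
variant = conversion_rate(conversions=152, visitors=2000)  # vertical form
lift = relative_lift(control, variant)

print(f"Control: {control:.1%}, variant: {variant:.1%}, lift: {lift:.0%}")
```

Note that a large relative lift can correspond to a small absolute change, so it helps to report both rates alongside the lift.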

Benefits of A/B testing

Improved user experience

Users are always looking to do something on your website. Often, there are blockers or pain points which hold users back from being able to complete their task. The result is a poor user experience. We can identify these blockers with user research, and then A/B testing is how we find a solution. The data we gather through A/B testing helps us to make decisions that improve the user experience, which we know can have a positive impact on engagement and conversions.

Improved return on investment (ROI)

A/B testing is a powerhouse for maximizing your return on investment. By optimizing your existing web pages or marketing campaigns, you're not just shooting in the dark; you're making calculated decisions that enhance the performance of your current assets.

The beauty of A/B testing lies in its ability to leverage your existing traffic. Instead of pouring resources into acquiring new visitors, you focus on converting a higher percentage of the ones you already have. Even seemingly minor tweaks—like changing the color of a call-to-action button or modifying the headline of a landing page—can lead to significant improvements in conversion rates.

This method offers a high ROI because it allows for targeted improvements based on real user data, minimizing the need for large-scale, costly overhauls or the risks associated with untested new features.

In essence, A/B testing empowers you to make more revenue from your existing user base with a relatively small investment, ensuring that every dollar you spend on optimization works harder for you.

It's affordable and scalable

One of the most appealing aspects of A/B testing is its accessibility. Modern A/B testing tools have democratized the optimization process, making it possible for anyone, regardless of technical expertise, to run experiments.

These platforms are designed with user-friendliness in mind, offering intuitive interfaces, drag-and-drop functionality, and clear analytics. This ease of use means you don’t have to be a developer or data scientist to understand how to set up and interpret tests.

Furthermore, the cost barrier to entry is low. Many platforms offer free tiers or affordable plans that make A/B testing accessible even to small businesses and startups. This affordability and the simplicity of setting up tests means that improving your website or app’s performance is no longer a resource-intensive endeavor.

You can start optimizing with just a few clicks, making it an efficient way to enhance user experience and conversion rates without significantly investing time or money.

You can test your competitor’s ideas

Keeping an eye on your competitors is crucial in the competitive digital landscape. A/B testing offers a strategic advantage by allowing you to test how well your competitors' ideas could work for your audience.

For instance, if a competitor introduces a new feature or redesigns their product pages in a way that seems to resonate with their audience, you can use A/B testing to experiment with similar changes in a controlled manner.

This doesn't mean copying your competitors blindly but rather taking inspiration from their strategies to see what might resonate with your own users. By conducting these experiments, you can gather data on whether adopting similar features or designs improves user engagement, satisfaction, or conversions on your platform.

This approach allows you to make informed decisions about incorporating new elements into your user experience, ensuring that any changes you make are backed by evidence of their effectiveness with your audience.

It can validate (or invalidate) opinions

Everyone has their own ideas on what will work best on your site. And some people’s opinions can be more influential than others. A/B testing these ideas can provide clear data to support—or disprove—whether ideas and implementations are worthwhile.

Your business can become more customer-centric

Optimizing features to improve the user journey will automatically enable your business to focus on the customer, especially when tests are repeated with features becoming more user-friendly.

Ultimately, A/B testing provides a relatively simple way to analyze and improve the performance of a webpage. With limited risk and cost involved, you can continually optimize content to improve the user journey, experience, and conversions.

When to conduct A/B testing

1: After completing user research

User research can uncover issues and pain points within the user journey.

For example, people might struggle with a menu layout or a form. Your user research could include session recordings, live testing, focus groups, or surveys. You might then ask questions or set tasks to find out why people struggle with the menu, which features they’re struggling with the most, etc. You could then use the insights from this research to design a new menu – and A/B test it against the old one to see if performance improves.

2: When your conversion rate is falling

A declining conversion rate is a clear signal that it’s time to employ A/B testing. This downturn could be due to various factors, including changes in user behavior, emerging competitor offerings, or your website not meeting user expectations as it once did. A/B testing allows you to dive deep into your analytics, identify at which points users are disengaging, and pinpoint the reasons behind the drop-offs.

For example, if your data suggests that users are abandoning their shopping carts at an increased rate, this could indicate issues with the checkout process. By A/B testing different aspects of this process—such as simplifying forms, adding trust signals, or testing different checkout button designs—you can identify what changes lead to an improvement in conversion rates. This methodical approach ensures that your decisions are data-driven, targeting specific areas for improvement based on actual user behavior.

3: When redesigning your website

Web redesigns can damage traffic and conversion rates—think 404s, lost linkbacks, broken crawlers, and plummeting rankings. User experience can also suffer. The site might look better, but users may not know how to navigate it. A/B tests should be carried out before, during, and after any redesign to ensure the site is as effective and usable as possible.

You can use existing A/B test data as a basis for a redesign. If the redesign doesn’t produce a statistically significant improvement in an A/B test, rethink and refine your approach.

“Be willing to go beyond just testing ‘variations on a theme’ (you might be surprised). If you're working on a major site redesign or overhaul, don't wait until the new design is live to A/B test it. A/B test the redesign itself.” Dan Siroker, A/B Testing: The Most Powerful Way to Turn Clicks Into Customers

4: When you add a new feature, plug-in, or service

Introducing new features, plug-ins, or services to your website can significantly impact the user journey, especially if these additions affect critical conversion points like the shopping cart or sign-up forms. Before fully committing to these changes, A/B testing allows you to assess their impact in a controlled environment.

For instance, if you’re considering a new plug-in for your online store that promises to streamline the checkout process, A/B testing can help you evaluate whether this plug-in genuinely enhances the user experience or introduces confusion and friction that could deter purchases.

By comparing the performance of the new plug-in against the existing setup, you gain valuable insights into its effectiveness, ensuring that any changes made to your site are genuinely beneficial to your users and your bottom line.

5: When you want to increase revenue

Ultimately, the goal of optimizing your website is not just to improve the user experience but to translate those improvements into increased revenue. A/B testing is an essential strategy for achieving this objective.

By adopting an ongoing A/B testing process, you can continually refine and optimize every element of your site, from landing pages and product descriptions to checkout flows and call-to-action buttons.

This continuous optimization cycle allows you to stay ahead of user preferences and industry trends, ensuring your website always performs at its best. For example, testing different pricing strategies, promotional offers, or even the placement of customer reviews can unveil strategies that significantly boost conversions. Each successful test represents an opportunity to enhance the user experience in a way that encourages more conversions, leading to higher revenue.

A/B testing examples and elements to test

Some page features have a more significant impact on users than others. Use A/B testing to optimize influential features within the user journey:

Headlines

The page headline hooks viewers to stay on the page. It needs to generate interest and engage users. You could test headline variations, such as:

- Long vs. short

- Positive vs. negative

- Benefit-oriented vs. feature-oriented

Email subject lines can also undergo similar A/B testing, with the goal of users opening the email. Subject lines with different features can be tested:

- Questions vs. statements

- Personalization

- With emojis vs. without emojis

- Different power words or verbs

Design and layout

Pages should include relevant content in a layout that isn’t cluttered or confusing. You can find optimum content and combination layouts by testing:

- Features in different locations

- Customer reviews and testimonials

- Images vs. video

Some user research tools include qualitative test features like heatmaps and click maps. These can support A/B test data to find any distractions or dead clicks, i.e., clicks that don’t link to another page.

Copy

Your audience will respond better to copy that's optimized specifically for them. You could use testing to find whether your audience responds better to:

- Long vs. short text

- Persuasive, informative, or descriptive tones

- Formal vs. informal

- Full-bodied paragraphs vs. bullet-pointed lists

Calls to action (CTAs)

CTAs are where the action on a page takes place – and may also be the conversion goal during a test. An example would be a colored button that says “click here.” A number of factors can influence user behavior at this point:

- CTA text vs. button

- Persuasive vs. informational copy

- Color of text/button

- Size of text/button

- Contrast between button and text size/color

- Location of CTA on the page

Navigation

Navigation can be the key to improving user journey, engagement, and conversions. A/B testing can help to optimize site navigation, making sure the site is well structured with each click leading to the desired page. Possible test features include:

- Horizontal vs. vertical navigation bars

- Page or product categories

- Menu lengths and copy

Forms

Forms require users to provide data to convert. While individual forms depend on their function, you can use A/B testing to optimize:

- Number of fields

- Form design, structure, and layout

- Required vs. non-required fields

- Form location

- Form heading and copy

Media

Images, videos, and audio files can be used differently depending on the customer journey, e.g., product images vs. product demonstration videos. A/B testing may determine how media can optimize different aspects of the user journey:

- Image vs. video

- Sound vs. no sound

- Product-based vs. benefit-based

- One media piece vs. several

- Autoplay videos

- Narration vs. closed-caption

Product descriptions

While descriptions are likely to depend heavily on the product, some elements can be optimized, such as:

- Brief and clear vs. longer and detailed

- Full-bodied paragraphs vs. bullet-pointed lists

Social proof

A/B testing could help determine whether adding social proof to your page is beneficial. You could test the formats and locations of features like:

- Customer reviews

- Testimonials and endorsements

- Influencer content

- Media mentions

7 steps to conduct A/B tests

“If you want to increase your success rate, double your failure rate.” Thomas J. Watson, former Chairman and CEO of IBM

A/B testing should be treated as an ongoing process with continual rounds of tests, with each test based and built upon data collected previously. Within a single round of testing though, you can use the following framework to start, carry out, and complete a test.

1: Select features to test

Your existing data can help you find ‘problem’ areas. These could be pages with low conversion or high drop-off rates. Use your own data to inform which features you should test. You could base A/B testing on your own or someone else’s gut instinct and opinion, but tests based on objective data and in-depth hypotheses are more likely to produce valid results.

2: Formulate hypotheses

Generate a valid testing hypothesis—consider how and why a certain variation may be better.

For example, you might want to improve the open rate of emails. So your hypothesis could be: “Research shows users are not responding to impersonal subject lines. If we include the user’s name in the subject line, significantly more users will open our email.”

3: Select an A/B testing platform

Testing tools are embedded into your site to help run the test effectively. There are a number of tools available, each having its own pros and cons. Look into each different platform to find out which would be the best choice for your tests and goals.

4: Set conversion goals

Decide how you will determine the success of each variation: for example, whether the user clicks through to a certain page, buys a product, or fills in a form. You set this goal within the testing platform, so it knows what to class as a successful conversion.

If the goal is to complete a form, for example, it’s logged once the user reaches a “thanks for your submission” message. Or if the goal was to play a video, it’s logged once the video is viewed. The testing platform will record each conversion and the test variation shown to the user. This will almost always require a snippet of tracking code to be added to one or more webpages.
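As a rough illustration of what that record-keeping amounts to, the Python sketch below stores one record per user (the variation shown and whether the goal was reached) and derives a conversion rate for each variation. The record layout, the function name `conversion_rates`, and the sample events are assumptions made for this example, not the format of any particular testing platform.

```python
from collections import defaultdict

# One record per user: (user ID, variation shown, goal reached?).
# Hypothetical sample data, for illustration only.
events = [
    ("u1", "A", True),
    ("u2", "B", True),
    ("u3", "A", False),
    ("u4", "B", True),
    ("u5", "A", False),
    ("u6", "B", False),
]


def conversion_rates(records):
    """Return the conversion rate for each variation seen in the records."""
    shown = defaultdict(int)      # how many users saw each variation
    converted = defaultdict(int)  # how many of them reached the goal
    for _user_id, variation, reached_goal in records:
        shown[variation] += 1
        if reached_goal:
            converted[variation] += 1
    return {variation: converted[variation] / shown[variation] for variation in shown}


rates = conversion_rates(events)
for variation, rate in sorted(rates.items()):
    print(f"Variation {variation}: {rate:.0%} conversion rate")
```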

5: Create variations to test

You can create variations in two ways, usually within your chosen testing tool. Remember to include a control or ‘champion’ page.

- Create two variations of a feature: To test a single feature on a page, create two different variations within your testing tool. The A/B tool will randomly select which variation the user sees.

- Create and redirect to two different pages: Create two almost identical pages – just change the testing feature. You’ll have two different URLs. The testing tool will randomly redirect some users to the alternate URL.

6: Run the test

Run the test over a pre-set time scale. Don’t cut it short if you see results earlier than planned; the average duration is 10-14 days, but this ultimately depends on your site and its traffic.

It’s crucial to consider and stick to a set sample size. The sample size needed usually depends on the change you expect to see. If you hypothesize a stronger change, you will need fewer users to confirm it.
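To see how the expected change drives the sample size, here is a minimal Python sketch using a standard normal-approximation formula for comparing two conversion rates. The 5% significance level, 80% power, and the example rates are assumptions chosen for illustration; your testing tool may use a different method and give somewhat different numbers.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, expected_rate: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate users needed in EACH group to detect the given change."""
    if not (0 < baseline_rate < 1 and 0 < expected_rate < 1):
        raise ValueError("rates must be between 0 and 1")
    if baseline_rate == expected_rate:
        raise ValueError("rates must differ to estimate a sample size")
    # z-scores for a two-sided significance level and the desired power.
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    # Sum of the binomial variances of the two groups.
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Hypothetical scenarios: a 5% baseline with a small vs. a large expected change.
small_change = sample_size_per_variant(0.05, 0.06)
large_change = sample_size_per_variant(0.05, 0.08)
print(f"5% -> 6% needs about {small_change} users per variation")
print(f"5% -> 8% needs about {large_change} users per variation")
```

Under these assumptions, the larger expected change needs only a fraction of the users that the smaller one does, which is why the hypothesis should be settled before the test starts.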

Also bear in mind that some users may have seen the page before; they may respond differently from users seeing the page for the first time.

If you’re testing emails and their content, you may have more control over the sample and what individual users see. You may need to randomly split the sample, and create and schedule the emails manually.
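If you do need to split an email list by hand, a random shuffle followed by a cut down the middle is one simple option. The Python sketch below assumes a plain list of addresses; the function name `split_recipients`, the `seed` parameter, and the example addresses are hypothetical.

```python
import random


def split_recipients(recipients, seed=None):
    """Shuffle the list and cut it in half, returning (group A, group B)."""
    rng = random.Random(seed)     # a seed makes the split reproducible
    shuffled = list(recipients)   # copy so the original list is untouched
    rng.shuffle(shuffled)
    midpoint = len(shuffled) // 2
    return shuffled[:midpoint], shuffled[midpoint:]


# Hypothetical mailing list, for illustration only.
recipients = [f"person{i}@example.com" for i in range(1, 11)]
group_a, group_b = split_recipients(recipients, seed=42)

print(len(group_a), "recipients get subject line A")
print(len(group_b), "recipients get subject line B")
```

Shuffling first matters: splitting a list that is sorted by sign-up date or surname could put systematically different people in each group.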

7: Collect and analyze data

Analyze your results using your chosen testing tools. Regardless of whether the test had a positive or negative outcome, it’s important to calculate whether the data is statistically significant.

Statistical significance indicates whether an observed difference between variations is unlikely to be due to chance alone. It can inform whether the test results and implications are reliable, and whether they justify making a change to your site.
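Most testing tools run this calculation for you, but a minimal Python sketch of one common approach, a two-proportion z-test, shows what is happening behind the scenes. The conversion counts are hypothetical, the function name is an assumption, and a given tool may well use a different statistical method.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z statistic, two-sided p-value) for B's rate versus A's."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the assumption that A and B perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_error == 0:
        raise ValueError("cannot test when no one or everyone converted")
    z = (rate_b - rate_a) / std_error
    # Probability of a difference at least this large if chance alone were at work.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: 200 of 4,000 convert on A, 260 of 4,000 on B.
z, p_value = two_proportion_z_test(conv_a=200, n_a=4000, conv_b=260, n_b=4000)
print(f"z = {z:.2f}, p-value = {p_value:.4f}")
print("Significant at the 5% level" if p_value < 0.05 else "Not significant")
```

A p-value below the threshold you chose before the test (0.05 is a common convention) suggests the difference is unlikely to be down to chance alone.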

Generally, running your test for a longer period of time – and allowing more users to be involved – lowers the risk of results being down to chance. Larger samples are often more representative of overall audiences. And so their behavior can more reliably represent how a whole audience would behave.

Analyzing the data

While A/B testing software will present quantitative data, you will need to analyze results effectively. And there are a few things to consider when doing so.

1: Focus on your goal metric

A/B testing can collect a lot of data on many aspects of user behavior. Focusing on your original goal metric is important. Your analysis and results will then align with your original hypothesis and goals.

2: Measure the statistical significance

A result is statistically significant when the difference observed between variations would be unlikely to occur by chance alone if the variations actually performed the same. This helps you judge whether the test results and implications are reliable, and whether they justify making a change to your site. Most testing tools include an analysis feature to calculate the statistical significance of data collected.

3: Base your next steps on the test results

If one variation proves to be a statistically significant positive change, then implement it. If the results are inconclusive or negative, use this knowledge as a basis for your next tests.

Understanding the why of A/B testing using qualitative research

As we said earlier, A/B testing provides quantitative (numerical) data, but may not reveal the actual reasons why visitors to each page behave the way they do.

UserTesting customers often run a usability study to understand the quantitative data they receive from their A/B testing. They may also run a think-out-loud study to probe more deeply into their two A/B designs and find out why one is performing better than another.

Top tips:

- Focus on your goal metric: even though you’ll collect a lot of data, focus on your initial goal.

- Measure significance: calculate whether results are statistically significant, i.e., are they enough to justify making a change?

- Take action based on results. If one variation is statistically better, go with that. If not, go again. Don’t count negative results as failures—learning what doesn’t work and what to try next is important.

- Reinforce your results by using qualitative testing to verify quantitative findings.

Challenges of A/B testing

A/B testing can carry some challenges. But they can usually be overcome by following an objective and thorough procedure:

1: Deciding what to test

Use existing data to determine when and why to test a feature. For example, check for pages or links with fewer conversions. Test one element at a time to pinpoint the influence on users easily.

2: Formulating hypotheses

Data should be used to identify issues and formulate objective theories and solutions.

3: Sticking to the sample size

The sample size should be determined before the test runs. People commonly cut testing short because they want quick results. However, A/B tests need a sufficient sample size for representative results.

4: Maintaining an ongoing test procedure

A/B tests should be repeated to ensure pages are continuously improved – this will help optimization efforts be effective in the long term. Use results and insights from each test to form the basis of the next. Learn from successes and failures. They can indicate how your users behave, and how other features can be optimized.

5: Staying objective

Try to ignore your own opinion and the results received by others. Focus on the statistical significance of results to ensure that the data—and any subsequent changes made—are justified.

When planning an A/B test, be sure to consider any external factors, such as public holidays or sales, that may influence web traffic. You should also research which testing tool is best suited to your needs. Some may slow your site down or lack the necessary qualitative tools, such as heatmaps and session recordings.

When driven by data and executed objectively, A/B testing can generate great ROI, drive conversions, and improve user experience. Subjective opinion and guesswork are taken out of the optimization process, so A/B testing can ultimately inform strategic decisions across your marketing efforts.

If decisions and tests are carried out randomly, based on opinion, they’ll probably fail. Start the testing process with clear data, a strong webpage, and a controlled process. It’s the best way to start a cycle of effective A/B tests and a great first step to a well-optimized site and user journey.
