How we used Machine Learning to improve Transcripts

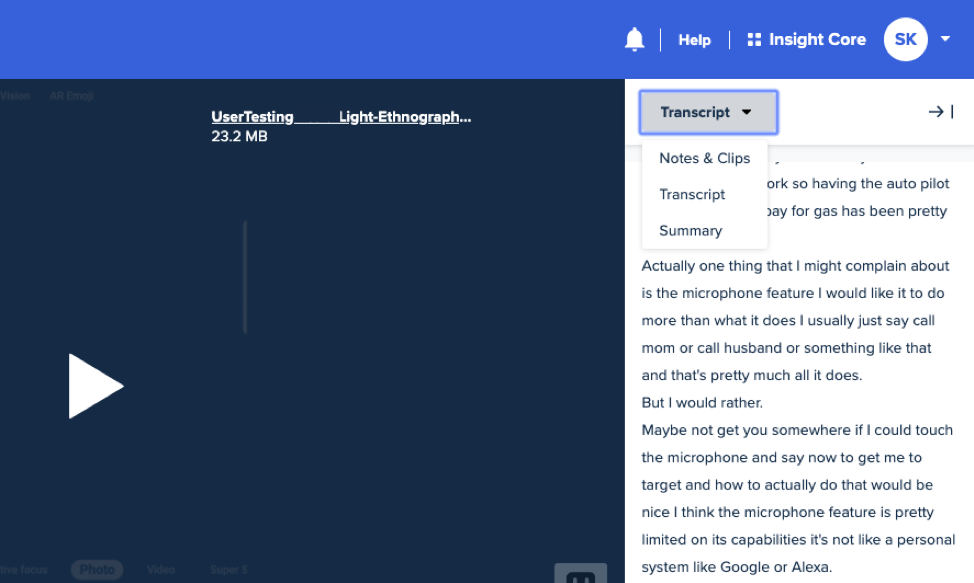

When customers are viewing the video of a completed test, helpful features speed up the analysis process. These include the ability to speed up or slow down video play speed, jump to any part of the session, create Notes & Clips, and immediately access the Transcripts.

We know that transcription can be subject to errors since it’s an activity completed by machines. So we worked with our transcription vendor to identify ways to improve accuracy—and in the process, we learned a lot.

Domain can impact the accuracy of transcripts

The bulk of vendors providing transcription services are either general purpose or optimized for use cases such as call centers, voice assistance, or conference transcription.

However, most UserTesting videos record feedback on ease of use and difficulty, satisfaction and delight, expectations and requests, and other ideas related to UX and the creation of better experiences. This means that words, phrases, and concepts that our customers are used to hearing may sound unfamiliar and downright foreign to an algorithm that is primed to transcribe customer support calls. And a mismatch between training and test domains can negatively impact transcription accuracy.

Machine learning allows us to make the software better

In order to help the Automatic Speech Recognition (ASR) system learn our domain language, we worked with our vendor to create a custom model. This is a core tenet of machine learning: the provision of a dataset that enables the machine to learn and change algorithms the more information that is processed. The resulting domain adaptation algorithms help bridge the domain difference or mismatch between the training data and test data.

Using proprietary video content, part of which was manually transcribed, we worked with our vendor to create a dataset that was then used to train the custom ASR model. Now the software better adapts to the common ambience or background noise in our recordings. When it hears a word that sounds sort of like “slick,” it knows that the word is likely “select.” Or if it hears something resembling “cook,” it knows that the word is probably “click.” We taught the model the language of UX and it, in turn, is much better at generating text that is a closer match to the words that were spoken during a test.

We’ve deployed this new model and we can already see that our Transcripts are more accurate. Premium subscription customers can view Transcripts from self-guided video recordings as well as Live Conversation sessions (English only).

Transcripts are a great time saver when analyzing completed tests. You can:

- Scan or do a search of the auto-generated text to immediately locate key insights

- Create video clips based on these insights you’ve located

- Download Transcripts into an Excel file for a record of feedback from testers, displayed side by side

- Copy key pieces of feedback for your reports or presentations

If you haven’t yet tried Transcripts, we’d love for you to give our updated feature a spin and then let us know your thoughts.

This post was written with assistance from Huiying Li (Machine Learning Engineer) and Krystal Kavney (Product Manager).