Setting clear objectives for your UX research

“What do people want?”

It’s a simple question... with a complicated answer: It depends.

It depends on the person in question, right? What are their hobbies? Their quirks? Their passions? To give an answer that's less vague than “it depends,” we need more information.

The same is true when testing the user experience of a site or app.

A broad question like “Does the app work?” or “Do users like the site?” will yield a broad answer like “it depends.” To get valuable feedback and actionable insights from a study, you’ll need to craft more detailed questions.

I’ve spent a lot of time writing about how to construct solid tasks and metrics-based questions, but today I want to step back and discuss the importance of solid study objectives or UX research questions.

So what exactly are we talking about?

Goals. Research questions. Study objectives. There are lots of terms and shades of variation, but I’ll use the term “objective” to describe any idea or question that we want to understand at a deeper level by performing research.

Objectives should be the driving force behind every task you assign and every question you ask, and they should focus on particular features or processes in your product.

As a UX researcher, I’ve learned that the more specific the objectives, the easier it is to write tasks and questions, and the easier it is to extract answers later on in analysis.

What distinguishes a “good” objective from a “bad” one?

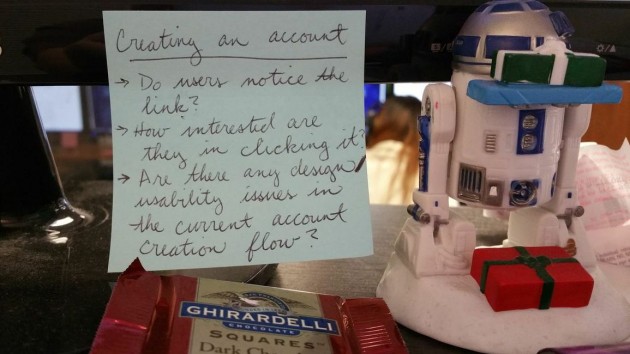

Mostly, it’s just common sense. Would you rather write a test using the objectives shown on the first post-it, or the second?

The first objective might seem clear at first glance, but it doesn't offer any specifics. (Though it does offer Starbursts.)

The second set of objectives gives much more specific parameters. (Plus, it comes with R2-D2 and a square of chocolate.)

As you can see, good study objectives are specific. They name a particular feature or process, and often call out individual elements or aspects within it.

A not-so-great objective

The first example above, which only asks whether users can create accounts easily, mentions a particular process. But when it comes to writing actual tasks and questions, you probably have to reach a bit.

You might also interpret the objective as being about usability and craft questions to address that, while another researcher might focus their tasks and questions on design.

You might craft a broad task, instructing users to simply create an account, while a colleague might walk users through each step of the process, asking questions all along the way.

The problem is that when the objective is unclear, two researchers could write very different test plans. And while the findings from both studies would be insightful, they might not match up with what the team actually needs to learn.

A much better objective

The second set of objectives above, however, is laser-focused on different aspects of the account-creation process. If you’re researching with these objectives in mind, you have a much better sense of what kinds of tasks and questions to include in your study, and what kind of information to send back to your team.

So how do I get those detailed objectives I need?

Talk to the team

First and foremost, you can get detailed objectives by collaborating with the product’s stakeholders. You can start the conversation at a high level, of course, by determining what features or processes they want to have testers review.

Once you’ve figured out the broad topics that the study will cover, it’s important to drill down.

If you’re having trouble getting stakeholders to be more specific, try reviewing any available analytics data to pinpoint areas of concern, such as pages with an unusually high bounce rate or an unusual average time on page.

You can also suggest a demonstration or walk-through of the feature or process in question; more detailed concerns may come to light as everyone goes through the experience together, and you get the chance to probe about different aspects.

Prioritize your objectives

Some researchers may encounter the opposite problem: stakeholders give you an abundance of objectives, and you need to figure out what to tackle first!

If this is the case, ask your stakeholders to prioritize their needs. This could happen over email or in a meeting, of course, but another option is to list all of the possible objectives in a Google Form and have everyone rank them by dragging them into their ideal order.

Finally, what do I do once I’ve got objectives to work with?

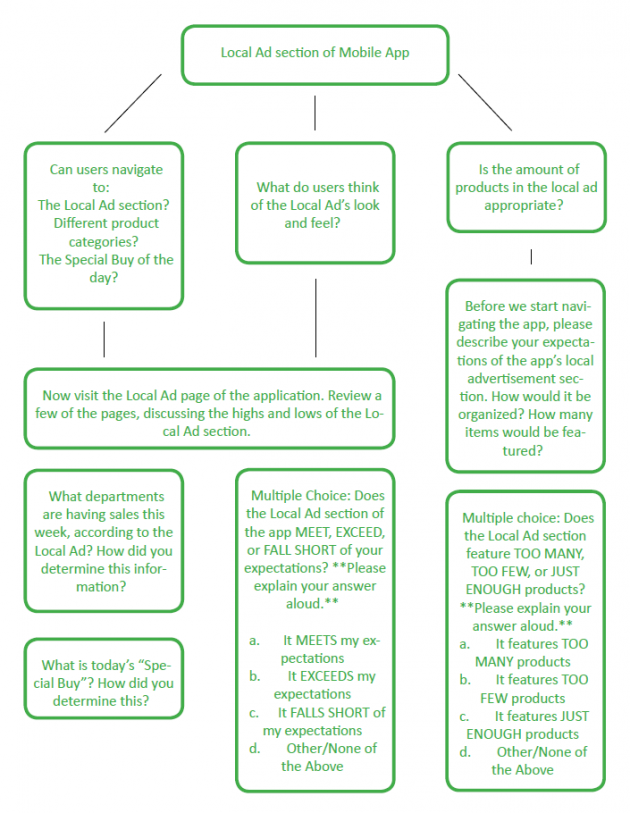

The UserTesting Research Team has a lot of methods for turning research questions into a test plan that yields solid answers, but they essentially boil down to this: turn each objective into 2-4 tasks and/or questions. Here’s a handy diagram for that:

Our team uses a worksheet like this one to make sure we're on track with specific objectives.

This worksheet ensures that your test plan stays focused as you begin the more detailed task of writing clear instructions and questions for your users.

Here’s an example of a recent project we worked on, and how the tasks fed back to the objectives provided:

By plotting our objectives onto the worksheet, we were able to craft focused tasks and questions for the test.

Visually tying back each task and/or question to a particular objective helped keep the test focused, improved the quality of the feedback, and resulted in better findings!

A few things are worth noting about this worksheet:

First, the worksheet doesn’t indicate the order in which tasks and questions should be posed to users. When I ran this test, I started with the first task listed in column 3, then used the task that spans columns 1 and 2. The multiple-choice questions came later, after users had spent some time exploring the Local Ad feature.

Second, a task or question can tie back to multiple objectives, as the task spanning columns 1 and 2 shows. This is a super-efficient way to get answers to your biggest questions about your site or app, but tread carefully; you don’t want to split a user’s focus too often. If many of your tasks are multipurpose, we strongly encourage you to do a dry run of the test to make sure users give you feedback that touches on each objective involved.

What do I do next?

If you’re a UserTesting Pro client, reach out to your Client Success Manager with your research objectives, and they’ll help you figure out how to turn them into actionable findings.

And if you’re not, well, we hope you found this discussion helpful! There’s a growing wealth of information about goal setting and company growth: RJMetrics recently hosted a webinar on the topic, published a whitepaper, and linked to a whole set of additional resources.

Go forth, and define those objectives!