Win friends and influence people: how to scale insights enterprise-wide

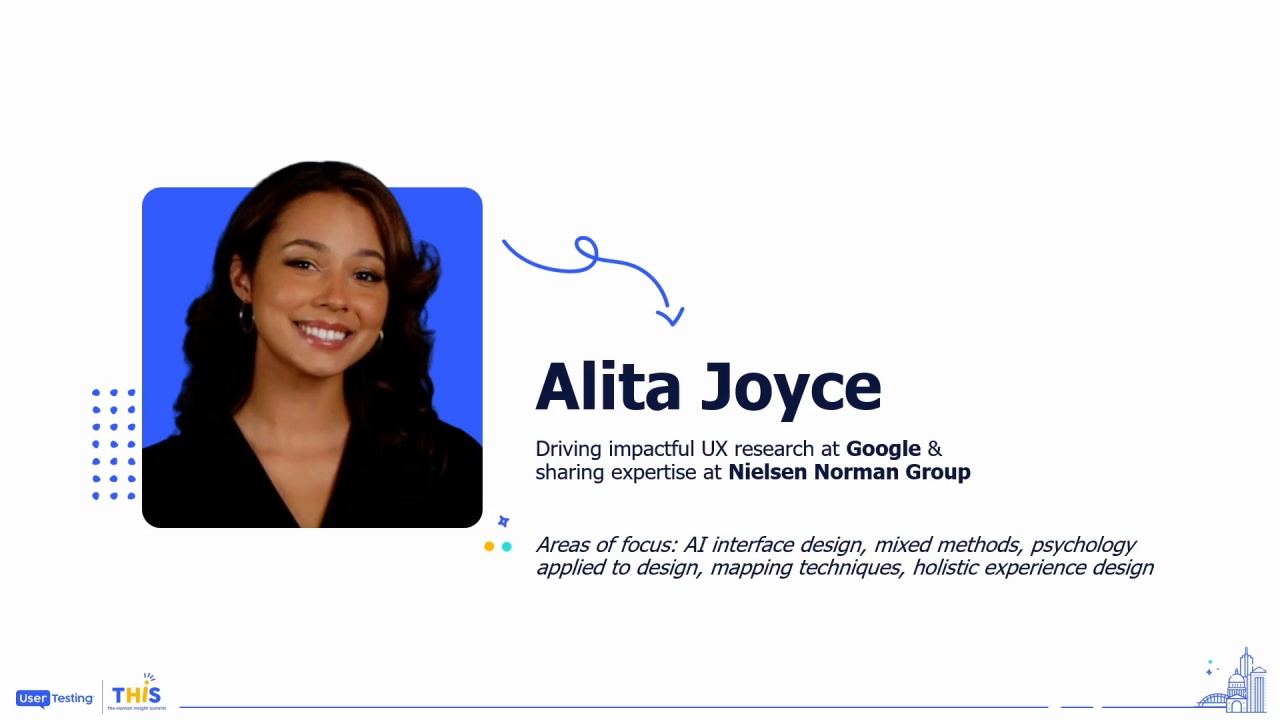

Ruchi Solanki

Senior Lead UX Researcher, Cox Automotive

Ruchi Solanki, Senior Lead UX Researcher at Cox Automotive, demonstrates how to quantify UX's impact on an organization, scale UX research by building strong relationships with stakeholders, and establish UX as a core component of product strategy. Ruchi will share strategies for aligning with organizational OKRs, effective resource allocation and communicating with data visualization. Attendees will learn to transform UX from a service to an integral element of product development, with practical tools and templates for immediate implementation, ensuring UX research is valued as a critical part of product success.

Good morning, everyone.

Real quick, just because I like to see who's actually in my audience. Folks who are researchers, raise your hands.

Oh, okay.

Any designers?

Oh, any product?

Just kidding. Just kidding. My twin sister's a product manager. It's fine. Anybody else? Engineers or other?

Other.

Interesting. Nice little crown. So I know who's booing. It's the people in the back. Okay.

Hi, everyone. Today, I am going to talk to you about one of my favorite topics, which is everything UX research, but specifically looking at insights and quantifying the value and impact of user research.

My presentation is not on there.

So it's gonna be... there we go. I should have mimed it, or had you help me with that.

Alright. So we're gonna spend a little bit of time working on creating the blueprint. And I think this is probably one of my favorites, because it's a lot of stuff that we don't talk about as researchers or even as folks working in corporate America. Then we'll spend a little bit more time on laying the foundation. This is where you're gonna do a lot of that work, a lot of that quant heavy lifting and kind of creativity.

A little bit of time building the house. This is actually where you're gonna spend more of your time in general, but we're just gonna touch on it a little bit. And then finally, we're gonna end with the housewarming. Once you can finally celebrate being amazing, what does that actually look like?

How do we actually communicate how amazing we are to our stakeholders? So, alright. I think some of this stuff is a little bit low. So, just to give you a little bit of context, again, my name is Ruchi Solanki.

I work for Cox Automotive. I am in a unique position, in that I am a manager as well as an individual contributor. So I have a very unique role within Cox Automotive.

So I've been with them for about three-plus years or so. And about three months into my tenure at Cox, the SVP actually came to me and asked me, what does great look like? What does best-in-class UX research look like in the industry?

And honestly, I had no idea, because I didn't know if anyone was really doing it. We were all struggling. We were all complaining. And really, I think the reason this topic is not just so well attended but so prevalent is that we are all in the same boat, just trying to understand what is actually happening.

So let's talk about creating the blueprint.

Starting with the basics.

So I love this slide and not just because I created it, but really because I wish I had this when I first started my career, specifically in research.

And it's stuff that no one tells you.

This is one of the hardest ones to learn.

Not the hardest, but one of them.

People need to like you in order to trust you and the data that you're presenting.

Period.

Think about the TV that you watch. Think about the media or the news that you consume. And we're not gonna get political here, but think about you are more likely to listen and agree and understand and absorb the information and the data that people are telling you if you already respect and you like and you agree with them. It makes it that much easier.

One of the most important parts of being a researcher, this is a little controversial or at least to me, is not necessarily being the researcher and doing the data and the analytics and all that great stuff. It's actually about building relationships with your stakeholders.

And don't get me wrong, it absolutely sucks when you have to constantly appease them and make them understand the value of the work that we're doing, but it is so incredibly important to what we do. There's a huge responsibility that comes with research, because data can be very easily manipulated.

And so our job is not only to get them to trust and like us, it's to make sure that we're providing support for our stakeholders, that we're advocating for our users, and that we are starting with empathy and trust.

The second point.

As Mike said, I am a researcher specifically focused on both quant and qual. And the reason why I pinpoint that particular piece is that there is this notion that sometimes quantitative data is a little bit superior.

It is not.

To really understand the holistic experience of our users, you need both quantitative data and qualitative data. That's how you get the context of what your users are actually going through when they're utilizing your tools.

It depends.

Seriously.

I'm not trying to be facetious, although I am my target audience when it comes to making myself laugh, so I did put that in there for me.

But it really does depend. And this is kind of my disclaimer, and it's not to say, you know, if you guys didn't learn anything, it's on you. But it really does depend on your organization. We all come from very different backgrounds and very different organizations that have different resources, different org structures, different priorities, different goals, etcetera. So you're not gonna take all of the stuff from here, although that would be great. Just give me a shout-out in your book.

It's going to be bits and pieces that work for you, and that's more important than utilizing everything that's in there.

KISS.

Keep it stupid simple. Or if you're self deprecating like myself, keep it simple stupid when you're communicating.

Making sure that you are keeping it short, keeping it sweet. Remember who your target audience is when you're talking to them.

All of our attention spans are very short now. So you wanna make sure that you're getting to the point early on, so that you guys can zone out for the rest of this conversation.

And then last but certainly not least, I think the hardest part that I mention here is knowing when to let go.

This is the hardest lesson that I had to learn.

We are all gonna be fighting the good fight for UX. We are all going to be fighting and advocating for our users, and it's difficult.

And there are going to be times when you have leadership and product and whoever else, insert department here, and they're not gonna take it, no matter how liked or respected you are or how significant your data is. And you're gonna have to be okay with it.

You're not gonna win every battle. You're not gonna win every war. That's not what we do. Our job is to support and move on to the next one.

So let's talk about laying the foundation.

Alright. So what does success actually look like? And this differs for pretty much every organization. This is what we use though.

There are different ways that we can measure success and the impact that we're having on an organization.

What's important when you're thinking about success, and about the data and how to quantify that, is to use what you already have.

Look at what data speaks to being user-centric and being data-driven or data-informed, so that when product is making decisions, we can pinpoint how we've impacted that.

Now this is data that we're capturing anyways.

So it's not extra work for me to do it. Well, it's some extra work. But it's not work that I have to go out of my way to utilize a different tool. It's Excel.

How many studies are we actually conducting?

It's a simple number here.

How diverse?

What percentage of our methodologies differ across our work? And making sure that we have the right balance, and we'll talk a little bit more about this in the next couple of slides, of methodologies and the different phases that we're actually contributing to.

Who are we connecting with? Who are we collaborating with? Who are we supporting and partnering with?

Research is not a silo. It should never be a silo. Sometimes it is, but that's where, again, building relationships is incredibly important, first and foremost.

And then last, again, thinking about the number of users interviewed.

Making sure that we're not only capturing diverse perspectives, but also capturing the right user for our testing.

And, again, using what we already have. None of this is new.
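The counts described above (studies conducted, methodology mix, partners, users interviewed) can be tallied from a plain study log. Here is a minimal sketch, assuming a hypothetical log with `method`, `partners`, and `participants` fields; the rows are invented for illustration and are not Cox Automotive data.

```python
from collections import Counter

# Hypothetical study log: the kind of rows you might already keep in a spreadsheet.
studies = [
    {"method": "interview", "partners": ["Product", "Design"], "participants": 6},
    {"method": "usability test", "partners": ["Design", "Engineering"], "participants": 8},
    {"method": "usability test", "partners": ["Product"], "participants": 5},
    {"method": "survey", "partners": ["Product", "Marketing"], "participants": 120},
]


def summarize(log):
    """Return study count, methodology mix (%), partners, and users reached."""
    total = len(log)
    method_counts = Counter(row["method"] for row in log)
    # Share of each methodology as a percentage of all studies.
    method_share = {
        method: round(100 * count / total, 1) if total else 0.0
        for method, count in method_counts.items()
    }
    partners = sorted({p for row in log for p in row["partners"]})
    participants = sum(row["participants"] for row in log)
    return {
        "total_studies": total,
        "method_share": method_share,
        "partners": partners,
        "participants": participants,
    }


summary = summarize(studies)
print(summary)
```

The same tallies are a pivot table away in Excel; the point is only that each number falls out of data already being captured.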

One of the key lessons that I've learned over my time, not just at Cox Automotive but at other companies that I've been with, specifically within the UX sphere, is speaking the same language that our leaders are speaking.

Thinking about what we already have in play, and building off of that. So for us, our product and our engineering and our UX are beholden to customer satisfaction.

And so we utilize customer satisfaction, and it's a quantitative metric that we have.

But in order to, like I said, mix methods and build upon that, we have the what. We take the verbatim responses that our users give, find those qualitative themes, so to speak, so you can make qual into quant, and then make actionable recommendations based off of that every month. And you're not always gonna have it perfect every single time, but it's important to at least start. You build that relationship, and you build the expectation that they're getting this information.

Thinking about UX-Lite, and I put this in here when I talk about building off of that. We've been using CSAT since I got there. I think maybe four months in, I made the case that our UX research team should be responsible for pretty much reporting on that.

We've recently incorporated UX-Lite, which is basically a usability metric like SUS, just two questions, for our B2B and B2C teams.

But we're utilizing that with our CSAT, sneakily, so that they become comfortable with that metric. And again, making sure that we're all beholden, especially as UX, to be accountable for the work that we're doing. Because CSAT is a very vague metric. We can't control the economy. We can't control interest rates. Although I wish we did, we don't.

At least with a usability metric, with UX-Lite, we know that we have a tangible way of actually impacting the business.
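The talk describes UX-Lite only as a two-question usability metric and does not walk through scoring. As a rough sketch, assuming each question is answered on a 1 to 5 agreement scale and each rating is linearly rescaled to 0 to 100 before averaging, a score could be computed as below. The item names (`ease`, `usefulness`), the scale, and the sample responses are assumptions for illustration; check the published scoring for whichever instrument you field.

```python
def ux_lite_score(ease: int, usefulness: int, scale_max: int = 5) -> float:
    """Rescale two ratings (1..scale_max) to 0-100 and average them.

    Assumed scoring: a linear rescale of each item, then a simple mean.
    """
    for rating in (ease, usefulness):
        if not 1 <= rating <= scale_max:
            raise ValueError(f"rating {rating} is outside 1..{scale_max}")
    rescaled = [(rating - 1) / (scale_max - 1) * 100 for rating in (ease, usefulness)]
    return sum(rescaled) / len(rescaled)


# Hypothetical survey responses as (ease, usefulness) pairs.
responses = [(5, 4), (4, 4), (3, 5), (2, 3)]
scores = [ux_lite_score(ease, usefulness) for ease, usefulness in responses]
mean_score = sum(scores) / len(scores)
print(mean_score)
```

Reporting one 0 to 100 number next to CSAT keeps the two metrics on a comparable footing for stakeholders.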

So one of the research teams, or organizations, I guess, that I looked at was Fidelity.

And it was this great presentation. This was around COVID time, so I was watching these as videos, and it's why coming to these conferences is so incredibly important. Tell that to your boss. It really focused on understanding how to measure and quantify UX, specifically research. And their VP of research really focused on a couple of things. The first one was being proactive and reactive.

For a lot of UX organizations, we're more reactive. We get dictated, hey, we need this request.

We get told we need to do this. Can you help us with that? And that's fine. It's exactly what we should be doing. But it's not where mature UX organizations lie. It's in that balance of that future state.

Being proactive, that's where the innovation starts. That's where you can be a little bit more strategic with the work that you're doing. And it's not about proactive being better than reactive. It's a balance.

We are beholden to our users, which is our internal partners.

So being able to identify gaps and look at the really cool stuff that we haven't done, being proactive.

But then also making sure that we're addressing the pain points of our PMs and our engineers and our UX designers.

The other thing that she put together, if you're familiar with the double diamond, this is kinda something similar in three phases instead of two, but she really wanted to focus on three different phases that all of us should be really thinking about.

Are you focusing on the right problem? Do you understand what your users are going through? What pain points? What difficulties? What opportunities are there? What unmet needs that we should be thinking about?

Now that we have the right problem, did we come up with the right solution?

Are we evaluating? Is it validating or invalidating? Did we do right?

And then once we have that right solution, did we actually implement it right? And this can be done with a prototype, or this can be done with engineering actually having code in play, doing live-site testing.

For us, no surprise, especially three months in, we were doing a lot of "done right." And it's not that one is better than the other, but we were too late for a lot of the stuff that we were doing.

We hear about it a lot. We wanna fail fast, make sure that we pivot early, whatever synergy and business terms we wanna actually use. But again, it's finding that right balance. It's not necessarily about those numbers of twenty five and thirty five and sixty percent. Those were just what we had put in. It's kind of arbitrary. It's up to you how aggressive you wanna be.

What's important is that you're balancing the different methodologies.

The sooner that you can test, test early, test often, the sooner you can pivot and, again, drive the direction of what your users actually want you to build because they're not gonna necessarily tell you. We hope for that, but it never happens.

There's that underlying theme. And if you're doing it early, you're more likely to succeed.
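To see how a quarter's work splits across the three phases (right problem, right solution, done right), each study's method can be mapped to a phase and the shares counted. This is a minimal sketch: the method-to-phase mapping and the list of studies are hypothetical, and as the talk notes, some methods overlap phases in practice.

```python
from collections import Counter

PHASES = ("right problem", "right solution", "done right")

# Hypothetical mapping; real methods can belong to more than one phase.
PHASE_BY_METHOD = {
    "interview": "right problem",
    "survey": "right problem",
    "concept test": "right solution",
    "preference test": "right solution",
    "usability test": "done right",
    "live-site test": "done right",
}


def phase_share(methods):
    """Return the percentage of studies that fall into each phase."""
    counts = Counter(PHASE_BY_METHOD[method] for method in methods)
    total = sum(counts.values())
    return {phase: (round(100 * counts[phase] / total) if total else 0) for phase in PHASES}


# Hypothetical quarter that leans heavily on late-stage testing.
quarter = [
    "usability test", "usability test", "live-site test", "concept test", "interview",
    "usability test", "usability test", "preference test", "usability test", "live-site test",
]
shares = phase_share(quarter)
print(shares)
```

Comparing these shares against whatever targets the team chooses makes the imbalance visible; the targets themselves are a judgment call.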

And so taking what she had pulled together, I wanted to look at this to frame the methodologies of, like, okay, what does that actually look like? I do strategic work, but I'm a very tactical person.

So I'm looking at what the right problem is. They're pretty much the same typical research methodologies that we use, and the same goes for right solution and done right. You're gonna have some that overlap, and that's completely fine.

But it's there just as a reference so that when future me is actually documenting all this stuff, I have a way to look back on this and also communicate to our partners. And they, don't get me wrong, are a very important piece to the work that we're doing.

But on the day to day, you're working with product directors, product managers, mid-level managers for the most part. We work with very cross-functional teams. So you need to also appeal to them. And so part of my job when I was coming to Cox, and we're consumer facing, so we have Autotrader, Kelley Blue Book, Dealer.com, was really focusing on rebuilding the foundation of the research that we were doing and understanding the individual jobs to be done and the different tasks that our users were doing when they were actually coming to our websites, and how we were performing. Looking at the current state and seeing whether we were doing good or not doing so good.

And there's so many different metrics that we can utilize. Are we gonna use all of this stuff? I wish.

But even if they take one or two metrics from there, that quant, that qual, even the attitudinal and behavioral data that we have there, this is helpful for us to then measure against over time.

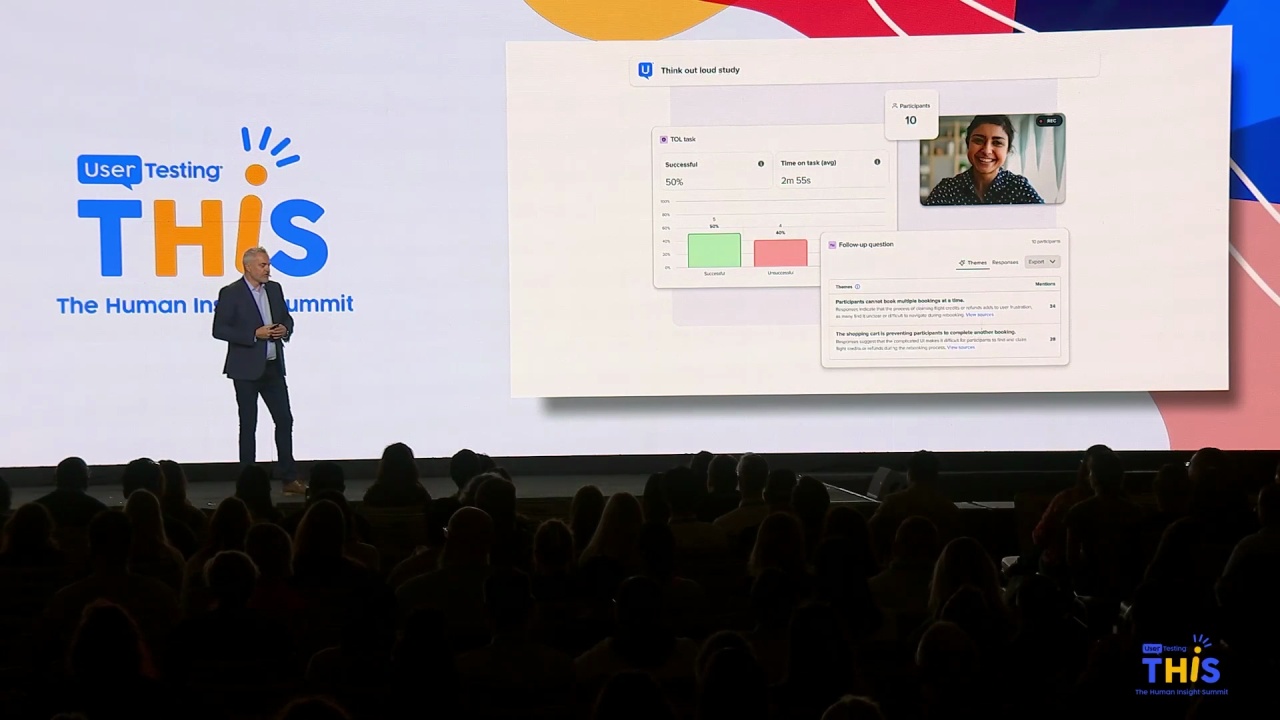

And this was actually all done within UserTesting.

So building the house.

This is the longest part of the journey, not necessarily this conversation.

But this is the longest part of the journey because this is where all of your hard work is actually in play.

This is when you guys are doing the actual research. This is when you're doing the collaboration. This is when you're setting up all the meetings that you potentially need to have. It's actually just one.

But this is the part where you're gonna spend the most time, and it's incredibly important that you get it right. You've done the hard part. You set the stage. You need to know what metrics you wanna use.

I just threw all of them in a blender and just hoped for the best. And eventually, when one resonated with some folks, that's what I ended up using.

So we have something that's called a learning plan.

What is a learning plan?

Great question. So what a learning plan is, is really making sure that we understand what's happening on our partners' road map and what's important to them. Again, sharing that same language with them so that we can understand where we can actually be helpful and valuable. And again, in that space, the more you speak the same language, the more you partner and build with them, the more likely they're gonna help you at the end when you're trying to calculate how good you did and what impact you actually had.

And what that looks like is making sure that we're all on the same page. Again, this is not just for product and UX to be figuring out. We're having the engineering leads here. We're having other research teams here.

This is really focusing on us being able to democratize the research. We are a very small team of three and that includes myself.

So we have three different huge brands. That means twenty plus products for three people. So you can imagine I'm very, very busy.

So I can't do it all on my own and I don't have to.

That's where I'm working with my partners, utilizing UserTesting. Again, shout-out to UserTesting. Creating templates within the tool so that my designers can actually utilize those templates and do their own concept testing, their own preference testing, their own usability testing so that I don't have to.

We're identifying the roadblocks early. We're making sure that it's efficient, and we're seeing that we have the time dedicated for that. Sometimes we don't wanna do research for the sake of doing research. We wanna make sure that we're doing it for the right thing. Again, right problem, right solution, done right.

What does that actually look like? It's gonna be different for every one of you, but it doesn't have to be this long, thought-out process. I literally put a meeting on everyone's calendar. Again, six to eight people. PM, PO, engineering lead, UX designer or architect, or both, and whoever's in research. And that includes our team, the UX research team, but we also have a research and marketing intelligence team.

We also have our competitive intelligence. We have product analytics, live site testing.

If they're interested in joining us, we wanna partner with them. Again, we're not doing this alone.

But it can be thirty minutes. It can be sixty minutes. I would say it can go longer than that, but no one ever goes into a meeting and is like, I wanna do this longer.

What folks are gonna be doing during that conversation is going through the road map and understanding what they're working on. Again, your job is to support the work that they're doing. We're never going to get that gold star and be front and center. That's just not the role that we play.

But what we are gonna do is support our stakeholders, advocate for our users, and make sure that we're collaborating with the right partners at the right time. Now this is not the only touch point that we have with them, but it is the one at the beginning, because it helps us map out what the rest of the quarter may look like. And things change. Research begets more research.

You will fail fast and realize that something did not pan out well. And so you're gonna decide not to go in a certain direction at all. That's okay. Locking in a plan is not what this is there for. It's there so that you can build that relationship, have that conversation, say the things that you maybe don't wanna say in a Slack, and move forward.

But the most important part and the hardest part is documentation, and you don't need a third party tool.

You can use Excel. And maybe that's my own bias of loving Excel and what it possibly can do for you. But the reality is it doesn't have to be pretty. It doesn't have to be great.

It just has to be done. Documenting the key things that are important to you. What OKR are you actually impacting? Again, seeing what impact it's going to have.

What's the timeline gonna look like? Who's responsible for this? What methodology did you use?

So on and so forth.
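The documentation described above needs nothing more than rows with a handful of columns. As a minimal sketch, assuming a hypothetical `StudyRecord` with the fields mentioned in the talk (OKR, timeline, owner, methodology), the log can be written out as CSV that opens directly in Excel. The field names and sample rows are illustrative, not the team's actual template.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class StudyRecord:
    """One row of a research log (hypothetical column set)."""
    study: str
    okr: str
    timeline: str
    owner: str
    methodology: str


def to_csv(records):
    """Serialize records to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(StudyRecord)])
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))
    return buffer.getvalue()


# Invented example rows.
log = [
    StudyRecord("Search filters", "Improve usability score", "Q1", "UX research", "usability test"),
    StudyRecord("Trade-in flow", "Increase lead conversion", "Q2", "Design", "concept test"),
]
csv_text = to_csv(log)
print(csv_text)
```

Keeping the column set small is deliberate: a log that takes a minute to fill in is more likely to actually get filled in.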

And those first two are stuff that we've had, and they've transitioned a little bit over time. Again, not that pretty. And then I stole this from somebody on the Internet who did a way better job, and I was like, I'm gonna steal that, because that's what research is: stealing other people's work, with credit.

And they did a really great job of incorporating color, but also incorporating key points that were important to them. Again, quantifying what was valuable for them so that it's a little bit easier for future them. And past Ruchi and current Ruchi always look out for future Ruchi, usually.

So now that we've done all of that, the hard part.

Now we're near the end of the year, of course. This is the time to really focus on this. Now I wanna get to the party, but not really, but sort of.

How do you communicate all the amazing work that you've already done? Because we already know that we do some amazing work. Without us, we'd probably be in a position where, basically, churn is not doing so great, our users are going to one of our competitors, conversion is down, all of that.

Building relationships is the number one thing. Communicating your value is the second.

And that can also be difficult to do. Again, we talked about it before. Keep it stupid simple.

Less is more. We tend, as researchers, to overcommunicate. And I know what you're gonna say, because you're like, no, we don't. We do.

We get into the weeds, we talk about the equations, we talk about z-scores, which is a true conversation I had with other researchers. And I'm telling you right now, nobody cares.

The only people who care are other researchers and that is not who our target audience is.

Use the right graphs.

It seems like a duh, but you will be surprised at the number of folks who don't necessarily use the right graphs. And I get it. Bar graphs, pie charts, donut charts, line graphs, all of that, are not always the most fun to constantly look at. But just as a side note, I remember doing a design sprint. And one of the experts put a box-and-whiskers graph in their design. Does anybody know what that is?

Yeah. Yeah. When's the last time you guys used it?

When is the last time you can actually explain that without going to Google?

I have a background in biology, which I don't do anymore, and I had to Google what the heck that means. Now the end users, they're not pharmacists, and it was a financial software solution specifically in pharmacy. So if I don't understand what that means and you can't explain it to us, nobody knows what that means. Don't use the box and whiskers, use the damn bar graph.

Collaborate with designers or others.

And so I put this here, and shout-out to Akiko too.

I'm a researcher through and through. I can create a heck of a good PowerPoint.

But every single time I have a designer who ends up looking at my PowerPoint, they make it ten times better. And it would honestly irritate me if it wasn't so much better. It's a very humbling experience.

But collaborate with them. That's what they do. They're better than you for that. That's okay.

They can make you look better, look like you're an expert, if you collaborate and work with them. They wanna help. They are in it just as much as you are. And it's not just designers, but they are definitely a big part of that.

Color.

Making sure that you utilize color where you can. Also thinking about accessibility.

Don't overdo it, but understand that it can be a very impactful part of showcasing data that's important to our stakeholders.

Talk about accessibility. It's so incredibly important, not just from a business-value standpoint but from a moral one. And it's actually a lot easier than you think.

Well, some parts. And this is something that I'm constantly learning about.

One way of doing that is instead of just using color in a graph to make a point, let's say, like, a line graph, you can actually use different symbols, different dashes or dots to represent something similar, and you're not having to worry about accessibility. That was something I learned last year, and I started incorporating it into our reports because it was a simple, easy fix. We can incorporate color. Absolutely.

But it doesn't have to be the only way that we're doing it, and it's so incredibly easy.
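One way to apply the tip above is to give every series in a line graph its own dash pattern and marker, so the chart still reads without color. The sketch below uses only the standard library; `assign_styles` and the series names are hypothetical, and the style strings follow the conventions that plotting libraries such as matplotlib accept for line styles and markers.

```python
LINESTYLES = ["-", "--", "-.", ":"]   # solid, dashed, dash-dot, dotted
MARKERS = ["o", "s", "^", "D"]        # circle, square, triangle, diamond


def assign_styles(series_names):
    """Give each series a unique (linestyle, marker) pair, independent of color."""
    limit = len(LINESTYLES) * len(MARKERS)
    if len(series_names) > limit:
        raise ValueError(f"only {limit} distinct styles are available")
    styles = {}
    for i, name in enumerate(series_names):
        # Shift the marker on each pass through the line styles so pairs never repeat.
        styles[name] = {
            "linestyle": LINESTYLES[i % len(LINESTYLES)],
            "marker": MARKERS[(i + i // len(LINESTYLES)) % len(MARKERS)],
        }
    return styles


# Hypothetical series for a quarterly report.
styles = assign_styles(["Satisfaction", "Usability", "Ease", "Usefulness", "Benchmark"])
for name, style in styles.items():
    print(name, style)
```

With matplotlib, for example, each series could then be drawn with `ax.plot(x, y, label=name, **styles[name])`, and color can still be layered on top as a second cue rather than the only one.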

Being direct and clear with words. So, obviously, I'm a talker.

So I have had to learn when to shut up. I've had to learn when to keep it simple, and I've had to learn who my target audience is. At the end of the day, you guys are kind of forced to be here, sort of. You can leave at any time, but please don't.

But not everyone has that luxury. When you have ten minutes or five minutes with leadership, use that time wisely. Know what it is that they need to know. Being direct, being clear, being quick, and keep learning. I am constantly learning. I don't have it figured out.

I wish I did, but I don't.

And in fact, in some cases, it would make me a bad researcher if I did. I'm constantly learning from people who may not be as mature as our UX research organization. I am constantly learning from people who are better than us. That's the point. The more we learn, the more we talk, the more we share, the more we collaborate, the better we are as part of our industry.

And these are just some examples that I have.

They're actually real examples that we use.

What you'll see on the right hand side is what we originally had working with a group of researchers.

Analytical researchers really focused on data analytics, statistical significance. This is where the z score came in.

And try explaining that to people who have no idea what that is.

Nobody wants to talk about that. It becomes more of a conversation than it needs to be, when really it's just, give me what that number is. Are we good or are we not good?

So we changed it. We made it so that color was a part of that, but also looking at the location of that score to help with understanding where we were.

And it's always evolving. It started with putting CSAT and changed to satisfaction. It started with UX-Lite and changed to usability. It's about understanding who your target audience is. Nobody wants more acronyms if they can help it.

Ending us all off: if you learned nothing from any of this, apologies. You can blame UserTesting.

But, hopefully, there were at least a couple of tidbits that you can take away, really focusing on starting with the basics. Again, understanding that relationships are number one.

Conversing with your peers is incredibly important.

It's not a job that I signed up for, but it was a job that I had to have.

We are advocating not just for our users, but a lot of times for ourselves. A lot of organizations don't have a lot of UX researchers as a part of their company.

And it's exhausting. I get it. But building that relationship first makes things a heck of a lot easier.

Democratize the research. Again, small team.

Do not have time for all the things.

Make people work for it too.

Utilize what we have. We have UserTesting. We have the ability to create templates. And as any researcher knows, and I'm gonna say the quiet part out loud, we do the same type of questions every single time.

That's what research is. I steal from my own stuff.

That's how it should be. So why am I not helping our designers or PMs do the same exact thing? Using it as a template, not necessarily being the end all be all, but using it as a starting point.

We do it for ourselves, so why can't we help them do that?

Knowing who your target audience is.

This would be a very different conversation if there's engineers in the room.

But since I'm speaking to my people, again, making sure that we're speaking their language, making sure that we're really focusing on targeting the way that we speak, the way that we communicate to the right person.

My always little caveat, there is no one right way. I've done a lot of research into looking at what is the right way. And this is where it gets very annoying for a lot of researchers because we're so accustomed to having a methodology or a technique or a way that we usually go. That's how research usually is. But the fun part, the creative part is that there is not one way. You're gonna get sometimes some of those articles that are gonna tell you do it this way. Here's the equation, and it's not gonna work for you, and that's okay.

That doesn't make the work that you do bad. It's just different.

Keeping it simple.

Not gonna say anything more to that because that kind of proves my point.

And then documentation is key.

This is a marathon, not a sprint. This took years. Three years into it and we're still constantly learning.

Thank you. Questions. I have, like, ten seconds.

Run, Mike. Run.

Hi. I wanted to ask about your documentation.

Mhmm.

One of the things we've tried to do is create an insights repository, like, an overall, here's all of the stuff.

I couldn't tell from your Excel whether that was intended to be, like, an overall repository. So just maybe a little bit about how you do that.

Yeah. Absolutely.

That's a great question. That's another conversation that I think a lot of researchers struggle with: that repository, and what does that actually look like.

I had an interesting conversation with our IT security team, and she was absolutely aghast when I told her the SharePoint search feature was subpar. And I don't know if she had, like, shares in Microsoft or something, but she was, like, aghast. She was like, no. No. It's not true. I'm like, have you used SharePoint?

Nobody likes SharePoint. We use it because we have to. We actually use Dovetail, and it's been a company initiative to try to get all of our business portfolios to use Dovetail. And so for us, we are actually working on that now.

We are getting all of our research for the past two years into Dovetail, and that includes incorporating some of this. Now there is gonna be a little bit of a switchover to teach our PMs and our UXers and whoever else we communicate to, so that they eventually start to use Dovetail. And there is gonna be some level of SharePoint. I don't know why.

Well, that will still be there. But we're also not at that stage yet, because we have all the other research teams. Part of my want is to make sure that we're not just incorporating UX research in there, but we're incorporating our research and marketing intelligence, our competitive intelligence, all the other folks. But right now, we're incorporating it into Dovetail.

More to come on that. We'll see how that works out. We're gonna be doing some testing with our users as researchers to see how do you actually search for this stuff. Are we actually inputting it correctly? Are we tagging correctly?

And for us, that's how we're kind of approaching it, for the time being. Does that answer your question?

Cool. We're gonna do one more question before we break for lunch. I'm sorry for everybody else.

Yeah. I know.

There's gonna be time here in the room if you wanna ask us questions, but here we go.

So my question was about democratizing research. How would you balance that with the idea that in a low-UX-maturity organization, people might then conflate, you know, the ability to do research themselves with not needing UX, or UX research in general, at all?

That's a great question.

That's where, I think, that particular slide comes in, looking at the vast array of methodologies that we have. So I only actually democratize specific types of methodologies, for a reason.

Interviews, if they can handle it, and a big part of it is training.

Concept testing, usability testing, and preference testing. That's it. There are so many things that are out there that we already do. Nobody knows what tree testing is outside of our department. I don't know why. Or card sorting, where they think it's something different. So there's so much more that's out there, and a lot of it is just education.

There have been so many times where people were like, well, we just do usability testing. I'm like, no, you don't. It's not just usability testing. So it's basically reeducating folks about the vast array of what we do have and really focusing on partnering with them to make it a little bit easier.

There was a lot of training involved, actually having lunch and learns with UserTesting for our teams so they become accustomed to what they're capable of doing. We made screeners for them. We made it easy. We did templates.

We just did plug and play as much as we possibly could. We even made it so that they would have to go through a researcher in order to actually launch the test. And it wasn't until they were actually comfortable and doing a decent enough job of utilizing those templates that we finally let them kind of go. So hopefully the answer to that question is that watching them go through the process and showing them where they may not be measuring up, and that it's harder than it looks, which is, again, a humbling experience, helps with the education process.

But also showing them that, hey, this is not all that we do. There's so much more. And there's nothing more humbling than watching a user struggle for two minutes, not knowing where a button is. Use that to your advantage.

Answer your question?

Excellent.

Thanks so much, folks.