Building a customer-obsessed culture at Centene: a recipe for connecting insights to bottom-line business results

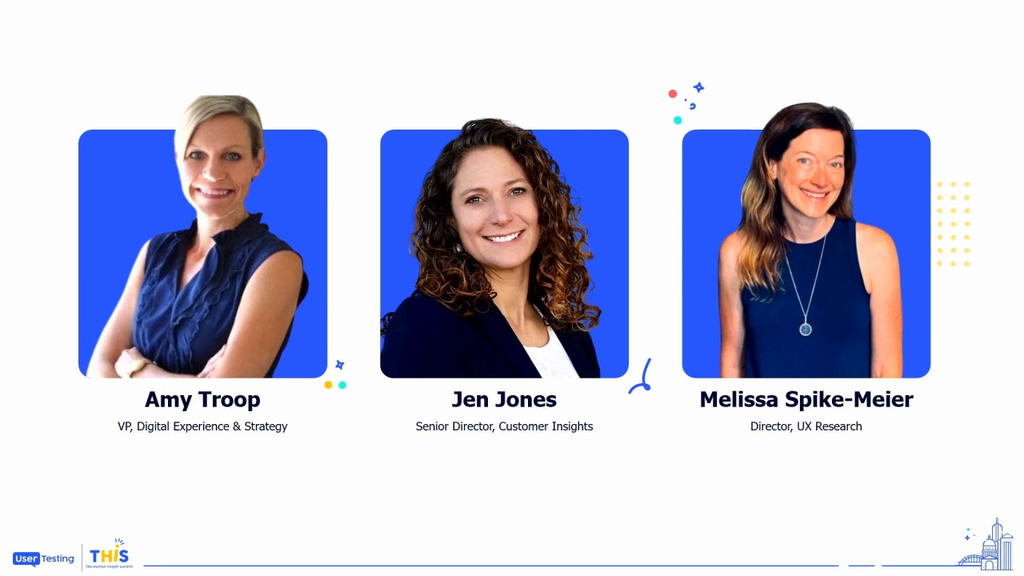

Amy Troop

Vice President Digital Experience & Strategy, Centene

Jen Jones

Senior Director of Experience, Centene

Melissa Spike-Meier

Director of UX Research, Centene

Join a panel discussion with leaders at Centene to learn how their team is developing a customer-obsessed culture. Experts on the panel will discuss the team’s journey to develop and implement a model that measures what matters to customers. The speakers will discuss how they combined customer insights and data, and unified customer and user experience teams to deliver a holistic understanding of customer experience. Discover Centene’s strategic approach to embedding these insights into everyday business practices, driving business value and organizational change.

I feel like this weird owl thing needs a little explanation.

So what I am really obsessed with is distracting my two girls. I have a tween and a teen, eleven and thirteen years old, who are on the hormonal ride, and you seriously cannot rage while you're listening to weird Alkopamania people. Okay? Look it up. It is hysterical.

Yes. So as Amy said, I am Amy Troop, and I am the vice president of experience and digital strategy for Centene. We are absolutely thrilled to be here today in Austin among so many fellow UX and CX practitioners.

Today, Jen Jones, who leads our CX insights practice, and Melissa Spike-Meier, who leads our UX research practice, and I are gonna share with you some of the approaches we've taken to really begin to alter the DNA of our company and begin to transition it into a customer-obsessed culture.

I do wanna admit and note that this is a journey, and we really are at the very beginning stages.

So before we dive in, by a show of hands, who here had actually heard of Centene before today's session?

Oh, wow.

Okay. I heard. I think that was about a third, and that's not surprising to me.

So Centene is one of the biggest companies that you have never heard of. We were founded as a single Medicaid health plan back in nineteen eighty four in Wisconsin.

And now here we are forty years later, and we sit at number forty two on the Fortune five hundred list. We serve roughly ninety million members across all fifty states, with about one hundred and sixty billion in revenue.

So there's a couple of reasons you've never heard of us. First is our brand strategy.

Centene is a corporate brand. We don't sell any health plans or operate any health plans under that brand name. They all bear their own distinct brand names. And then the second reason is that we specialize in government-sponsored as opposed to employer-sponsored health care. So we are the number one provider of Medicaid health plans, of marketplace health plans, and then what we refer to as dually eligible Medicaid-Medicare health plans.

So Centene was born out of this b-to-g business model that really did not necessitate a focus on experience.

The company grew historically, both organically and through acquisition, but really focused on creating hard value through things like administrative cost savings and efficiency, not soft value drivers like experience and satisfaction.

And so when we embarked on this endeavor a couple of years ago, we said to ourselves, hey. In order to change the culture of Centene, let's start by embracing it.

So, you know, we recognized the fact that the company is composed of actuaries and epidemiologists who really trust in math and models. And so we leaned into this, and we leveraged it to empirically tie experience to hard business value. This morning, we're gonna share with you how we've really quantified and operationalized experience at Centene.

First, we look to answer these questions.

Does experience matter in terms of business value, and which factors matter the most?

How should we measure those factors such that that measure is actually predictive of that value?

And how do we translate their impact into dollars and cents?

To answer these questions, it definitely requires the effort of some smart statisticians, but it's pretty straightforward.

Then comes the trickier part. How do you begin to actually operationalize this all the way down to your UX practices?

Jen and Melissa are gonna share the steps there. I'll talk through the modeling.

So to answer these questions, we first have to start with the customer that we're talking about and the business value we're looking to realize.

We started with Ambetter, which is our marketplace line of business and focused on the business value of member retention.

Let me talk you through our methodology.

So first, we fielded a large scale quantitative study of those members who renewed and those who did not, asking them to evaluate their experience on the factors of ease, trust, satisfaction, and likelihood to recommend.

We then ran a regression analysis to determine the relationship between their evaluation of their experience and whether or not they stayed or left.

We isolated specific moments of the experience to identify the relative impact each of those individual moments had.

And then we confirmed which measure was actually predictive of that renewal behavior.

And then lastly, we did the math to translate the experience measure into both top line revenue and bottom line income.
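
The regression step described above can be sketched roughly as follows. This is a minimal illustration and not Centene's actual model: the scores and renewal flags are invented, a single overall score stands in for what was presumably a multi-factor analysis, and `fit_logistic` and `renewal_probability` are hypothetical helper names.

```python
import math

# Hypothetical survey data (invented for illustration): each member's
# overall experience score and whether they renewed (1) or left (0).
scores = [2, 3, 4, 5, 5, 6, 6, 7, 8, 9]
renewed = [0, 0, 0, 0, 1, 0, 1, 1, 1, 1]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(xs, ys, lr=0.1, epochs=5000):
    """Fit P(renew) = sigmoid(b0 + b1 * (x - mean)) by batch gradient descent."""
    mean = sum(xs) / len(xs)
    centered = [x - mean for x in xs]  # centering keeps the descent stable
    b0, b1 = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        g0 = g1 = 0.0
        for x, y in zip(centered, ys):
            err = sigmoid(b0 + b1 * x) - y
            g0 += err
            g1 += err * x
        b0 -= lr * g0 / n
        b1 -= lr * g1 / n
    return b0, b1, mean


def renewal_probability(score, b0, b1, mean):
    """Predicted likelihood to renew for a given experience score."""
    return sigmoid(b0 + b1 * (score - mean))


b0, b1, mean = fit_logistic(scores, renewed)
print(f"slope on experience score: {b1:.2f}")
print(f"P(renew | score=3): {renewal_probability(3, b0, b1, mean):.2f}")
print(f"P(renew | score=8): {renewal_probability(8, b0, b1, mean):.2f}")
```

A positive slope is what "experience matters" looks like in a model like this: higher scores go with a higher predicted likelihood to renew. In practice a statistics library would replace the hand-rolled fit.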

So to answer our first most basic question, does experience matter?

Yes. Experience matters. And I'm I'm certain this is welcome news to everybody in this room.

So members who scored their experience at a six or higher were more likely to have renewed.

Okay. Great. But we know that not all experiences are created equal, not all moments.

Which aspects of the experience have the greatest influence on that score? So the regression analysis also described how much effect each of the experience factors had on the score and, in turn, that likelihood to renew.

We found that there were four factors that had the strongest influence on that renewal behavior, getting help from customer service, getting care approved and paid for, getting an appointment with an in network provider, and understanding plan coverage.

These became our moments that matter.

We use moments that matter as a very specific term now, and we say we will only assign this term to, you know, specific experiences that we have proven have a material influence on business value.

These are the areas that we must maniacally monitor. These are the areas where we should make our investments.

You know, in an environment where you're experiencing daily fires, and I don't know if any of you have this scenario in your company, the moments that matter really give us a way to focus our efforts and to prioritize our investments.

Next, we looked at how effective each of the measures of ease, trust, satisfaction, and NPS were at predicting a member's likelihood to renew.

We also evaluated a composite score that combined ease, trust, and satisfaction.

So the headline here is that, independently, trust and NPS were the best indicators of member retention at this relational level. Remember, this was a large scale quant study, really, trying to understand the brand relationship.

However, the CX composite score, that combination of ease, trust, and satisfaction, also performed very, very well, and it had this added benefit of being able to tie what's happening at the transactional level, everyday interactions, up to the relational level. And we really wanted to establish a framework where we could tell, you know, what's happening day in and day out, and how we expect that to ladder up to the overall relationship with our brand.

So, and this is, I think, common sense, but if you think about it, how easy or difficult is it for you to accomplish what you want to on a website, or how helpful is it when you talk to customer service? That directly ties to your satisfaction with your experience, and then in turn, your trust in a brand.

And so, for this reason, we have chosen to implement the CX composite score as our enterprise standard member experience metric. And Jen is gonna talk a little bit more about how we've done that.

Okay. So finally, we did the math to translate the impact of a change in experience to revenue and income.

I took out some of the most proprietary data on this slide, but for Ambetter, the model showed that a one unit increase or decrease in that composite score corresponded to a two point eight percent increase or decrease in the likelihood to renew.

So then from there, all you really need to know and understand is the size of your customer base, in our case, our membership base, and the lifetime value of that member. So what's the, you know, average revenue per member, average income per member? And then you can do the math, and you can equate improvements in CX to top line and bottom line.
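
As a rough worked example of that math: the only figure taken from the talk is the two point eight percent change in likelihood to renew per unit of composite score. The membership size, revenue per member, and income per member below are invented placeholders, `retention_impact` is a hypothetical helper, and treating the two point eight percent as percentage points of the member base is a simplifying assumption.

```python
def retention_impact(members, revenue_per_member, income_per_member,
                     composite_delta, renewal_lift_per_unit=0.028):
    """Translate a change in composite score into retained members and dollars.

    Assumes the lift applies as percentage points across the whole member base.
    """
    retained = members * renewal_lift_per_unit * composite_delta
    return {
        "retained_members": retained,
        "revenue": retained * revenue_per_member,  # top line
        "income": retained * income_per_member,    # bottom line
    }


# Hypothetical inputs: 100,000 members and a half-point composite improvement.
example = retention_impact(
    members=100_000,
    revenue_per_member=6_000.0,
    income_per_member=300.0,
    composite_delta=0.5,
)
for name, value in example.items():
    print(f"{name}: {value:,.0f}")
```

A negative `composite_delta` gives the downside case, which is often the more persuasive number in a business case.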

Now we've just looked at the model. This is our model for the marketplace line of business. We have also developed a model for Medicare, and I think it's important to note that the influence, the relative influence that experience had on retention does vary by line of business, and the moments that matter are distinct and are unique to each line of business.

So it's really, really essential that you do this analysis for each of your customer types.

So with these models, we are now able to demonstrate and articulate, you know, the return on investing in experience to our super hard numbers minded organization.

We can use the models to focus the attention of the organization on the experience factors that matter the most to each of our customer types.

We can use them to weigh investment options for improving different aspects of the member experience, and we can use them to build out substantiated business cases for making those investments.

We are currently working with our partners across lines of business to standardize the way in which we measure CX and to use the new CX composite score to set their experience goals for twenty twenty five.

It is no easy feat, as Jen will attest to next. So when I hired Jen roughly two years ago, one of the very first efforts she embarked upon was to go around the company and to inventory all the different measurement methodologies that were in place.

And as you can imagine, it was all over the map. So some of our lines of business were using CSAT. Others were using NPS.

And then even within NPS, she found use of a seven point scale, a ten point scale, and an eleven point scale.

So, Jen, now that we've, you know, seen how we can identify and how we should measure moments that matter, can you talk us through the trickier part of this exercise, which is how to actually operationalize it?

Thanks, Amy. I sure can. And thank you for that memory because I have that. It's a little PTSD, but it's the wild west of what we inherited.

And so as we applied this new metric, as we applied this new model, Amy, Melissa, and other leaders, we sat down and we said, what is our vision? What is the framework we wanna create for how to truly infuse this into our business and make it work? So we then documented our listening posts. And thankfully over the last two years, we've stood up listening posts everywhere we possibly can in the customer journey.

When Amy said you haven't heard of Centene, that's because we have over three sixty websites across all of our brands. And yes, we have intercept surveys on every single one. I'm also happy to tell you that there's a post call survey after a phone call to customer service across all of our brands. But we also had to make sure that we tied that to our UX study results and to our relational instruments.

My team is largely responsible for the CX, where my sister here Melissa is responsible for the UX. And we are partners in crime because while my team's out doing more of that generative work, her team is constantly looking at the evaluative side of it. So our annual survey, our monthly pulse survey, we wanted to make sure that this all tied together. And you can imagine after we got all those results back from the actuaries and from the statisticians, we're like, I sure hope this works.

So we sat down and looked at the data, and we went retroactive and said, well, what happened this past year? So month over month, when we looked at our transactional survey scores in aggregate and our relational survey scores, thankfully, whew. I remember the moment this happened. I was, like, breathing a very, very big sigh of relief because those lines on that graph lined up.

And that's the alignment we were hoping to have. But our groups pride ourselves on a tagline, which is delivering actionable insights. And this aggregate score isn't enough to do that. As Amy talked about, we now have the moments that matter.

So looking at those, we wanted to make sure that those categories of tasks were lining up in their scores at this composite, both relational and transactional. You can see for the top four that we listed on that bar chart, they in fact did.

That allows us the confidence going forward that when we see a score dip in the month over month tracking of it, we can absolutely get to the root cause of what happened and quickly get something into testing to see how we can make an improvement happen.

So here's a little legend that we're providing our executives on how they can remember that the CX composite score matters. It matters to the business bottom line at Centene, and it matters to them because they're going to be setting a retention target. In our Ambetter line of business, which Amy spoke of, they're looking at somewhere around seventy percent. We haven't finalized it yet. We're still in the fourth quarter of doing that work. But if they are setting that retention target at seventy percent, we are telling them that our dashboard should show a target line of four point o for our straight average of trust, ease, and satisfaction.
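
The composite and its target line can be sketched as below. The straight average and the four point o target come from the talk. The one-to-five response scale, the sample responses, and the names `cx_composite` and `gap_to_target` are assumptions made for illustration.

```python
from statistics import mean

TARGET = 4.0  # dashboard target line mentioned for the Ambetter example


def cx_composite(ease, trust, satisfaction):
    """Straight average of the three experience measures for one response."""
    return mean([ease, trust, satisfaction])


def gap_to_target(responses, target=TARGET):
    """Average composite across responses, minus the target line."""
    composites = [cx_composite(*response) for response in responses]
    return mean(composites) - target


# Hypothetical (ease, trust, satisfaction) responses on an assumed 1-5 scale.
sample = [(4, 4, 4), (3, 4, 5), (2, 3, 4)]
print(f"gap to target: {gap_to_target(sample):+.2f}")
```

A negative gap means the current period sits below the target line, which is the cue to dig into which moments that matter are pulling the average down.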

What we did was then provide the numbers from this year's annual monthly and ongoing surveys so they could see, hey, we're not so far off. Four point o is achievable next year. We need to work hard though to analyze where our root issues are occurring so we can have a very quick impact on those month over month as they come in.

So that's the what. That's the what of operationalizing this new framework we've developed.

I think that's easier than the how. The how is how we impact our culture. How do we get in and convince people who are married to NPS, or who truly love the old way we did top two box CSAT scores, that this is the way they want to work? We did it by looking at these three parts of our culture.

We looked at process, the process for how people make decisions at Centene.

We looked at relationships.

And Johan actually referenced this this morning when he talked about relationships that need to exist across the customer journey to make this come to life. And then finally, insights.

Making those actionable then allows us to pull that voice of customer through the model and make sure that everywhere we go, we are truly gaining alignment in what should be the priorities for the improvements we make.

So I hope you'll play along with me. I have a little illustration on the first one. So when it comes to process, do you know how your leaders make decisions?

Do you know how the prioritization happens when an enhancement goes in and a change gets made? So to illustrate this, we'll play a little trivia game. I have a question for you. Here's your challenge.

When I throw the question on the screen, do not touch your phone. Do not Google this answer. Trust your gut. Tell me if you know which band is the most popular of all time.

Just think about it. Don't use a device.

I'll give you a spoiler alert that when I was rehearsing this in front of my college age daughter, she's like, definitely Taylor.

Taylor is an artist, not a band. So let me give you a list because Taylor's not on the list, Danny. And among these seven now, maybe your brain's kind of switching over a little bit. Like, wait. I thought for sure I knew it, but my band is not on the list.

So let's ask this question a different way because if we were truly in a UX or a CX study, we would be debating as a team of researchers, was this specific enough? What do we mean by popular?

Let's add a caveat.

What if I asked you for two different metrics? What if I asked you about record sales or concert sales?

Now we have something much more objective, and the answer is the Beatles and U2.

That is the objective truth. You can look up that statistic. You can find where the data lives, and you can point to it and trust it. Your opinion, or my daughter's opinion of Taylor, is the subjective truth.

That is truly your opinion.

And in my world, our team likes to have a little joke. We call it the n equals one syndrome. And I bet this has happened to you. It's Monday morning.

You're just trying to get that second cup of coffee going, and suddenly your phone rings, and it's an executive. Or in my world, the chat rings because we're all virtual. So the chat's popping up. Hey, Jen.

Do you have a minute?

It's like, okay. What is it? Well, this weekend, I was at a party, and I talked to one of our members.

I know exactly what's wrong with our website.

Oh, good. We have n equals one. I was waiting for one customer to tell us how to solve all the problems. So my team laughs about that, but it's truly something to think about.

Are your insights objective or subjective? Is that kind of groupthink occurring by one really persuasive executive who has their pet project or their friend who's a customer? That inside out thinking can be problematic if that alone is how your decisions are made. It's a little bit more siloed, relies on popular opinion instead of the facts that we're all busy gathering in our research roles.

That combined with outside in thinking is really what we're striving for. That's where we're getting those data and facts. We're looking at concert sales. We're looking at record sales. Not that these should be done alone, but together. You don't wanna stifle the brainstorming and innovation that comes from the inside out thinking.

Lastly, I'll talk about relationships. And again, I have to thank Johan for setting us up so properly because this slide was ready to go.

We had to stop and think about the customer journey and realize that our customers don't care that there isn't a relationship between our website development team and our contact center.

So when a customer goes to pay a bill on the website and struggles, they're expecting, when the customer service agent picks up the phone, for them to pick up where they left off, help them with more advanced knowledge, help them figure out their task, not have a repeat experience of that customer service agent sitting in front of the same screen that they were on five minutes ago.

In order to increase that empathy and make that awareness known, we had to foster relationships between those groups. We had to get people together in meetings and say, hey, here's a situation where we're watching people struggle on the web and then several minutes later make a phone call about the same task. So thankfully, we have the metrics to show that. And Melissa's gonna come up next and tell you about a case study where it came to life.

Alright. Thank you.

Alright. So thanks, Jen. We are so grateful to have such a tight partnership with Jen's team who she already mentioned we affectionately call our sister team.

So as we saw earlier, Jen talked you through the CX composite score framework that's built with the transactional and relational data.

So these data are all collected in the wild, essentially, from what has already gone out to production.

My team conducts our research mostly prior to that phase. We're evaluating designs prior to launch. So this gives us an opportunity to look at satisfaction, ease, and trust during the design evaluation of these tasks, which ladder up to those moments that matter. So this can give us an early read on how these features are performing with respect to the CX metrics, and we can make changes, hopefully, before going to production.

So we, as UX professionals, all know the value that we bring to the table, but it can sometimes be difficult to show that value to our stakeholders, to leadership. So being part of this framework really shows how tightly coupled UX and CX are and how UX research can contribute to those higher level CX metrics and business goals.

So we're gonna dig a little bit into how the UX research team operates and how we tie CX in throughout our process.

So earlier this year, the UX team actually started working in more of a matrix model. So it used to be more of a service model. Now, our designers and our researchers are embedded into the development squad. So designers, one to one. Researchers are responsible for two squads just due to the size of our team.

UX research leadership: we are still the people leaders of the UX researchers, and so we stay really close with them in the work that they're doing. So, you know, continuing to mentor them, coach them, whatever they need throughout the process.

We're also making sure that their capacity is good, that they're not overloaded or underloaded. We're also overseeing, you know, the UX research process and strategy.

And then when it comes to our process, I'm sure what we have laid out here looks a lot like what you all are doing in your organizations as well. So we start with the initialize phase, which is really just understanding the problem. You know, better understanding any research goals, any hypotheses that are out there, talking through success metrics. And this, a lot of times, is a conversation. We work through this together.

Next, we go into the gather phase. So that's really just pulling together all of the information that we have, all the data that we know so that we can lay it all out there and understand if there are any gaps in our understanding.

If there aren't any gaps, we don't necessarily need to conduct research. We can share out what we know and inform the work going forward. But if there are gaps, that helps inform our research plan.

And then we get into creating our research materials, our study. We rely heavily on UserZoom for a lot of our research, and then we launch to participants.

And next, we get into the synthesis, of course. So, you know, extracting those themes, those insights, and packaging it up into, you know, a big report where we really try to prioritize actionable insights for our stakeholders.

And then finally, this last part is really key and really a big benefit to us being embedded in the teams because instead of moving on to the next project, we're saying, hey, let's watch this. Let's see how we're doing in production so that we can really understand and assess the effectiveness of the change that we've made.

So this is definitely not something done in a silo. You know, as I mentioned, we're working through this with, you know, our UX partners, with the squads that we're part of, you know, many other folks including our sister team. So, we're always in close collaboration with others. You know, again, those relationships are really key.

Okay. And, you know, as I mentioned, there are really few places along the way where we're not in close partnership or having those touch points with CX. So, again, during that gather phase, we're pulling all the research we can from Jen's team, you know, looking at what generative work has been done. What are the surveys saying, all those hundreds of intercept surveys that we have? You know, what are people calling about in the call center? So really pulling all of that together to help inform our research plans.

When we're conducting the research, we are implementing those metrics as part of our validation. So the satisfaction, the ease, and the trust are part of that measurement protocol.

And then with synthesis, this is key too: really making sure that we're triangulating all of these insights together. So looking at what the surveys told us, looking at what we learned, and tying that all together really helps us build a stronger story of what's going on. Where did we come from? What did we learn? And where should we go from here?

And then finally, again, at that last sort of it's not really the last step. It's ongoing. Right? But we really look to our CX partners to say, hey.

Like, let's measure the impact of the change. So where were we before? Now that we've released this, what are we seeing now? And that helps us understand the effect of the change.

So as Jen mentioned, we're gonna go through a real world example.

So this is a case where we set our sights on facilitating collaboration, again, to bring those actionable omnichannel insights to Centene.

So this was a larger effort where we really pulled together a lot of different groups. Right? So our call center analysts, our digital product owners, UX, CX, amongst others.

And so, kind of setting the landscape here, we're looking at data for the task of resetting a password on one of our secure sites.

And so the graph shows the CX composite metric scores in the blue dotted line and our calls to the call center in the solid purple line.

And, again, these are showing the task of resetting a password.

They are the week over week scores of call volumes and those CX composite metric scores.

So, again, this project is showcasing where we uncovered an issue that had occurred with an update to our website, and people were starting to call the call center with confusion over the website. So we saw calls related to this task almost double in volume, so that's definitely not a good sign.

So we started to dig into the intercept survey data so that we could kinda understand more context around the problem and then really start to understand, like, is this something we need to take a look at and prioritize a fix for?

And so this really encompasses some of the feedback we were getting: "Setting up password with entry key, extremely confusing."

"Worst password experience ever." So not a good one. We see this as the very sad face on our journey map.

Definitely something that we are not happy with. So this allowed us to prioritize a change. Right? So that's where UX came in.

You know, we took all of the data and created an updated design, and then the UX research team validated the change.

The fix was then implemented, rolled out to production, and almost immediately, we saw those calls drop. So if you see that nice little line down, that's very good news for us. So that was definitely showing that we were having an impact. Right? And then, of course, we need to go back, you know, to the other data and look at our other metrics to really make sure that the change is, in fact, having that positive impact. So we were able to validate that with the survey data and, as you can see, the CX composite metric came back to its original levels.

Very good news.

So this is an example of measuring the CX composite metric at that transactional level and how UX and CX work together to validate the impact of the change.
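
The kind of monitoring in this case study, watching weekly call volume and the composite score together for one task, could be sketched like this. The weekly numbers, the thresholds, and the `flag_weeks` helper are all hypothetical. The talk only says calls "almost doubled" while the composite dipped.

```python
def flag_weeks(call_volumes, composite_scores, call_ratio=1.8, score_drop=0.3):
    """Return indexes of weeks where calls spike and the composite dips
    relative to the prior week."""
    flagged = []
    for week in range(1, len(call_volumes)):
        prev_calls = call_volumes[week - 1]
        calls_spiked = prev_calls > 0 and call_volumes[week] / prev_calls >= call_ratio
        score_dipped = composite_scores[week - 1] - composite_scores[week] >= score_drop
        if calls_spiked and score_dipped:
            flagged.append(week)
    return flagged


# Hypothetical week-over-week data for the password reset task.
weekly_calls = [120, 118, 125, 240, 235, 130]
weekly_composite = [4.1, 4.0, 4.1, 3.4, 3.5, 4.0]

print(flag_weeks(weekly_calls, weekly_composite))
```

Requiring both signals at once is a deliberate choice in this sketch: a call spike alone could be seasonal, while a spike paired with a score dip on the same task points at the experience itself.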

Alright. So in conclusion, Amy, Jen, and I have shown you how we have built a customer obsessed culture at Centene.

The experience factors that matter the most will be a function of the customer that you are considering and the business value that you are looking to drive. But as mentioned earlier, not all are created equal.

So then we select a metric based on its ability to predict that business value.

You saw our formula. You'll need to build one that translates your metric to the hard value statement that is most impactful to your company and then measure it regularly.

Get obsessed.

By understanding the process of how decisions are made, fostering relationships between groups, and delivering actionable omnichannel insights, we have shown you how we've operationalized our model.

And finally, we've shown you how a strong connection between UX and CX allows us to tie our data together to build stronger insights that then fuel amazing experiences.

Thank you.

Great. We have time for a few questions, so.

Okay. Hello. Hi. So I'm not sure if I remember your names correctly. Jen and Melissa, I believe.

Mhmm. I'm representing UXR, and I talk to customer support now and then to kind of have touch points, but that relationship is not strong enough to be able to pass on, like, hey, we have this problem. Let's do something more tactical about it. So I'm curious what those touch points might look like if it's a weekly meeting or how you guys choose to prioritize the things you're seeing in CS.

I wanna make sure to clarify. You're talking about the customer support team, like the call center team?

Yeah.

Whatever it is that you guys get your customer feedback from.

So if it is those surveys, if it is the call center, however you're taking that boots on the ground information and then being able to pass it down to the UX team, what that looks like.

So one thing we did, I don't know if you have this at your company, but we do have a metric that tells us how much it costs per phone call. So while we've stood up here today and talked to you about trying to preserve revenue, there's also an expense side of this equation that you could be monitoring. So we worked in partnership with the web analytics group to make sure, like, when Melissa was showing you, that was a secure logged in site that we were studying.

When that particular customer departs from that site and makes a phone call, because we're doing call analysis as well, we can see this is where channel switching occurs and is really expensive to the customer. Because these are self-service tasks they should be doing on the website or the app by themselves. So when they have to pause now and go repeat the task by calling customer service, that's an expense we could avoid. So we tried to quantify that by showing that cost per phone call as something that could be avoided. And then they came to the table and they were like, oh, yeah. We'd like to reduce calls.
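
One way to picture the channel-switching analysis is below. This is only a sketch under stated assumptions: the record layout, the thirty minute window, the eight dollar cost per call, and the `count_channel_switches` helper are all invented for illustration and are not Centene's figures or tooling.

```python
from datetime import datetime, timedelta


def count_channel_switches(web_attempts, calls, window=timedelta(minutes=30)):
    """Count calls that follow a web attempt at the same task by the same
    member within the window."""
    switches = 0
    for member, task, called_at in calls:
        for web_member, web_task, attempted_at in web_attempts:
            same_journey = web_member == member and web_task == task
            if same_journey and timedelta(0) <= called_at - attempted_at <= window:
                switches += 1
                break  # count each call at most once
    return switches


# Hypothetical (member, task, timestamp) records.
web_attempts = [
    ("m1", "reset_password", datetime(2024, 10, 1, 9, 0)),
    ("m2", "pay_bill", datetime(2024, 10, 1, 10, 0)),
]
calls = [
    ("m1", "reset_password", datetime(2024, 10, 1, 9, 5)),   # switched channels
    ("m2", "pay_bill", datetime(2024, 10, 1, 13, 0)),        # too late to link
    ("m3", "reset_password", datetime(2024, 10, 1, 9, 10)),  # no web attempt
]

COST_PER_CALL = 8.0  # hypothetical dollars per call
switches = count_channel_switches(web_attempts, calls)
avoidable_cost = switches * COST_PER_CALL
print(switches, avoidable_cost)
```

Multiplying the switch count by cost per call gives the expense-side number that brought the contact center to the table.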

When they came to the table? Yeah, yeah.

She wants to know about the UX.

So however When I've shared it, like what you guys are hearing, I'll pass it on to the UX team so you can approach it.

What do those conversations look like?

I mean, sometimes they're just very organic in nature, right? Where Jen's like, hey, these guys called me and we definitely need to pull you in. Which is awesome because we have that really close tie together. So we will do that quite often.

And my team is also digging into data as we're doing that gathering phase too. We have dashboards and things that we can look at, or we also partner with Jen's team as well and say, hey, like, can you help me interpret this?

And so there's just a lot of organic back and forth conversation.

There's one additional, I guess, forum or capability, I'll call it, that we're in the process of standing up. We're probably about nine months into forming this group. It's what we call an experience management team. They are sort of consumers. They're experts in the end to end journey of each of our customer types, and they breathe day in, day out the data and information that Jen's team is producing.

And their job is to know the moments that matter and to monitor those. And if there is a blip, or in the case of, like, the password reset, that's like an enabling capability to many of the other moments. Right? If they see something like that that's impacted, it is their job to work in the existing processes, depending upon the group they need to reach out to for the fix. But in the case of the web team, it would be reaching out to the product manager, who would then likely get in touch with UX design and work it into, is it something that we need to fit into this sprint now? Is it something that we can leave till the next PI planning? Or, you know, so I think that's it. I don't know if that helps.

Yeah. That's exactly what I was gonna say. Thank you. I appreciate it.

We have time for one more question because We can stay after.

Yeah. We could be a little late to lunch. I know.

I know.

We don't want to make everyone late to lunch, but you're going to be here the whole conference.

So, yes. One more question for now, but I'll tell you where you can find them during lunchtime.

Thank you. Hi. I'm Julie. I work in the climate tech sector and I'm on a B2C marketing team, but we can only market through our AD utility clients. So we don't have direct end customer interaction unless we go through them. One thing that we find is that there's often a lot of pushback that it's, like, nagging the customer too much. I saw that you have a lot of different touch points where you're asking for feedback.

How do you balance getting all of these different types of touch points with potentially creating a more frustrating experience by asking too many times for people to weigh in?

So like trying to avoid survey fatigue in that sense?

Exactly. And like making sure that you're prioritizing most important parts for resourcing that feedback.

Yeah. So the first thing we did early on, which was kind of at the one zero one level, was to make sure that we put cookies on all those surveys. And some of them are pretty lengthy. One of our lines of business said, I only want that pop up to show once every ninety days after a customer visits our site.

So we use the power of cookies to prevent that. What we're working on now that's a work in progress is trying to get it all the way down to the customer level as a data source to say, these are all the interactions we have had with them in total. Whether it's a marketing interaction, a research request, maybe something transactional happened that they got an email from us confirming a payment. We wanna know, like, we're not bothering them too much.

So having that data in more of a journey view at the exact individual customer level times millions and millions of members is what we're trying to do for next year.
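
The cookie-based throttle Jen describes boils down to a cooldown check. A minimal sketch, assuming the cookie stores the timestamp of the last time the survey was shown: the ninety day window comes from the talk, while `should_show_survey` and the storage format are hypothetical.

```python
from datetime import datetime, timedelta

COOLDOWN = timedelta(days=90)  # "once every ninety days" from the talk


def should_show_survey(last_shown, now, cooldown=COOLDOWN):
    """Decide whether to show the intercept survey.

    last_shown is the timestamp read from the visitor's cookie, or None
    if the cookie is absent (first visit or cleared cookies).
    """
    if last_shown is None:
        return True
    return now - last_shown >= cooldown


now = datetime(2024, 10, 1)
print(should_show_survey(None, now))
print(should_show_survey(now - timedelta(days=30), now))
print(should_show_survey(now - timedelta(days=90), now))
```

The customer-level view Jen mentions would replace the per-browser cookie with a per-member contact history, so the same check could cover email, research requests, and surveys together.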

Thank you. A girl can dream. Right?

Yeah. We'll let you know.

Awesome. I want to have us give a big thank you to the Centene team for their wonderful presentation.