Customer panel

Brian Cahak

Co-founder and Managing Partner, Zilker

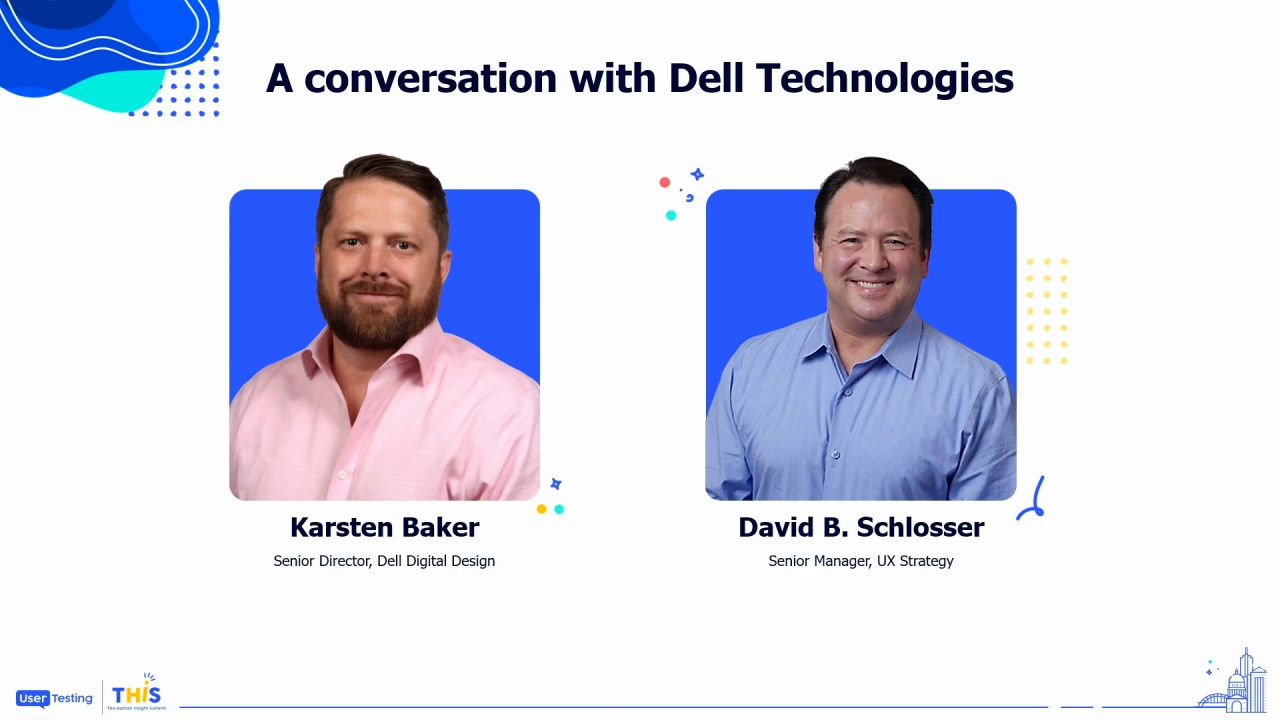

Karsten Baker

Head of Dell Digital Design, Dell Technologies

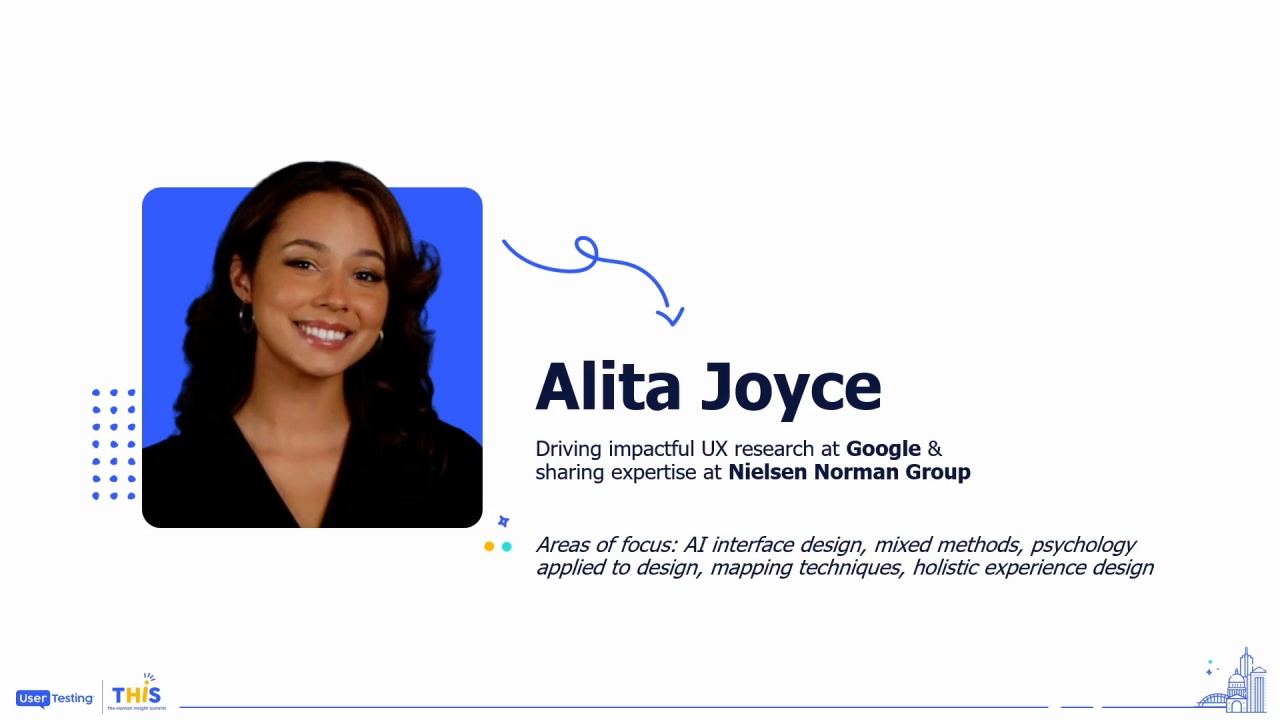

Aditi Sharma

Head of Research and Design, Amazon

The panel will address adapting UXR and Design roles, teams, and workflows to meet changing consumer needs. Speakers will share their experiences in leading teams to innovate and improve insight collection and sharing, inspiring the audience to embrace change and challenge the status quo.

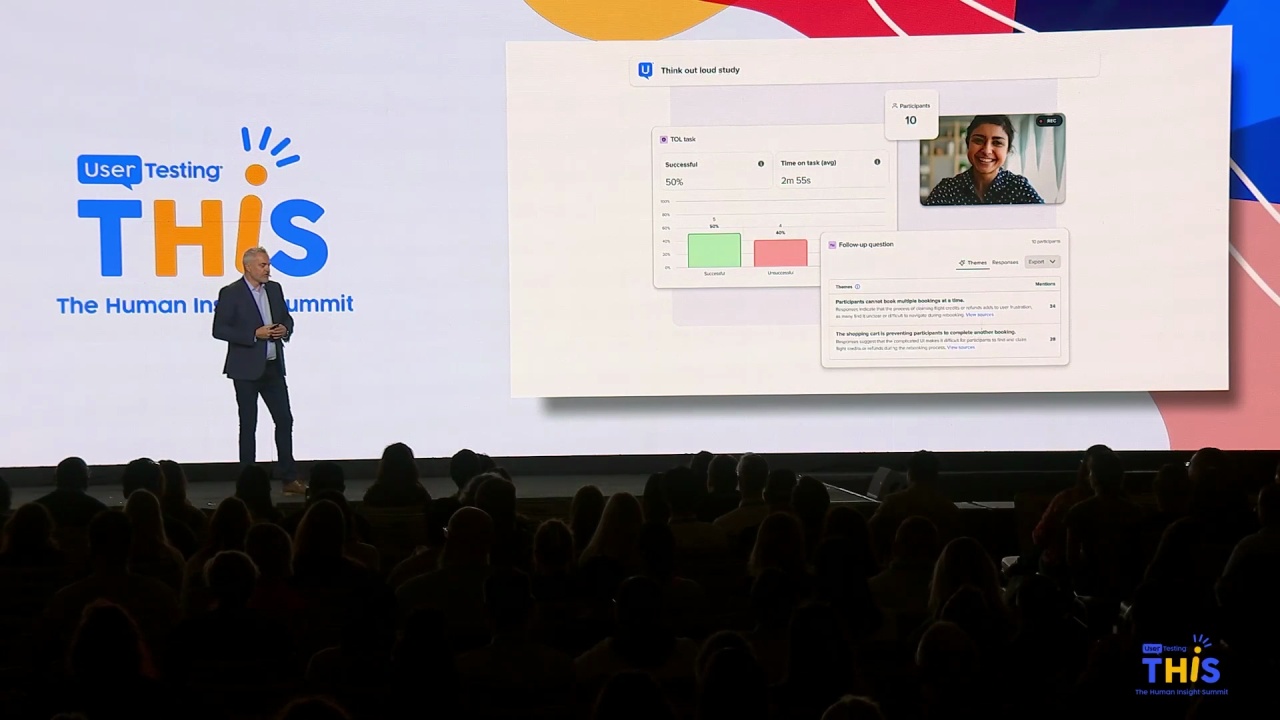

It's great to be back together.

I thought it would be nice for you all to go right into this meditation. Our friends at Zilker Trail wanted to make sure that everybody could recenter before we start the next set of programming.

We're trying to make sure that the stories that you hear are customer stories. One of the most important things for us as we all come together is for you not just to hear from us, but to hear from our customers. So, before we get into the customer panel, I'd like to show you a video from one of our customers.

BT Group is the UK's leading communications provider. We have about twenty five million monthly subscribers, four hundred and fifty plus retail stores, about three million plus monthly app users.

Traditionally, EE sells telecoms products such as broadband and mobile connectivity, but we're going into newer verticals now: tech, gaming, home security, insurance.

One of our key goals was making EE the consumer-facing flagship brand and moving away from BT. We use human insight to test the app and website, but also physical devices, like how people set up the hubs. UserTesting is an insight platform that allows us to observe people using our digital products and services, and it allows us to reach a wide range of people across the country. We need to do research to understand user behavior and user motivation.

We have a very robust user research team within the design function that actually obsesses about getting that type of unique customer feedback and input.

Our ethos as a company is to try and be the most personal, customer-focused brand. This is how you be customer focused. You spend time with them. The app is the window into that personalized, helpful conversation every day.

It's a lot more than just an app to check your bill. It's going to be a very personalized app to also shop for your consumer electronics. Human insights are essential when we are designing for our ecommerce platform. We discovered that what worked best was a product led approach so that customers could go straight to phones or tech or gaming or broadband.

Ultimately, when we released it, we saw a fifty percent uplift in traffic to the broadband pages. It's all enhanced by our new AI assistant, Amy. We found the chatbot wasn't answering a lot of our customer queries about roaming. So we did some research.

We redesigned it. Since the redesign, Amy responds more naturally to people. The chatbot containment rate increased by seventy five percent. We also had a fifty percent increase in click-through rate.

User-centered design used to come from a place of moving pixels, and now it's moving companies against their competitors. That's the impact it has on a strategic level if done correctly. What an opportunity to be part of that.

Fifty percent uplift in traffic. I really like that stat. Right? Like, what a great set of statistics. So, it's a great story about how meaningful it is to use human insights.

Wow. I can't even speak. Let me try that again.

Interesting to see how meaningful it is to use human insights to shape the way that customers experience applications and their whole journey with a brand like EE.

The next session is a customer panel, and I'm gonna stop talking and let the customers do the talking. So welcome to the stage.

Our moderator, Brian Cahak with Zilker Trail, Aditi Sharma with Amazon, and Karsten Baker with Dell.

Awesome.

Hello.

How great is it to follow that stretch break? Hopefully, y'all got some fresh blood going there. We were in the back watching the sunrise. It wasn't too terrible standing in the back room watching all that. We were stretching out with you, so it was very fun.

So my name is Brian Cahak. I'm the co-founder of Zilker Trail. And before we jump into the panel, I just wanted to frame it really quick for you. As we were getting ready for this panel and asking how we could provide some insights that you'd really find valuable, we started thinking about these tensions or these balances we all roll around in.

So we're gonna talk about creativity and accountability, innovation, generating outcomes, and artificial intelligence, and how we navigate larger organizations that are working through these tensions, these balances, and how you really think through that. So that's what we're gonna talk about today. Before we do that, I'll let my esteemed panelists introduce themselves. Go ahead, Aditi.

Hey, Brian. Hi, everyone.

It's good to be back.

So my name is Aditi Sharma. I head research and design at AWS.

Specifically, my team and I work on fintech products that help our CFO and his team make better financial decisions to help Amazon and AWS with their revenue growth or profitability, or looking at free cash flows. Again, very technical, finance-related terms, but things that I find interesting. The team today is a mix of researchers, strategists, and designers, and we work really closely with product and engineering to drive results and outcomes for our business partners and for our finance partners. So that's me in a nutshell. Great.

Karsten Baker. I have the pleasure of leading our digital design organization.

And we are a human centered design organization that focuses on creating experiences that are modern and measurable.

And in addition to working on some of our experiences like Dell.com and our B2B experience, which is called Premier, we have a pretty broad portfolio. So we support a lot of experiences, from our sellers to supply chain. So just a lot of digital areas across Dell, for some context. Awesome. Yeah.

So a really good blend of capabilities and organizations. So we'll start off with a little bit of humanity. We always love to start with humanity, end with humanity.

That's unfortunate for them. Yeah. But maybe a little bit first on how you chose this career. How did you get to this spot? Maybe Aditi, we'll start with you.

Yeah. I think this career chose me. I usually didn't have a lot to do with the jobs that I picked or the career that I wanted to shape. I was really going for opportunities or problems that were exciting me.

And I learned along the way. I think it started with curiosity. It started with going back to learning continuously, whether it was from the industry or my colleagues or, you know, the places I was going to. It was really getting a lot of that inspiration.

I changed my path. In fact, I was almost going to be a doctor. Cliche. I know. Stereotypical.

But that quickly changed when I found design and design found me. So it's been that romance for years, a number I don't wanna give away, but it's been quite a long time, where I've really found meaning with design. And whether it's working at Amazon or giving back, whether through teaching or through being a part of not-for-profit organizations, I've really found meaning both by improving employee experiences, which I'm doing at AWS today, and by making the world happier. Cliche, but yes.

It's wonderful.

Wonderful.

Yeah. So my journey, you know, was probably different than most.

I had the good fortune of getting to try a lot of different things. I've been at Dell for twenty one years now. For my background, I got a degree in management information systems and started off doing web application development straight out of school, moved into doing some front end UX work, some UI design work, jumped into Flash and really got excited about taking my programming background and getting to create more interactive experiences. I've been in marketing, doing a lot of content marketing.

And then when I stepped back and, you know, looked at some of the roots of where I came from, I actually came from a really creative background in my family. My father is a commercial architect.

My mother did a lot of abstract oil painting, and she was also an interpreter for the deaf. And so it was kind of a natural transition for me to take a lot of my technical background and move over to a more human-centered occupation. I took a lot of my knowledge around content and around something I was passionate about, accessibility, and found that we had some gaps within our design organization at Dell. So I leveraged my background to get my foot in the door, slowly started picking up more design work, and led our enterprise design team. And then a few years later, I had the opportunity to lead this organization and haven't looked back since. It's been a lot of fun.

Flash is all the rage still.

Dating myself, clearly.

You know. We're all twenty nine here. Yeah.

So the first thing we wanted to explore is this balance between creativity and accountability. We all, with our organizations around research and design, ask how you give people that room to breathe for the creative elements while we're also accountable to the business. So I'd love to hear your thoughts on how you're doing that today.

It's definitely not easy. Right? Like, I think we were also talking about just backstage how design needs its time, but it's our job as leaders to make it more pragmatic and more approachable, both for new designers or new researchers in the field, but also for our partners so they know the expectations when it comes to a design process. It's not something that should be limited to our community. I think we need to open it up and make it everyone's problem or everyone's process.

So we do that today at AWS. And I've been working with some other amazing design leaders across Amazon to categorize the problem space in three ways. One is your known knowns. So that might be, hey, you're working on an existing product, and you are adding a new feature for an existing customer.

The second one could be your known unknowns. So this could be a new feature that you're adding for a new customer that you want to onboard on your product.

And the third category could just be unknown unknowns. This is the most complex one, and it may require a more integrated design process. So we look at the problem space. We look at the context that we are dealing with. And then accordingly, we've been finessing a playbook or a decision tree.

I shared some glimpses of that earlier in my talk this morning, if you are interested in the artifact.

But essentially, we have a series of questions that we've set up for our teams.

So it really helps them narrow down the artifacts or the templates that they may need to conduct research, even user testing, interview scripts, and the overall setup that we've now put in place. How do we speed up the process, the overall operations? And how do we make it transparent enough for our stakeholders? So I also want to talk about outcomes, but essentially it's thinking about process as pragmatically as possible, opening the doors to how the sausage is being made by inviting your partners to be a part of that process, and then also tying in outcomes and metrics so that each wireframe or each interview that you're conducting is tied to a business outcome or to an operational outcome.

So that "so what" is always a part of your process and thinking. So that's how we are doing it. We are keeping it really zero and one, very binary right now. But it's been really helpful for our teams and for our stakeholders to be aligned on those expectations. Yeah.

Yeah. And, you know, I think one of the things that you said that resonates a lot with me is that transparency. I think when I stepped into this role, I got a lot of feedback that the design part of the process can often feel like a black box. And people are really excited, and they're anxious to get to the end result.

You know, they wanna see the high fidelity prototype and wireframing. And sometimes people have a hard time being patient while we work through the process to get there. And so one of the things that we've been doing is really breaking down our design process into smaller chunks. So at Dell, we've recently been onboarding all of our product teams onto Jira, and we've found that to be a great opportunity for us to really drive transparency into the design process: take the original epic or story that's entered into Jira and break that down into smaller chunks.

So, for example, if we're in the discovery phase of our design process, we may show that we're gonna complete our user interviews in week one and focus on data synthesis and insights in week two, just so people start to see progress being made towards the end result, and understand that we really need the time to focus on understanding our users and understanding the problems that we're trying to solve instead of rushing through. And another thing that we're looking at: we're not interested in operational metrics to track time on task or something along those lines.

But we are interested in understanding, when we follow our design process versus when we don't, how does that impact the outcomes that our team is delivering? And understanding that is important to communicating that information back to our stakeholders.

So Yeah.

Love that. I certainly love the playbooks and how you're doing that, and love the idea of the transparency throughout so it's not a black box, even though everybody loves a good high fidelity comp. Right?

Yeah. Absolutely.

Can't get away from that, but it's real. The next thing we wanted to jump into is innovation. And again, how do we balance these elements together? So how do you help your teams innovate? How do you help their stakeholders innovate as part of their overall charter? So, yeah, I'd just love to hear how you think about that.

For us, I think innovation has been very integral to the DNA. And I would say that not just for AWS; I would say that for this entire room. I think we as creatives, or folks who are more closely connected to what our customers need, become more adept at taking what our customers are telling us, and maybe not telling us, and using those insights in a creative way to drive innovation. So at AWS, we've been doing it proactively, and of course we are also responding and reacting to customer needs and escalations.

Those do happen, of course, when the design process is not being followed. We do come across those use cases as well. So our approach has been to look at the skill set mix that we have on our team. I think it's identifying almost that assembly chain, where you're starting with an outcome and then you're connecting it to your org strategy.

And then from there, you are starting to think about what are some of those experiences that may be built along the way, and then you are crafting some North Star visions.

So I'm not opposed to high fidelity prototypes.

Like, please don't kill me. But I do think that that's our strength. Like, we should embrace our strength of visual storytelling as a community.

And maybe product managers have their requirements backlog.

What we have is multiple iterations of wireframes and concepts and insights and research reports that we've done. So I think we need to embrace that. We need to gather those artifacts and use them to amplify our voice as a community.

So that's what we do. We bring back. We connect. We listen to the industry, to what our customers are telling us.

We link that with service blueprints and journey maps to align everybody on a shared vision. And then we break that down into incremental pieces, whether it's through epics or, for example, we use Asana to track the overall progress. And then ultimately, we make sure that the intent of that design is coming through to our front end build. So for us, quality is also very important.

And my team tells me I insist on the highest standards. That's an Amazon leadership principle (LP). And that's always in friction with delivering results. So I think we need to think about pragmatic approaches so that we continue to deliver incremental value while we think big and innovate, whether it's proactively by being ahead of the curve, or just innovating even within constraints.

I think that's where true magic lies.

Yeah. Very cool. Yeah. That's wonderful.

I think one of the things I'll share with y'all: one of the best definitions of innovation that I've heard comes from Jared Spool, who I'm sure many of you are familiar with. He's an educator and author in the UX community.

He defined innovation in such a meaningful way for me, which was really around anticipating needs and exceeding expectations. And I think it's such a simple, beautiful definition of innovation. If you're stuck and you're not sure where to get started, if you break that down, it's really about understanding the needs and starting with your customers and your stakeholders. You know, what are the struggles? What challenges are they facing?

What are the opportunities that are out there? How does that line up and tie in with your business strategy and outcomes, and, you know, trying to look forward and look ahead. And one of the things that we've been doing is educating our stakeholders. You know, I think we all agree time is one of our biggest constraints. Right?

And when folks look to design to drive a lot of innovation, it's hard if you have the same person who's focusing on delivering against a road map also trying to look at the future. Right? That context switching back and forth can be really difficult if you're being pressured to deliver something against a near term road map, but also looking ahead. And so one of the things that we've been doing is talking to our leadership a lot to help them understand that we wanna be innovative.

We wanna continue to look forward.

One, we need an environment where it's okay to fail, and to fail fast and learn from that and move on. But we also need time. Right? We need a little bit of time to get the creative juices flowing and, you know, help get that mind share freed up to try to focus on new ideas and exploring different areas that we might not have looked into before. So I think it's really about communicating that to your stakeholders and getting that buy-in upfront, because it can lead to great results. It can lead to great ideas.

And, you know, I think we've also started leveraging a lot of our case studies. We've been building a lot of case studies. We've got a really great library of many case studies that we can use to educate folks and help them understand more about the creative process. We have a lot of folks, a lot of teams that we support, and the levels of maturity are quite different between all of these engagements.

Some folks are like, yeah. No problem. I get it. I understand. And some folks are like, well, how long do I need to wait?

How long is this gonna take? And Yeah. Alright. Just calm down. We'll get you those high fidelity Time.

Soon enough. Yeah.

But just Yeah.

Just chill. Give us a few minutes. But yeah. It's amazing.

So the next topic we wanna jump into is this idea of the feature factory. We've all seen it, especially in the last couple of years. It kinda came to life, at least in my opinion, on the back of COVID, where companies just started really cranking out features and functionalities, and all of a sudden that becomes the underpinning of how things are built.

It's like we're just launching a lot of features, and then it becomes kind of the norm. And so in this world of the tensions that we have to live in, how do we think about the role of insights or the role of design when that's some of the context of the feature factory? How are you navigating that in your world, where there are a lot of expectations to ship, but at the same time you're trying to be customer centric and leverage insights?

For us, I think what you said is so critical: thinking about delivering value, removing that black box, and inviting people in. That, to me, has been so important to change the way we think about our disciplines.

We need to start blurring the lines a little bit, because I do think that everyone's on a timeline. We also want to deliver results fast. So if we think about that shared mission a little more and start aligning the teams around that, I think that's what really moves the needle. And that's what I think has been critical for us at AWS as well.

So we've been focusing a lot on end to end workflow mapping. And we've used, of course, user testing when we validated concepts, when we looked at the areas of friction or any comments that we are getting off the bat, combining those insights and thinking about where are the friction points within our existing solutions. So being ahead of that and doing more of that user testing ahead of time. So we did a survey.

And I was looking at the report before coming here, so I have the right number.

So we've doubled our effectiveness at getting better UX out there, not only faster, but getting it right faster. So we've doubled our ability to do that in just a year. And I think the reason why we were able to do that is because we started to break down the process into a playbook. And now we've made it so easy for people to understand that, hey, here are some expectations.

I need to have my product scope. I need to have my current state understanding of the customer's problems. I need to have a linkage of their workflow so that I can take out those nuggets of innovation and really give them something delightful for their current experience that they're not even asking for. Right?

So thinking about that listen, link, and learn again and, really bringing that forward for our partners.

So one thing: I shared this case study earlier today. We did this with Uno. Nobody told us to create Uno, this AWS finance system, our concept to bring our twenty seven applications together under one umbrella.

That was just a hunch, right? Like, something that we presented early on to our leadership. Then it was really about working backwards from that and saying, okay, we have these customer experience outcomes that we've aligned on, like controllership, improving accuracy, improving data analysis times, or connecting experiences. All of these things added up to help us define our system architecture and, based on that vision, start delivering pieces that fit that long term story. And we're now tracking our impact across business and across operations, looking at efficiency and driving hard and soft savings as well. But, yeah, that's, in a nutshell, how we are changing the paradigm. And I don't wanna use words like coaching or educating so much, but I think as we are getting educated about what it means to deliver value for a finance world, we are also working together on a shared vision for what it means to drive good outcomes with research and design.

Mhmm. And you talked about all those metrics and how you track them. How much of that is shared out to the organization as part of learning and understanding what you're doing and therefore, you know, creating some interest?

Yeah. Great question. At Amazon, we write a lot of documents. So we wrote a six pager doc, just explaining, when we started, what is UX research and design? Yes. We started there.

And then we connected We all started there.

I think everybody's there.

Okay. Good.

You're not alone. Yep.

Okay.

So as we moved forward, we started to look at metrics around connecting research or inputs, honestly. And it was a hard conversation with the leadership to have them change their thinking around, yes, I think of an idea, and the leadership goes and works with the engineering team, and it's built. Now who's tracking where it's headed? Not so much. So we've set up these episodic research methodologies where we send out surveys to our partners across product, business, and engineering, just asking them about the UX research and design team's engagement.

And we also survey our own teams, so we understand how much time was spent on getting a feature out. And then once the feature was out, did an engineering team have to work on that feature again? So now we are measuring any rework that's happened after a UI has been released.

So again, that tracks the efficiency of the process, as you were talking about, and then ties it back to the business impact that we are driving. So we've been able to unlock more revenue opportunities for our internal teams because we've made the systems easier. Now they have more time to do more fun, strategic things that they've been hired to do as analysts. So that's how we are changing it.

I couldn't help but think as you're saying as you had to do more Flash, I was going back to okay. I know that's not what you're doing.

Yeah. Hey. Question for you.

Please share your thoughts here.

Yeah. No. Absolutely. So I think, you know, first off, your design process has to be flexible enough to meet your stakeholders where they are.

There's not a one size fits all design process. And as I said, we've got a pretty broad portfolio of experiences that we care for, and different levels of engagement in terms of maturity and experience working with design within each of those experiences. And so we have some that are very much outcome focused, and everything's great.

We also follow a methodology called OGSM, which is objectives, goals, strategies, and measures. And that's been really helpful in terms of getting that alignment between product management, design, and engineering and making sure that we are all collectively moving to the same beat in terms of the outcomes that we're trying to accomplish.

When we work or engage with a group that hasn't implemented that practice, we have to be a bit more flexible and understand, okay, hey.

We are seeing these key tenets of a feature factory here, focusing on quantity over quality.

You know, we've had times where we've come in and started asking why from the get go and realized maybe we're a little too assertive, and they didn't like that approach. So they start to disengage because we're questioning their current strategy, which is, again, aligned more towards output versus outcomes.

And so it's like, okay. Well, maybe we need to be a little bit gentler, a little bit softer, if this is something where we see a lot of opportunity and we wanna continue to be engaged here. But, yeah, that transition, I think, is really important, and you have to strike that balance. You know, sometimes you have to meet folks where they are, and maybe you can ask every now and then, hey, what is it we're really trying to move the needle on? You know, what is the metric that we feel like is going to make a difference in our end user's life by adding this feature?

Right.

And I think, you know, one thing I learned early on in my life is you can accomplish ninety percent of your outcome through your approach. Right? So if you have a softer approach, people are generally a little bit more welcoming versus if you come in and you're like, what's going on here? Why are you doing this?

Yeah.

You know, they just back off and disengage.

But, you know, I've seen the detriment that it can cause to teams.

I think the best performing product teams are those where you have a flat hierarchy and everybody's empowered to contribute to the conversation, and, you know, that's where you see the best results. And so I think getting to a point where everyone is equal on that playing field is important.

However, you know, it can be a challenge in that process of getting there. So sometimes you have to give a little to be able to make progress towards that end goal. And I find in areas where it's not as mature, we have to educate a lot of folks along the way. And it's not their fault.

Right? It's just they haven't had that moment yet. And so my goal is to try to help them get there and help them understand, oh, yeah. Maybe there is a reason why we aren't moving the needle.

It's because we didn't really need to focus on this area.

Yeah.

Instead, our problem's, you know, much further to the left. Yeah. And so I really get excited around seeing those moments when you see those flashes go off and the light bulbs happen. It's why I love what I do.

Yeah. But yeah. Yeah. It's incredible. Yeah. We talked a lot about this kind of idea of meeting people where they are with our language, with our body language, with, you know, the approach, and then kind of guiding them along and kind of pulling them forward is a very common way.

We'll see. And so it's being rigorous, not rigid. Right? If you're rigid, then people get defensive.

So it's a really interesting blend. The next theme we wanted to jump into is around outcomes. We've all seen and felt in the last year plus a lot of pressure to deliver outcomes and tie the work that we're doing back to business value, and those questions come more and more. So we wanted to explore how you're doing that in your organizations and how you are helping your teams connect their work to outcomes.

This takes me back to a conversation I had with our new AWS CFO when I had my first one on one with him about three months ago.

And the first thing he asked me, and he's very curious, as any good leader should be, was:

What outcomes are you driving through your org? And I talked about CSAT, NPS, and customer effort score. He said, okay. How do you measure the quality of decision making and the work that your team is doing? How is productivity and efficiency tied to the work that you're doing? That really changed the way that we approached our own narrative as a team.

That is not just about satisfaction, but what leads up to satisfaction.

Breaking that down and decomposing that becomes really interesting very fast.

So for us at AWS, we look at employees and we look at their employee satisfaction. So what accounts for employee satisfaction? I'd say a great boss, yes.

But beyond that, maybe a good team.

Maybe the tools that you're working with, how effective are they?

Am I getting better decisions out there? And if I am, I'm definitely becoming the hero or the heroine of the show. So really connecting a better financial decision that you're making as an analyst day to day and tying that to your career goal. And in the process, UX played a part.

You know, but we were very subtle. We just provided the tool that helped them make that better decision. We augmented their intelligence, their capability. And of course, I'd love to talk more and more about AI all the time.

But as we think about where the industry is headed as well, those capabilities will help us achieve those outcomes faster and in ways that we've never imagined before. So business impact is one of the things that we have to track, and we do. And we look at hard and soft savings. And so, you know, hard savings might just be a product that's no longer needed because you've made another product so much more efficient.

That investment has gone, or you don't need to be adding a new team. So the KTLO mode, the keep-the-lights-on mode, that we hear a lot around operational efficiency, removing those big numbers. Soft savings may come just from workflow efficiencies that you're driving. Did you make just your user in your specific context go faster from point A to point Z in their digital journey?

And in the process, did you make them better? Or did you make them happier, which is, again, a very ambiguous term. So I'm on that journey now at Amazon Design, where, and we were just talking about this backstage as well, we are writing a white paper on connecting UX metrics back to business impact, operational efficiency, and, of course, experience metrics, but also productivity: thinking about lowering the number of UX tickets that we are getting, and improving our outcomes in a different way, where we've introduced a program like Common Patterns that helps us get to more outcomes faster across teams.

And we are having an impact through our Common Patterns: a data grid that we've designed, yes, for finance, that's going across Amazon. So we've taken those approaches, and the team has been really kind with me to get on that journey, to rethink how we position ourselves as a community.

And thanks to John Felton, the CFO, who really helped me think about a different leader.

Awesome. Thank you.

Yeah. So for us, as we get into a lot of our customer-facing ecommerce experiences that we work on, like Dell.com, we do a lot of A/B testing in addition to, you know, user testing and validation of our work along the way. So for us, for that type of experience, it's very easy to understand the metrics and directly how it's impacting the business, looking at, you know, metrics like conversion and average order value and revenue per visitor and all of that fun stuff. But like I said, we've got a pretty broad portfolio.

So that works well for those experiences. But as we get into other areas, you know, we understand the fact that a lot of these experiences we're working on are very different. Right? Therefore, there's likely to be a different set of KPIs that we're tracking towards in terms of how we're going to improve the experience and make the end user's life better.

So that's okay. Great.

You know, but one of the things that we were really trying to explore is, you know, and many of my leadership team, we took some training on UX metrics and, you know, I was like, okay. You know, this makes a lot of sense. Your experience is different. Therefore, your KPIs are gonna be different. But I'm trying to look at how do I create something that I can roll up to our executive leadership team. And that was a really big challenge.

And so we've been experimenting in a lot of different areas of how could we do that. And, you know, a lot of the feedback that I've received since being in this role is, you know, well, engineering has a lot of their metrics that they follow around, you know, page load times or Google core vitals and looking at the different metrics there and understanding how those impacts are. So we've really been digging in deep trying to understand how can we drive more accountability, you know, back into our organization, of are we actually improving these experiences or are we not?

And, you know, we've been partnering a lot with our behavioral scientists, and one of my peers leads our research team. And so that's been interesting. We've been looking at different ways around understanding cognitive load. You know, when we get an experience in front of somebody, what are their initial emotions and reactions, just by evaluating their expressions?

And, you know, it's really fascinating and pretty exciting. And so we started to get buy-in from our leadership team that, like, these are really important in terms of understanding these types of human behaviors and reactions in addition to how it impacts the business.

And so more to come.

If you're interested in learning a little more, I'll do a shameless plug. One of my colleagues and I will be speaking tomorrow morning at eleven AM, and we'll talk a little bit more about it. But, really excited about, you know, the direction that we're going and understanding, you know, the role of human behavior and how that plays into accomplishing your goals aside from just the desired business outcomes and metrics that we're trying to accomplish.

Yeah. Tomorrow morning, eleven AM, I caught that.

Just in case you didn't.

One last question. I know, we wanted to open up for a little bit of questions.

One is artificial intelligence. So kind of how are you experimenting with it today and as much as you can share about where you're finding value and how you're kind of, like, considering leveraging it going forward.

I won't touch upon what might have already been said around, you know, it will augment us. It'll make us better. Yes. I wanna talk about actionable insights. I think I'm really interested to see Gen AI impact products that our customers are using.

How can we look at, say, telemetry data?

How can we look at, you know, their digital journeys, not just within our tools, but also experience their worlds a little more closely and be a part of that process? So I'm really interested to explore Gen AI in a different way, not just for content creation, but thinking about it in different contexts. So ambient skills: how do we introduce Gen AI as a part of the product workflow itself where, say, I'm doing a report and it gives me certain insights or certain templates that I can use? So again, recommendations that are embedded as a part of the experience.

Maybe I use a skill to convert a table to a database. So very basic skills, but ambiently available for our users. So one is that. The second piece that I'm excited about is, of course, the reactive part, the NL queries and chatbots.

We can talk about them all day. So I'm really excited about, of course, integrating that at a larger scale and thinking about how much or how little we are giving away. So ethics also are always the undercurrent, but not that topic. But, yes, the reactive part I'm really excited about too.

And then the proactive insights. So, recommendations that are being delivered just around, say, anomaly detection: hey, a threshold was breached and you need to take this action proactively. How can we be proactive in our approach to drive innovation, and in the process translate it to whatever success means for you, your team, or your org, whether it's revenue growth or just the growth of your team?

So again, I'm excited about the ambient, proactive, and reactive skills that Gen AI will bring to us.

Yeah. Awesome.

Yeah. And, you know, we've tried to take this hype around AI and break it out into two areas. Right? There's designing for AI, and there's designing with AI.

And, you know, when it comes to designing for AI, I'm sure like many of you working at a large enterprise, there was this huge influx of requests coming in of, you know, we wanna insert AI here and there, without really understanding why and what we're trying to accomplish. And so one of the things that we actually did is we brought together all of the designers that were really passionate around AI, and where we were getting a lot of the asks coming through. And we decided to create a center of excellence around AI for design so that we could align on what are our tenets that we wanna focus on. You know, you mentioned transparency and being ethical.

Another one's around explainability. You know, a lot of people don't trust AI yet, so you have to help them understand how you got to that result and why.

And so that's been working out really well for us, just around what are those experience tenets in terms of our approach of how we're going to design for AI, so that we're not going off and trying to solve common problems separately, but that we actually have a set of common standards that's stitching us together in our experiences.

And then when it comes to designing with AI, you know, I think one of the things that is fortunate for us in terms of what we do, if any of you are familiar with the robot curve, as an example, it's a great thing to go look at. But, you know, a lot of those rote tasks are gonna be automated. Right? But that's gonna free up time for the things that can't be automated around the creative thinking process and, you know, focusing on that very high level strategy.

You know, AI is not there. They're not gonna replace those types of jobs. So I look at it as how do we create efficiency, leverage AI to do things around data synthesis. Right? We know it's very accurate and, you know, less prone to errors than humans when it comes to certain aspects of the process. So I encourage everyone to, you know, get familiar and understand how you can leverage it to create, you know, faster time with your design process overall, and then ultimately free up more of that time for you to focus on the things that really matter. Yeah.

And, you know, we're exploring some different technologies, which is pretty exciting.

One of which we've come across is, you know, a generative AI tool that is prompt based and can generate UIs on the fly pretty quick.

And, you know, it's like, well, this could be an interesting brainstorming tool, right, with your stakeholders, potentially to do some early exploration around, you know, how are we thinking? How are we reacting to that?

You know, so lots of opportunities out there, but I think at the end of the day, you know, there's a lot of value in the work that we do and the creative process, and, yeah, AI isn't going to be able to replace it.

That's right.

It's amazing. Yeah. Well, we literally had four more themes to get to. But in the interest of time, because there's a lot to share, we will stop here for a second. We wanted to open it up to questions, and I know there's gonna be some runners of microphones. So if anybody has a question for Aditi or Karsten.

Hello?

Alright.

Good afternoon. Thank you so much. My name is Derek from the Marine Corps software factory.

Aditi so, this morning I got some notes here. This morning, you gave an amazing brief. If you guys weren't there, you know, you missed out. Huge.

But part of why it was so good and so effective, was your presentation.

And so first kinda question, it's not really a question. It's an ask. Could you share that with us?

It was so well done. I try to teach effective, like, presentation skills, and part of that is the brief itself, or the PowerPoint, in the military. I'm joined with some army soldiers here. I can't tell you the countless briefs I've sat through. I literally wanted to, like, jump out of the building.

It's just so poorly done.

So with that, how important was, say, your presentation to getting Uno, you know, from thought to launch, and just in the history of the things that you've done, how much of an impact have you seen from a well put together and delivered presentation?

How did it impact the outcome? And then give us some examples if you have any, some that were really great ideas, but the presentation was so poorly done that it just never saw the light of day.

K. I've I've Energy around. Mic too.

Thank you for the kind words.

I can't take all the credit. My team has been a part of the storytelling process with me. And I'll tell you that, yes, storytelling is very important.

Now how you do it, the ways or the media that you use to tell your story can be different and can fit what you are comfortable with.

But for me personally, Uno went through more than thirty iterations, like I mentioned. So we had thirty different prototypes. And, the guy who worked on it with me, Thomas, he's in the crowd somewhere.

So he was the one behind the first iteration. So we went through a lot of visual storytelling to get buy in. We supported that with, documents and narratives as well. But without the storytelling visually being presented, as soon as we saw something, people would react in the room and say, that's wrong.

That's it. And that got them engaged in the story that we had to tell. So we just had to be okay around the vulnerability that comes with showcasing your ideas, because visual storytelling really just shows it all. It tells you exactly what you mean by a bunch of words.

So, again, it takes a lot of courage to do that, but we've been doing that. It's worked out beautifully. There have been ways. And, it takes me time to finesse the presentation as well because I've been, in spaces where we've done presentations, but, we've not had that outcome.

But we've revisited the same presentation. I also talked about Adapt this morning. It basically started with a failed presentation that we made to our leadership. I was out on maternity leave.

But when I came back, for that, the presentation didn't go well. So, again, just went back to the storytelling, refined it, took us a couple of quarters.

But we went back. And as you saw today, Adapt is on its way for a reimagining of the workflow. So I can't take all the credit, but, yes, visual storytelling is very important. Happy to share slides. Yeah.

Very important. K. Thank you.

Thank you.

Alright. Well, thank you. Let's give a round of applause for Aditi and Karsten.

Thank you for sharing your wisdom and insights today. Really appreciate it.

Thank you.

Thank you.

Alright. Yeah. Awesome, man.